Daniel Chiu

Generalization by Recognizing Confusion

Jun 13, 2020

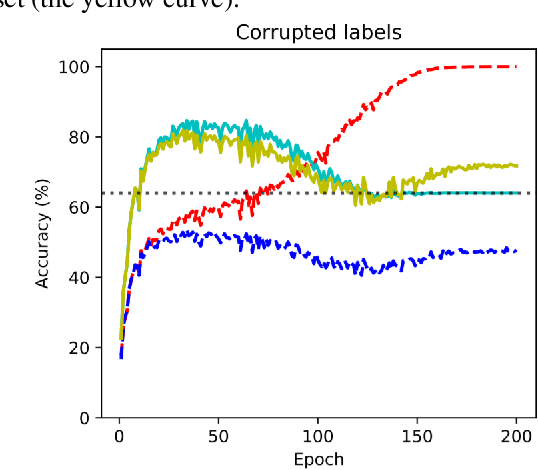

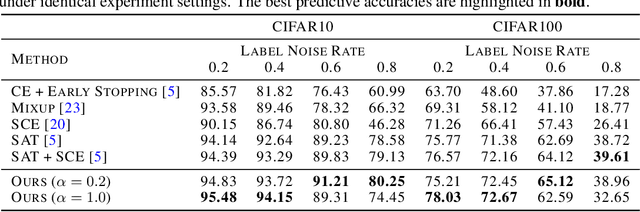

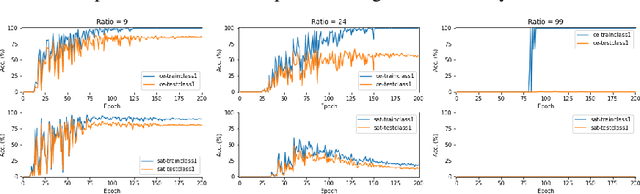

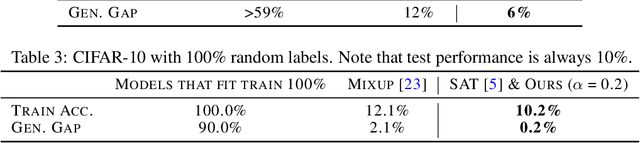

Abstract: A recently proposed technique called self-adaptive training augments modern neural networks by allowing them to adjust training labels on the fly, so that they avoid overfitting to samples that may be mislabeled or otherwise non-representative. By combining the self-adaptive objective with mixup, we further improve the accuracy of self-adaptive models for image recognition; the resulting classifier obtains state-of-the-art accuracies on datasets corrupted with label noise. Robustness to label noise implies a lower generalization gap; thus, our approach also leads to improved generalizability. We find evidence that the Rademacher complexity of these algorithms is low, suggesting a new path towards provable generalization for this type of deep learning model. Finally, we highlight a novel connection between the difficulty of accounting for rare classes and robustness under noise, as rare classes are in a sense indistinguishable from label noise. Our code can be found at https://github.com/Tuxianeer/generalizationconfusion.
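The abstract does not spell out how the two ingredients are combined, so the following is only a minimal sketch under stated assumptions, not the implementation in the linked repository. It assumes that "adjusting training labels on the fly" means keeping a soft target per sample and moving it toward the model's current prediction with a momentum term, and that mixup means taking a convex combination of two inputs and their targets with a weight drawn from a Beta distribution. The function names `update_soft_target`, `mixup_pair`, and `sample_lambda`, and the default values of `momentum` and `alpha`, are hypothetical.

```python
import random


def update_soft_target(target, prediction, momentum=0.9):
    """Move a stored soft label toward the model's current prediction.

    Assumed update rule: new = momentum * old + (1 - momentum) * prediction.
    """
    return [momentum * t + (1.0 - momentum) * p
            for t, p in zip(target, prediction)]


def sample_lambda(alpha=1.0, rng=random):
    """Draw a mixing weight in [0, 1] from Beta(alpha, alpha)."""
    return rng.betavariate(alpha, alpha)


def mixup_pair(x_a, t_a, x_b, t_b, lam):
    """Convex-combine two inputs and their (soft) targets with weight lam."""
    x = [lam * a + (1.0 - lam) * b for a, b in zip(x_a, x_b)]
    t = [lam * a + (1.0 - lam) * b for a, b in zip(t_a, t_b)]
    return x, t


# Toy example: two flattened "images" of two pixels, two classes.
label_a = [1.0, 0.0]       # possibly noisy one-hot label
prediction_a = [0.2, 0.8]  # the model disagrees with the given label
soft_a = update_soft_target(label_a, prediction_a, momentum=0.9)

# Mix sample a (with its adjusted target) and sample b.
mixed_x, mixed_t = mixup_pair([1.0, 0.0], soft_a, [0.0, 1.0], [0.0, 1.0], lam=0.25)
```

In this sketch the mixed target is built from the adjusted soft labels rather than the original, possibly noisy, labels; whether the paper mixes before or after the label update is not stated in the abstract.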
