Damitha Seneviratne

Anomaly Detection using Deep Reconstruction and Forecasting for Autonomous Systems

Jun 25, 2020

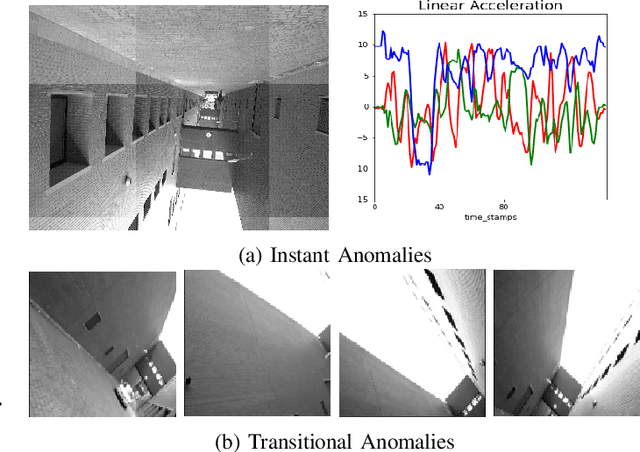

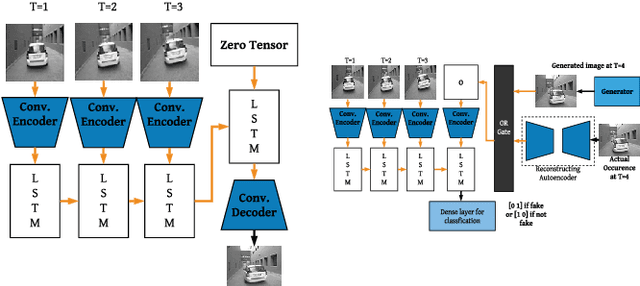

Abstract:We propose self-supervised deep algorithms to detect anomalies in heterogeneous autonomous systems using frontal camera video and IMU readings. Given that the video and IMU data are not synchronized, each of them are analyzed separately. The vision-based system, which utilizes a conditional GAN, analyzes immediate-past three frames and attempts to predict the next frame. The frame is classified as either an anomalous case or a normal case based on the degree of difference estimated using the prediction error and a threshold. The IMU-based system utilizes two approaches to classify the timestamps; the first being an LSTM autoencoder which reconstructs three consecutive IMU vectors and the second being an LSTM forecaster which is utilized to predict the next vector using the previous three IMU vectors. Based on the reconstruction error, the prediction error, and a threshold, the timestamp is classified as either an anomalous case or a normal case. The composition of algorithms won runners up at the IEEE Signal Processing Cup anomaly detection challenge 2020. In the competition dataset of camera frames consisting of both normal and anomalous cases, we achieve a test accuracy of 94% and an F1-score of 0.95. Furthermore, we achieve an accuracy of 100% on a test set containing normal IMU data, and an F1-score of 0.98 on the test set of abnormal IMU data.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge