Dale R. Durran

A Practical Probabilistic Benchmark for AI Weather Models

Jan 27, 2024

Abstract: Since the weather is chaotic, forecasts aim to predict the distribution of future states rather than make a single prediction. Recently, multiple data-driven weather models have emerged claiming breakthroughs in skill. However, these have mostly been benchmarked using deterministic skill scores, and little is known about their probabilistic skill. Unfortunately, it is hard to fairly compare AI weather models in a probabilistic sense, since variations in choice of ensemble initialization, definition of state, and noise injection methodology become confounding. Moreover, even obtaining ensemble forecast baselines is a substantial engineering challenge given the data volumes involved. We sidestep both problems by applying a decades-old idea -- lagged ensembles -- whereby an ensemble can be constructed from a moderately-sized library of deterministic forecasts. This allows the first parameter-free intercomparison of leading AI weather models' probabilistic skill against an operational baseline. The results reveal that two leading AI weather models, i.e., GraphCast and Pangu, are tied on the probabilistic CRPS metric even though the former outperforms the latter in deterministic scoring. We also reveal how multiple time-step loss functions, which many data-driven weather models have employed, are counter-productive: they improve deterministic metrics at the cost of increased dissipation, deteriorating probabilistic skill. This is confirmed through ablations applied to a spherical Fourier Neural Operator (SFNO) approach to AI weather forecasting. Separate SFNO ablations modulating effective resolution reveal it has a useful effect on ensemble dispersion relevant to achieving good ensemble calibration. We hope these and forthcoming insights from lagged ensembles can help guide the development of AI weather forecasts and have thus shared the diagnostic code.
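
The lagged-ensemble idea in this abstract can be illustrated with a minimal sketch. It is not the authors' diagnostic code. The array layout `forecasts[init, lead]`, the symmetric window `half_width`, and the function names are assumptions made for illustration. The CRPS function uses the standard ensemble estimator: mean absolute error of the members minus half the mean absolute difference between member pairs.

```python
import numpy as np

def lagged_ensemble(forecasts, valid_index, lead, half_width):
    """Gather deterministic forecasts that share one valid time.

    Assumed (hypothetical) layout: forecasts[i, l] is the forecast
    initialized at time step i with a lead of l steps, so it is valid
    at step i + l. Members are taken from leads lead - half_width
    through lead + half_width, each with the matching initial time.
    """
    members = []
    for lag in range(-half_width, half_width + 1):
        member_lead = lead + lag
        init = valid_index - member_lead
        if member_lead < 0 or init < 0:
            raise ValueError("window extends outside the forecast library")
        members.append(forecasts[init, member_lead])
    return np.stack(members)

def crps_ensemble(members, obs):
    """Ensemble CRPS estimate; axis 0 of `members` is the member axis."""
    members = np.asarray(members, dtype=float)
    # Mean absolute error of the members against the observation.
    error_term = np.mean(np.abs(members - obs), axis=0)
    # Half the mean absolute difference over all member pairs.
    pairwise = np.abs(members[:, None] - members[None, :])
    spread_term = 0.5 * np.mean(pairwise, axis=(0, 1))
    return error_term - spread_term
```

For a one-member ensemble the spread term vanishes and the score reduces to the absolute error, which is why CRPS is often described as a probabilistic generalization of mean absolute error.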

Sub-seasonal forecasting with a large ensemble of deep-learning weather prediction models

Feb 09, 2021

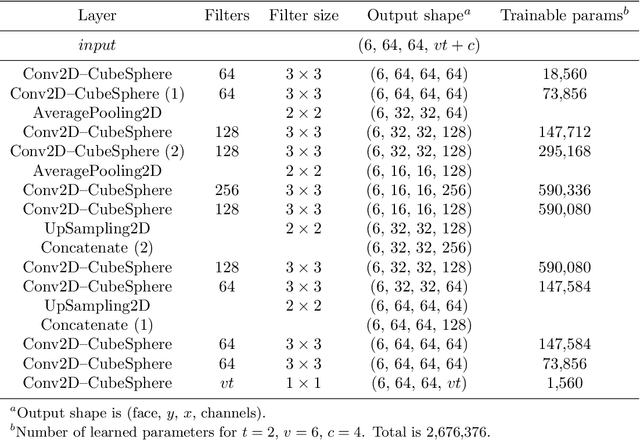

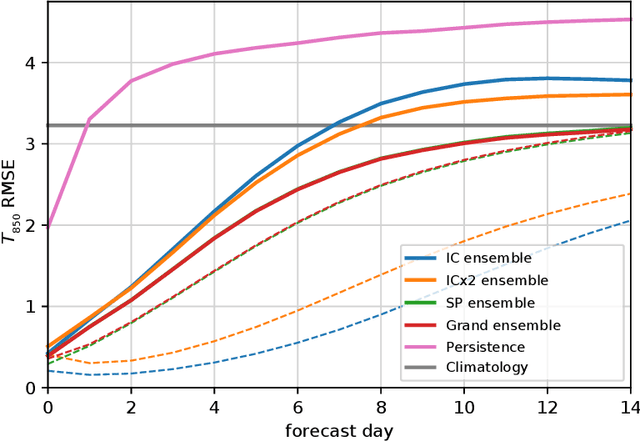

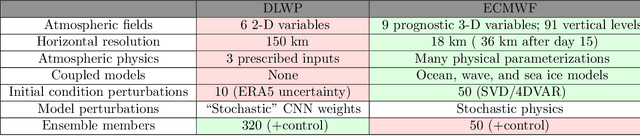

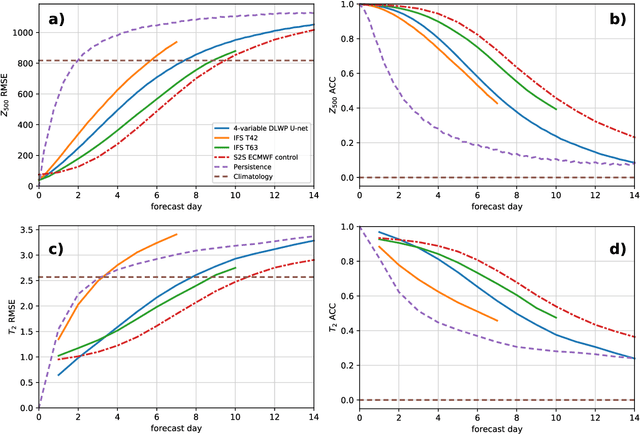

Abstract: We present an ensemble prediction system using a Deep Learning Weather Prediction (DLWP) model that recursively predicts key atmospheric variables with six-hour time resolution. This model uses convolutional neural networks (CNNs) on a cubed sphere grid to produce global forecasts. The approach is computationally efficient, requiring just three minutes on a single GPU to produce a 320-member set of six-week forecasts at 1.4° resolution. Ensemble spread is primarily produced by randomizing the CNN training process to create a set of 32 DLWP models with slightly different learned weights. Although our DLWP model does not forecast precipitation, it does forecast total column water vapor, and it gives a reasonable 4.5-day deterministic forecast of Hurricane Irma. In addition to simulating mid-latitude weather systems, it spontaneously generates tropical cyclones in a one-year free-running simulation. Averaged globally and over a two-year test set, the ensemble mean RMSE retains skill relative to climatology beyond two weeks, with anomaly correlation coefficients remaining above 0.6 through six days. Our primary application is to subseasonal-to-seasonal (S2S) forecasting at lead times from two to six weeks. Current forecast systems have low skill in predicting one- or two-week-average weather patterns at S2S time scales. The continuous ranked probability score (CRPS) and the ranked probability skill score (RPSS) show that the DLWP ensemble is only modestly inferior in performance to the European Centre for Medium-Range Weather Forecasts (ECMWF) S2S ensemble over land at lead times of 4 and 5-6 weeks. At shorter lead times, the ECMWF ensemble performs better than DLWP.

Improving data-driven global weather prediction using deep convolutional neural networks on a cubed sphere

Mar 15, 2020

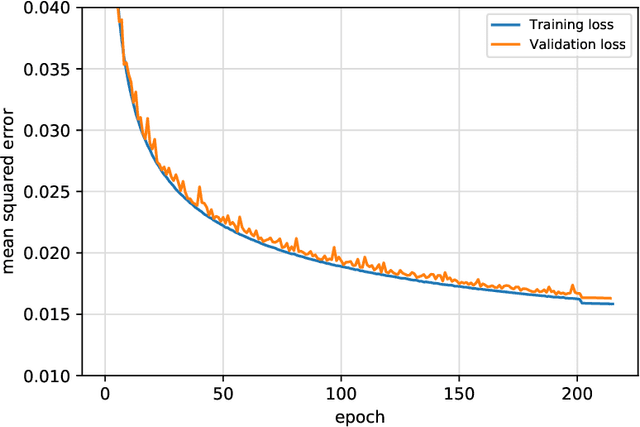

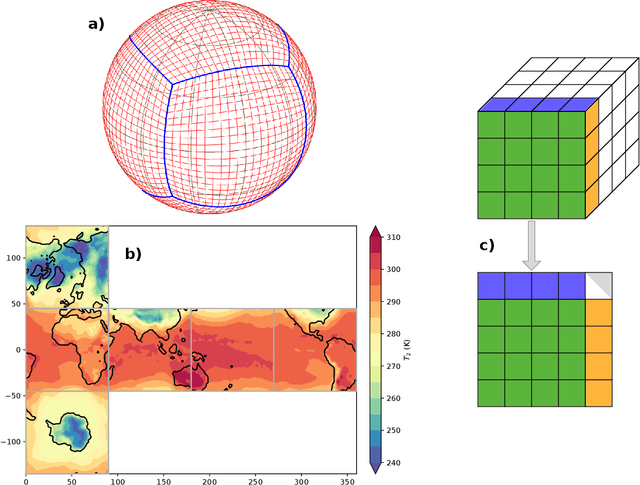

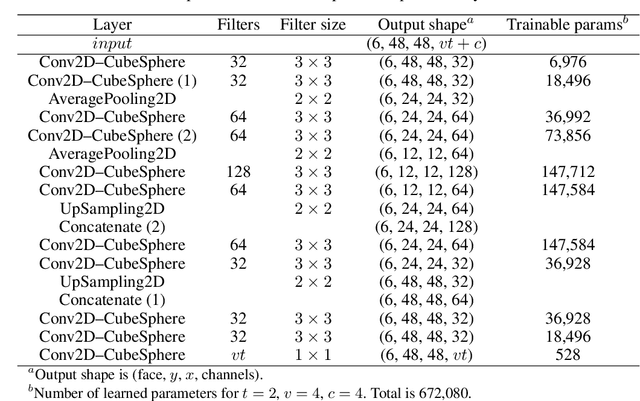

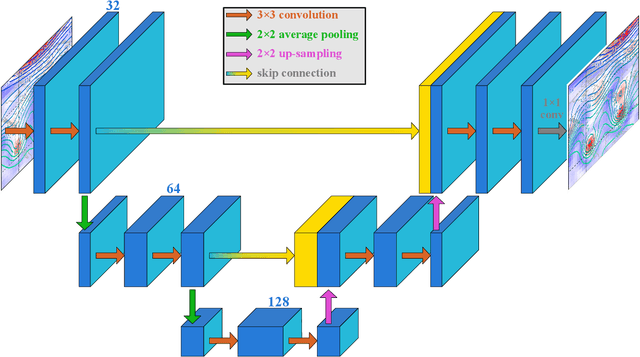

Abstract: We present a significantly improved data-driven global weather forecasting framework using a deep convolutional neural network (CNN) to forecast several basic atmospheric variables on a global grid. New developments in this framework include an offline volume-conservative mapping to a cubed-sphere grid, improvements to the CNN architecture, and the minimization of the loss function over multiple steps in a prediction sequence. The cubed-sphere remapping minimizes the distortion on the cube faces on which convolution operations are performed and provides natural boundary conditions for padding in the CNN. Our improved model produces weather forecasts that are indefinitely stable and produce realistic weather patterns at lead times of several weeks and longer. For short- to medium-range forecasting, our model significantly outperforms persistence, climatology, and a coarse-resolution dynamical numerical weather prediction (NWP) model. Unsurprisingly, our forecasts are worse than those from a high-resolution state-of-the-art operational NWP system. Our data-driven model is able to learn to forecast complex surface temperature patterns from few input atmospheric state variables. On annual time scales, our model produces a realistic seasonal cycle driven solely by the prescribed variation in top-of-atmosphere solar forcing. Although it is currently less accurate than operational weather forecasting models, our data-driven CNN executes much faster than those models, suggesting that machine learning could prove to be a valuable tool for large-ensemble forecasting.
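
The abstract above mentions minimizing the loss over multiple steps in a prediction sequence. A minimal sketch of that general idea follows, assuming a mean-squared-error loss averaged over an autoregressive rollout. The names `multi_step_loss` and `step_fn` are hypothetical, and this is not the paper's training code.

```python
import numpy as np

def multi_step_loss(step_fn, x0, targets):
    """Average MSE over an autoregressive rollout.

    step_fn : callable mapping one state to the next (stands in for
              the trained network; hypothetical name).
    x0      : initial state array.
    targets : sequence of truth states, targets[k] valid k + 1 steps
              after x0.
    """
    x = np.asarray(x0, dtype=float)
    total = 0.0
    for target in targets:
        # Feed the model its own previous output, not the truth.
        x = step_fn(x)
        total += np.mean((x - np.asarray(target, dtype=float)) ** 2)
    return total / len(targets)
```

Because each step consumes the model's own output, errors that grow during the rollout are penalized directly, which a single-step loss cannot do.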