Dafei Qiu

Weakly-Supervised Cross-Domain Segmentation of Electron Microscopy with Sparse Point Annotation

Mar 31, 2024

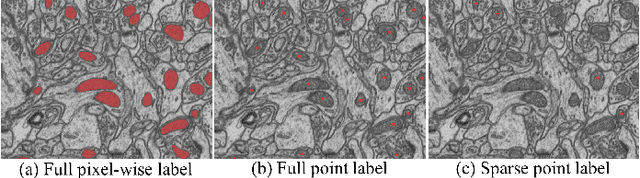

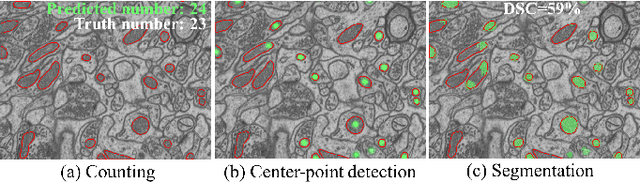

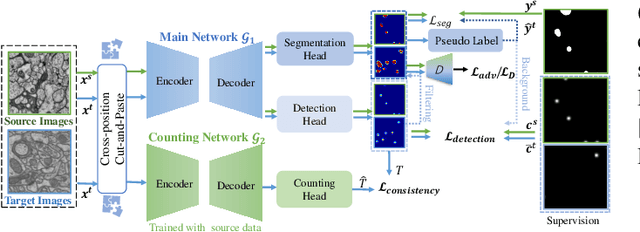

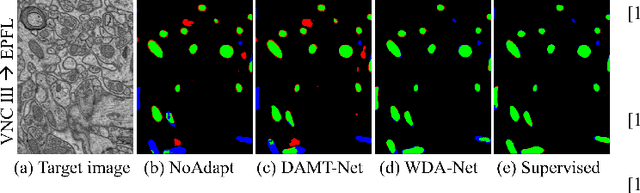

Abstract:Accurate segmentation of organelle instances from electron microscopy (EM) images plays an essential role in many neuroscience researches. However, practical scenarios usually suffer from high annotation costs, label scarcity, and large domain diversity. While unsupervised domain adaptation (UDA) that assumes no annotation effort on the target data is promising to alleviate these challenges, its performance on complicated segmentation tasks is still far from practical usage. To address these issues, we investigate a highly annotation-efficient weak supervision, which assumes only sparse center-points on a small subset of object instances in the target training images. To achieve accurate segmentation with partial point annotations, we introduce instance counting and center detection as auxiliary tasks and design a multitask learning framework to leverage correlations among the counting, detection, and segmentation, which are all tasks with partial or no supervision. Building upon the different domain-invariances of the three tasks, we enforce counting estimation with a novel soft consistency loss as a global prior for center detection, which further guides the per-pixel segmentation. To further compensate for annotation sparsity, we develop a cross-position cut-and-paste for label augmentation and an entropy-based pseudo-label selection. The experimental results highlight that, by simply using extremely weak annotation, e.g., 15\% sparse points, for model training, the proposed model is capable of significantly outperforming UDA methods and produces comparable performance as the supervised counterpart. The high robustness of our model shown in the validations and the low requirement of expert knowledge for sparse point annotation further improve the potential application value of our model.

WDA-Net: Weakly-Supervised Domain Adaptive Segmentation of Electron Microscopy

Oct 30, 2022

Abstract:Accurate segmentation of organelle instances, e.g., mitochondria, is essential for electron microscopy analysis. Despite the outstanding performance of fully supervised methods, they highly rely on sufficient per-pixel annotated data and are sensitive to domain shift. Aiming to develop a highly annotation-efficient approach with competitive performance, we focus on weakly-supervised domain adaptation (WDA) with a type of extremely sparse and weak annotation demanding minimal annotation efforts, i.e., sparse point annotations on only a small subset of object instances. To reduce performance degradation arising from domain shift, we explore multi-level transferable knowledge through conducting three complementary tasks, i.e., counting, detection, and segmentation, constituting a task pyramid with different levels of domain invariance. The intuition behind this is that after investigating a related source domain, it is much easier to spot similar objects in the target domain than to delineate their fine boundaries. Specifically, we enforce counting estimation as a global constraint to the detection with sparse supervision, which further guides the segmentation. A cross-position cut-and-paste augmentation is introduced to further compensate for the annotation sparsity. Extensive validations show that our model with only 15% point annotations can achieve comparable performance as supervised models and shows robustness to annotation selection.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge