Dae-Young Yun

Neural Window Decoder for SC-LDPC Codes

Nov 28, 2024

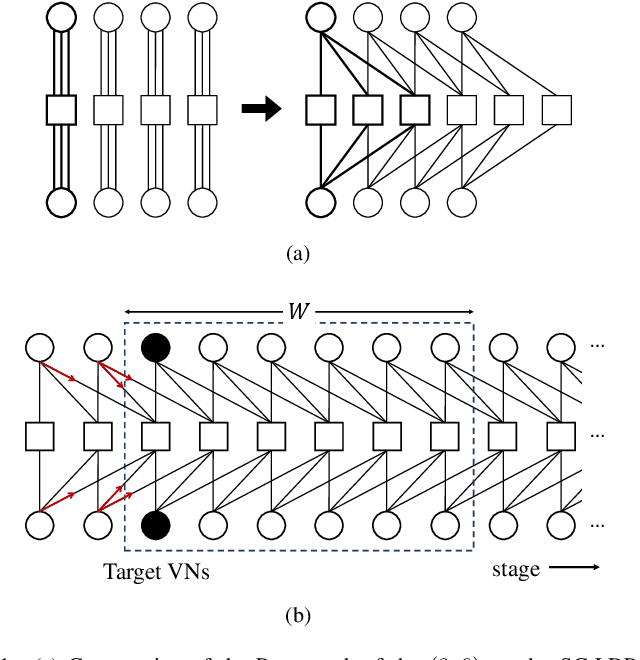

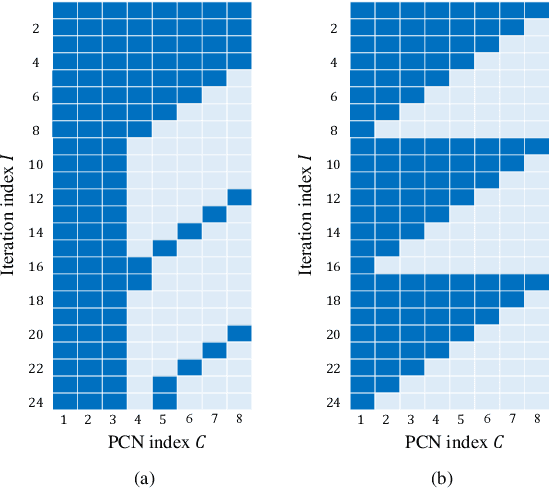

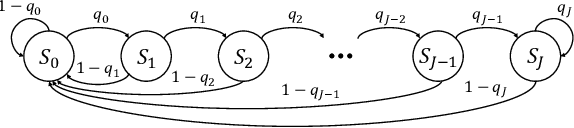

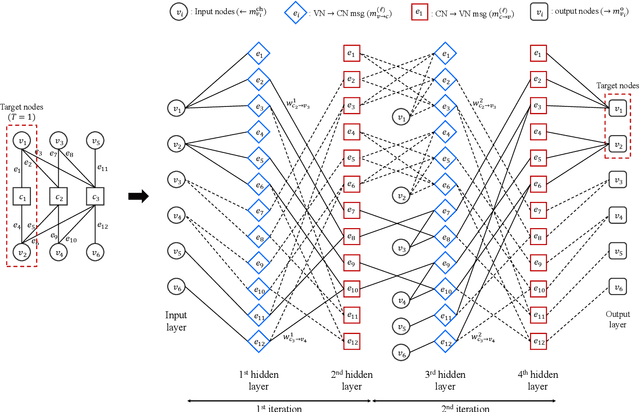

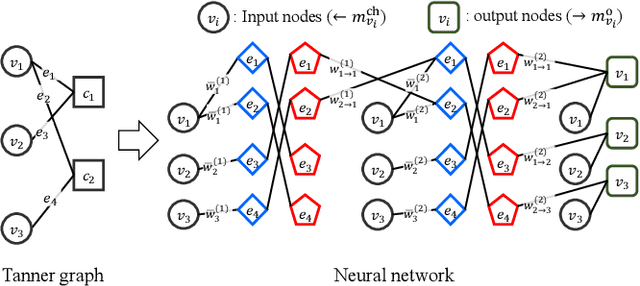

Abstract: In this paper, we propose a neural window decoder (NWD) for spatially coupled low-density parity-check (SC-LDPC) codes. The proposed NWD retains the conventional window decoder (WD) process but incorporates trainable neural weights. To train the weights of NWD, we introduce two novel training strategies. First, we restrict the loss function to target variable nodes (VNs) of the window, which prunes the neural network and accordingly enhances training efficiency. Second, we employ the active learning technique with a normalized loss term to prevent the training process from biasing toward specific training regions. Next, we develop a systematic method to derive non-uniform schedules for the NWD based on the training results. We introduce trainable damping factors that reflect the relative importance of check node (CN) updates. By skipping updates with less importance, we can omit 41% of CN updates without performance degradation compared to the conventional WD. Lastly, we address the error propagation problem inherent in SC-LDPC codes by deploying a complementary weight set, which is activated when an error is detected in the previous window. This adaptive decoding strategy effectively mitigates error propagation without requiring modifications to the code and decoder structures.
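
The idea of deriving a non-uniform schedule from trained damping factors can be illustrated with a minimal sketch. Everything here is an assumption made for illustration and is not taken from the paper: the convention that a damping factor weights the previous message (so a factor near 1 marks an unimportant update), the names `damped_update` and `derive_schedule`, and the threshold value.

```python
import numpy as np

def damped_update(old_msg, new_msg, gamma):
    # Assumed convention: gamma weights the OLD message, so gamma close to 1
    # means the new CN update barely changes anything.
    return gamma * old_msg + (1.0 - gamma) * new_msg

def derive_schedule(damping, threshold):
    """Return a boolean mask that is True where a CN update is kept.

    damping:   array of trained damping factors, e.g. indexed by
               (iteration, CN position in the window). Hypothetical layout.
    threshold: minimum importance (1 - gamma) required to keep an update.
    """
    damping = np.asarray(damping, dtype=float)
    importance = 1.0 - damping
    return importance >= threshold

# Toy damping factors: 2 iterations x 2 CN positions (made-up values).
gammas = [[0.10, 0.95],
          [0.50, 0.99]]
keep = derive_schedule(gammas, threshold=0.1)
skipped_fraction = 1.0 - keep.mean()
```

With these toy values, the second CN position is skipped in both iterations, so half of the updates are omitted. The skipped fraction reported in the abstract comes from the authors' trained factors, not from a rule like this one.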

Boosted Neural Decoders: Achieving Extreme Reliability of LDPC Codes for 6G Networks

May 22, 2024

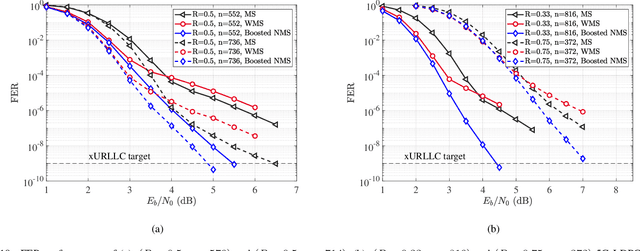

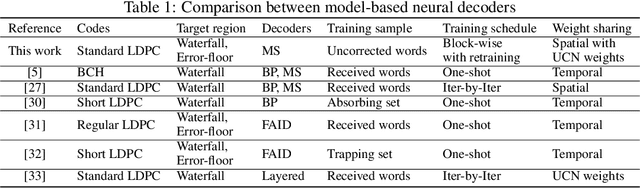

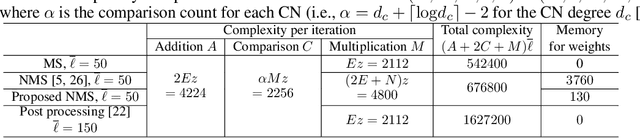

Abstract: Ensuring extremely high reliability is essential for channel coding in 6G networks. The next generation of ultra-reliable and low-latency communications (xURLLC) scenario within 6G networks requires a frame error rate (FER) below $10^{-9}$. However, low-density parity-check (LDPC) codes, the standard in 5G new radio (NR), encounter a challenge known as the error floor phenomenon, which makes such low rates difficult to achieve. To tackle this problem, we introduce an innovative solution: the boosted neural min-sum (NMS) decoder. This decoder operates identically to conventional NMS decoders, but is trained by novel training methods including: i) boosting learning with uncorrected vectors, ii) block-wise training schedule to address the vanishing gradient issue, iii) dynamic weight sharing to minimize the number of trainable weights, iv) transfer learning to reduce the required sample count, and v) data augmentation to expedite the sampling process. Leveraging these training strategies, the boosted NMS decoder achieves state-of-the-art performance in reducing the error floor as well as superior waterfall performance. Remarkably, we fulfill the 6G xURLLC requirement for 5G LDPC codes without the severe error floor. Additionally, the boosted NMS decoder, once its weights are trained, can perform decoding without additional modules, making it highly practical for immediate application.
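
As a rough illustration of item i), boosting learning with uncorrected vectors, the sketch below filters a batch of received vectors down to those that a base decoder fails to correct. Those failures would then serve as training samples for a later decoding stage. The function name `collect_uncorrected`, the toy hard-decision decoder, and the all-zero codeword are hypothetical stand-ins, not the authors' pipeline.

```python
import numpy as np

def collect_uncorrected(decode, received, codewords):
    """Keep only the received vectors that `decode` fails to correct.

    decode:    callable mapping an LLR vector to a hard-decision bit vector
               (a stand-in for the base decoder).
    received:  iterable of LLR vectors.
    codewords: iterable of the transmitted bit vectors, same order.
    """
    failures = []
    for llr, cw in zip(received, codewords):
        if not np.array_equal(decode(llr), cw):
            failures.append(llr)
    return failures

# Toy base "decoder": plain hard decision (negative LLR -> bit 1).
def toy_decode(llr):
    return (np.asarray(llr) < 0).astype(int)

received = [np.array([1.0, 2.0, 3.0]), np.array([1.0, -2.0, 3.0])]
codewords = [np.zeros(3, dtype=int), np.zeros(3, dtype=int)]
hard_samples = collect_uncorrected(toy_decode, received, codewords)
```

Here only the second vector survives the filter, because its hard decision differs from the transmitted all-zero codeword.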

Boosting Learning for LDPC Codes to Improve the Error-Floor Performance

Oct 11, 2023

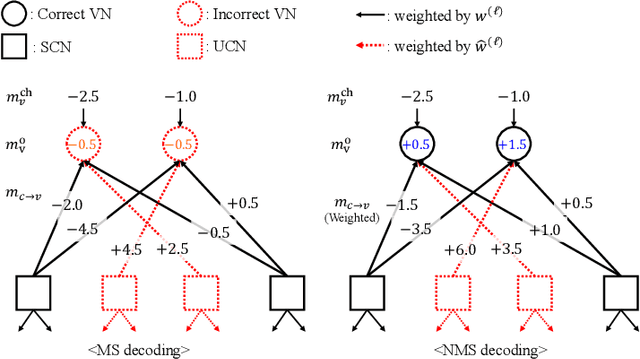

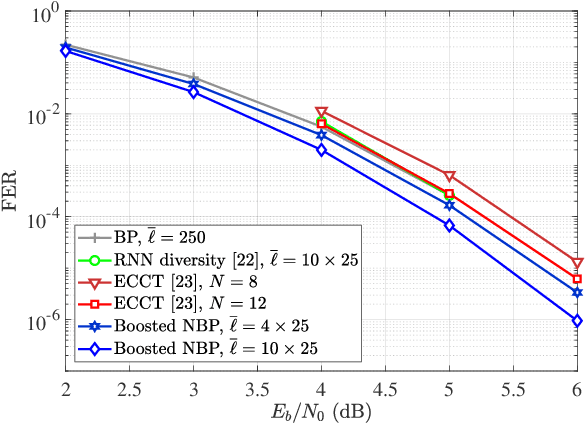

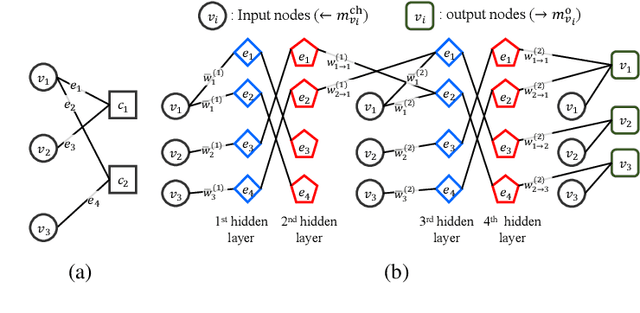

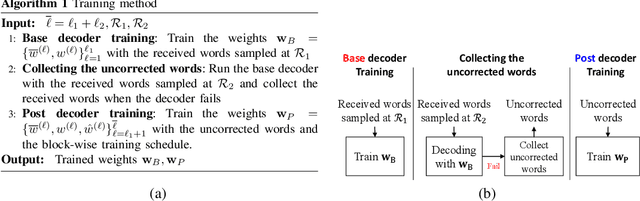

Abstract: Low-density parity-check (LDPC) codes have been successfully commercialized in communication systems due to their strong error correction ability and simple decoding process. However, the error-floor phenomenon of LDPC codes, in which the error rate stops decreasing rapidly at a certain level, makes it difficult to achieve extremely low error rates and to apply LDPC codes in scenarios demanding ultra-high reliability. In this work, we propose training methods to optimize neural min-sum (NMS) decoders that are robust to the error floor. First, by leveraging the boosting learning technique of ensemble networks, we divide the decoding network into two networks and train the post network to be specialized for uncorrected codewords that failed in the first network. Second, to address the vanishing gradient issue in training, we introduce a block-wise training schedule that locally trains a block of weights while retraining the preceding block. Lastly, we show that assigning different weights to unsatisfied check nodes effectively lowers the error floor with a minimal number of weights. By applying these training methods to standard LDPC codes, we achieve the best error-floor performance compared to other decoding methods. The proposed NMS decoder, optimized solely through novel training methods without additional modules, can be implemented into current LDPC decoders without incurring extra hardware costs. The source code is available at https://github.com/ghy1228/LDPC_Error_Floor.
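
For readers unfamiliar with NMS decoding, the following is a minimal sketch of a weighted min-sum check node update in which the scaling weight depends on whether the check is satisfied. It is a generic textbook-style update written for illustration and is not the code from the linked repository. The function name, the `satisfied` flag, and the weight values `w_sat` and `w_unsat` are assumptions.

```python
import numpy as np

def weighted_min_sum_cn(llrs_in, satisfied, w_sat=0.8, w_unsat=0.5):
    """Weighted min-sum update for one check node with degree >= 2.

    llrs_in:   incoming variable-to-check LLRs on the node's edges.
    satisfied: whether this check is currently satisfied (assumed to be
               computed elsewhere from the VN hard decisions).
    Returns the outgoing check-to-variable message for each edge.
    """
    llrs_in = np.asarray(llrs_in, dtype=float)
    signs = np.where(llrs_in < 0, -1.0, 1.0)
    mags = np.abs(llrs_in)

    # Extrinsic magnitude: minimum over all OTHER edges, obtained from the
    # two smallest magnitudes.
    order = np.argsort(mags)
    min1, min2 = mags[order[0]], mags[order[1]]
    out_mag = np.full_like(mags, min1)
    out_mag[order[0]] = min2

    # Extrinsic sign: product of all signs times the edge's own sign,
    # which equals the product of the other edges' signs.
    out_sign = np.prod(signs) * signs

    weight = w_sat if satisfied else w_unsat
    return weight * out_sign * out_mag

msgs = weighted_min_sum_cn([2.0, -1.0, 3.0], satisfied=False)
```

In a trained decoder the two weights would be learned rather than fixed, and they could also vary per iteration. The fixed defaults here only make the sketch runnable.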
