Dae Young Park

Learning Student-Friendly Teacher Networks for Knowledge Distillation

Feb 16, 2021

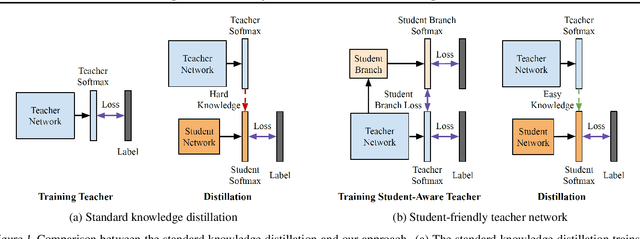

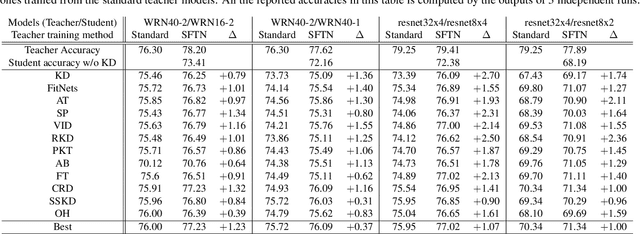

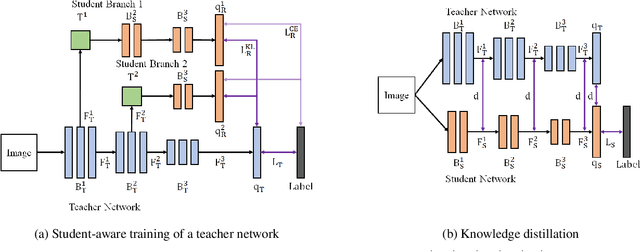

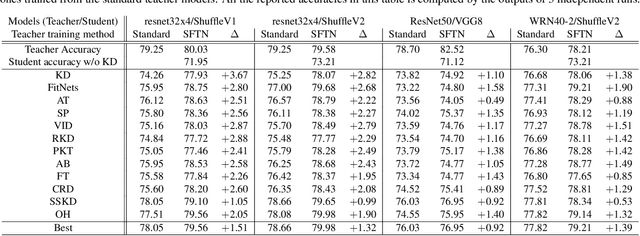

Abstract: We propose a novel knowledge distillation approach to facilitate the transfer of dark knowledge from a teacher to a student. In contrast to most existing methods, which rely on effective training of student models given pretrained teachers, we aim to learn teacher models that are friendly to students and, consequently, more appropriate for knowledge transfer. In other words, even while optimizing a teacher model, the proposed algorithm jointly learns student branches to obtain student-friendly representations. Since the main goal of our approach lies in training teacher models and the subsequent knowledge distillation procedure is straightforward, most existing knowledge distillation algorithms can adopt this technique to improve the accuracy and convergence speed of student models. The proposed algorithm demonstrates outstanding accuracy when combined with several well-known knowledge distillation techniques across various combinations of teacher and student architectures.

Arbitrary Style Transfer with Style-Attentional Networks

Jan 03, 2019

Abstract: Arbitrary style transfer aims to synthesize a content image with the style of an image that has never been seen before. Recent arbitrary style transfer algorithms either trade off content structure against style patterns or, because of their patch-based mechanism, have difficulty maintaining global and local style patterns at the same time. In this paper, we introduce a novel style-attentional network (SANet), which efficiently and flexibly decorates the local style patterns according to the semantic spatial distribution of the content image. A new identity loss function and multi-level feature embeddings also enable our SANet and decoder to preserve the content structure as much as possible while enriching the style patterns. Experimental results demonstrate that our algorithm synthesizes higher-quality stylized images in real time than state-of-the-art algorithms.
