Cuong Dang

A Curious Case of Searching for the Correlation between Training Data and Adversarial Robustness of Transformer Textual Models

Feb 18, 2024

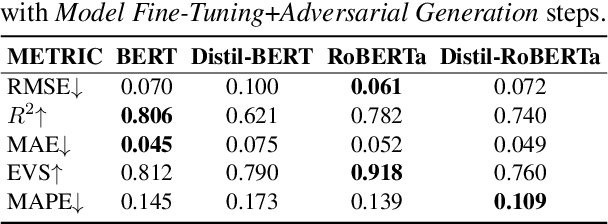

Abstract: Existing works have shown that fine-tuned textual transformer models achieve state-of-the-art predictive performance but are also vulnerable to adversarial text perturbations. Traditional adversarial evaluation is often done *only after* fine-tuning the models and ignores the training data. In this paper, we aim to show that there is also a strong correlation between training data and model robustness. To this end, we extract 13 different features representing a wide range of properties of the input fine-tuning corpora and use them to predict the adversarial robustness of the fine-tuned models. Focusing mostly on the encoder-only transformer models BERT and RoBERTa, with additional results for BART, ELECTRA, and GPT2, we provide diverse evidence to support our argument. First, empirical analyses show that (a) the extracted features can be used with a lightweight classifier such as Random Forest to effectively predict the attack success rate and (b) the features with the most influence on model robustness have a clear correlation with that robustness. Second, our framework can be used as a fast and effective additional tool for robustness evaluation since it (a) saves 30x-193x runtime compared to the traditional technique, (b) is transferable across models, (c) can be used under adversarial training, and (d) is robust to statistical randomness. Our code will be publicly available.
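To make the idea of corpus-level features concrete, here is a minimal sketch in Python. The abstract does not list the 13 features, so the ones below (`avg_tokens`, `vocab_size`, `type_token_ratio`, `label_entropy`) and the function name `corpus_features` are illustrative assumptions, not the authors' feature set. In the framework described above, a vector like this would be fed to a lightweight model such as Random Forest to predict the attack success rate.

```python
import math
from collections import Counter


def corpus_features(texts, labels):
    """Compute a few simple corpus-level statistics (hypothetical features)."""
    # Whitespace tokenization is an assumption made for brevity.
    tokens_per_doc = [t.lower().split() for t in texts]
    all_tokens = [tok for doc in tokens_per_doc for tok in doc]
    vocab = set(all_tokens)

    # Shannon entropy (in bits) of the label distribution.
    label_counts = Counter(labels)
    n = len(labels)
    label_entropy = -sum(
        (c / n) * math.log2(c / n) for c in label_counts.values()
    )

    return {
        "num_examples": len(texts),
        "avg_tokens": len(all_tokens) / len(texts),
        "vocab_size": len(vocab),
        "type_token_ratio": len(vocab) / len(all_tokens),
        "label_entropy": label_entropy,
    }


# Toy fine-tuning corpus (made-up sentences, for illustration only).
texts = [
    "the movie was great",
    "the plot was dull",
    "great cast and great score",
]
labels = [1, 0, 1]
features = corpus_features(texts, labels)
```

Because such features are computed from the training data alone, no adversarial attack has to be run against the fine-tuned model to obtain them, which is where the runtime saving reported above would come from.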

Non-invasive super-resolution imaging through scattering media using fluctuating speckles

Sep 16, 2021

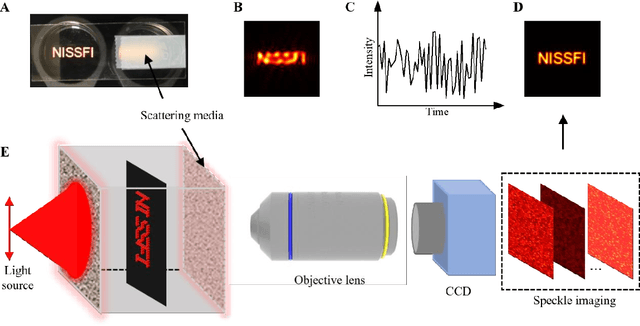

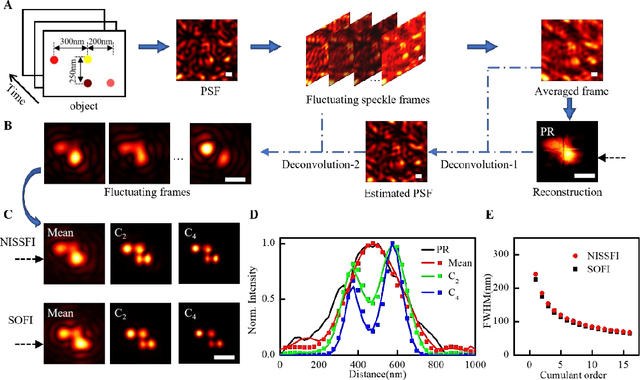

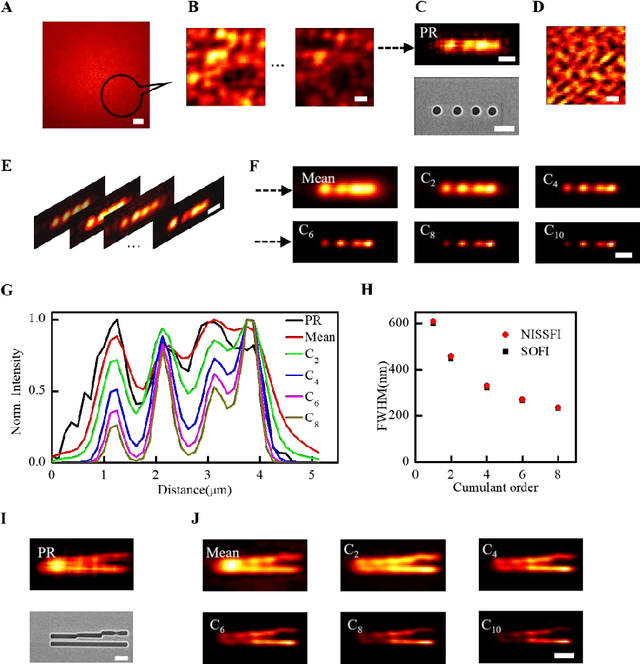

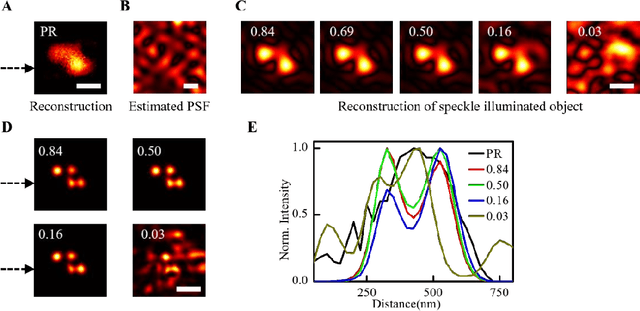

Abstract: Extending super-resolution imaging techniques to objects hidden in strongly scattering media could revolutionize the technical analysis of much broader categories of samples, such as biological tissues. The main challenge is the media's inhomogeneous structures, which scramble the light path and create noise-like speckle patterns, hindering the object's visualization even at a low-resolution level. Here, we propose a computational method relying on the object's spatial and temporal fluctuation to visualize nanoscale objects through scattering media non-invasively. The object fluctuation can be produced by random speckle illumination, illumination through dynamic scattering media, or flickering emitters. The optical memory effect allows us to recover the object at diffraction-limited resolution and estimate the point spread function (PSF). Multiple images of the fluctuating object are obtained by deconvolution, then super-resolution images are achieved by computing the high-order cumulants. The non-linearity of the high-order cumulants significantly suppresses the noise and artifacts in the resulting images and enhances the resolution by a factor of $\sqrt{N}$, where $N$ is the cumulant order. Our non-invasive super-resolution speckle fluctuation imaging (NISFFI) presents a nanoscopy technique with very simple hardware to visualize samples behind scattering media.
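The $\sqrt{N}$ factor can be checked numerically with a short sketch. It assumes a one-dimensional Gaussian PSF and uses the standard property of cumulant-based fluctuation imaging that, for independently fluctuating emitters, the $N$-th order cumulant image has an effective PSF equal to the original PSF raised to the $N$-th power. The names `gaussian_psf` and `fwhm` are illustrative, not taken from the paper.

```python
import math


def gaussian_psf(x, sigma):
    """Unnormalized 1D Gaussian PSF (assumed shape, for illustration)."""
    return math.exp(-x * x / (2.0 * sigma * sigma))


def fwhm(profile, xs):
    """Full width at half maximum of a sampled, single-peaked profile."""
    half = max(profile) / 2.0
    above = [x for x, p in zip(xs, profile) if p >= half]
    return above[-1] - above[0]


sigma = 1.0
xs = [i * 0.001 for i in range(-5000, 5001)]  # sample grid on [-5, 5]
base = [gaussian_psf(x, sigma) for x in xs]

# Ratio of the original width to the width of the PSF raised to each order.
ratios = {}
for order in (2, 3, 4):
    powered = [p ** order for p in base]
    ratios[order] = fwhm(base, xs) / fwhm(powered, xs)
```

For a Gaussian, raising the PSF to the power $N$ replaces $\sigma$ with $\sigma/\sqrt{N}$, so each entry of `ratios` should be close to $\sqrt{N}$ up to the sampling step of the grid.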
