Craig Schindler

Nonholonomic Yaw Control of an Underactuated Flying Robot with Model-based Reinforcement Learning

Sep 02, 2020

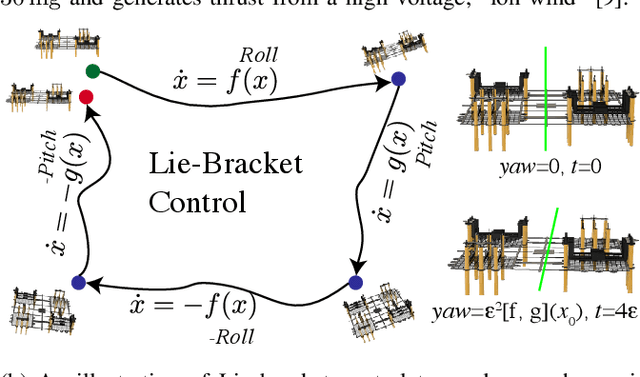

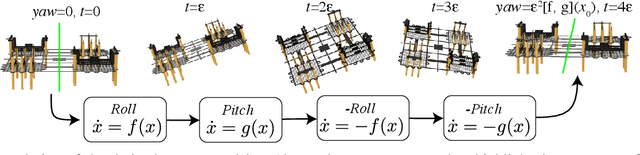

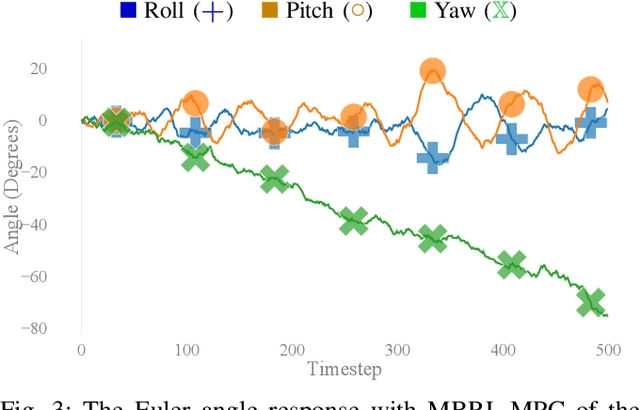

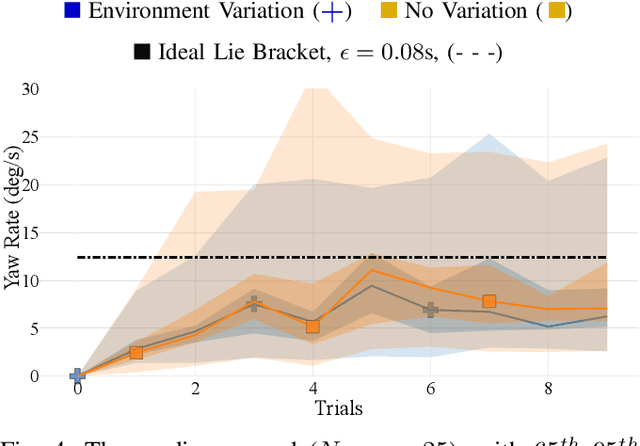

Abstract:Nonholonomic control is a candidate to control nonlinear systems with path-dependant states. We investigate an underactuated flying micro-aerial-vehicle, the ionocraft, that requires nonholonomic control in the yaw-direction for complete attitude control. Deploying an analytical control law involves substantial engineering design and is sensitive to inaccuracy in the system model. With specific assumptions on assembly and system dynamics, we derive a Lie bracket for yaw control of the ionocraft. As a comparison to the significant engineering effort required for an analytic control law, we implement a data-driven model-based reinforcement learning yaw controller in a simulated flight task. We demonstrate that a simple model-based reinforcement learning framework can match the derived Lie bracket control (in yaw rate and chosen actions) in a few minutes of flight data, without a pre-defined dynamics function. This paper shows that learning-based approaches are useful as a tool for synthesis of nonlinear control laws previously only addressable through expert-based design.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge