Cory Hymel

Analysis of LLMs vs Human Experts in Requirements Engineering

Jan 31, 2025

Abstract: Most research on applying Large Language Models (LLMs) to software development has focused on code generation. There is little literature on LLMs' impact on requirements engineering (RE), which deals with the process of developing and verifying system requirements. Within RE, there is a subdiscipline of requirements elicitation, which is the practice of discovering and documenting requirements for a system from users, customers, and other stakeholders. In this analysis, we compare LLMs' ability to elicit requirements of a software system with that of a human expert in a time-boxed and prompt-boxed study. We found LLM-generated requirements were evaluated as more aligned (+1.12) than human-generated requirements, with a trend of being more complete (+10.2%). Conversely, we found users tended to believe that solutions they perceived as more aligned had been generated by human experts. Furthermore, while LLM-generated documents scored higher and were produced at 720x the speed, their cost was, on average, only 0.06% that of a human expert. Overall, these findings indicate that LLMs will play an increasingly important role in requirements engineering by improving requirements definitions, enabling more efficient resource allocation, and reducing overall project timelines.

Improving Performance of Commercially Available AI Products in a Multi-Agent Configuration

Oct 29, 2024

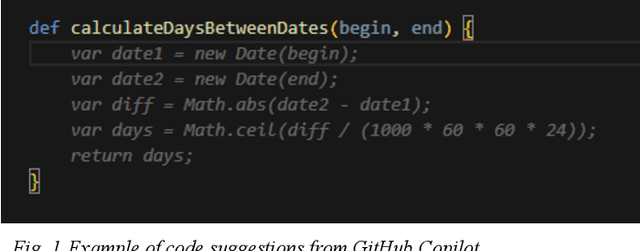

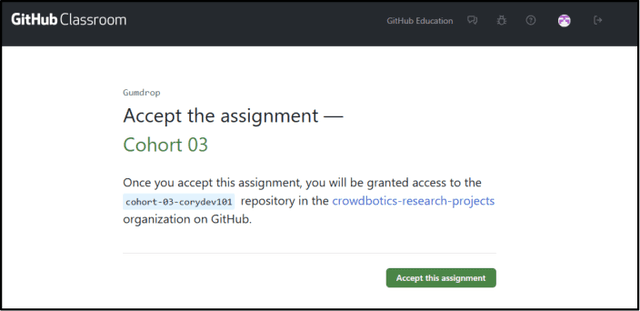

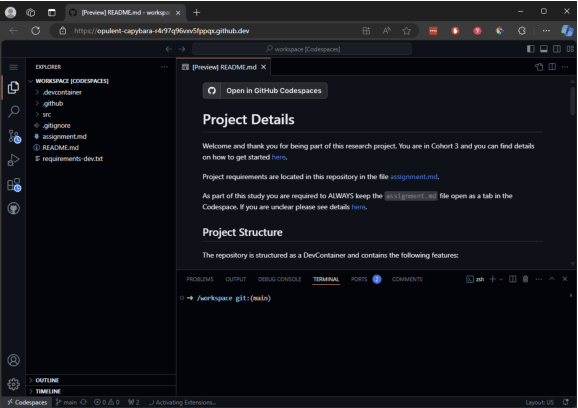

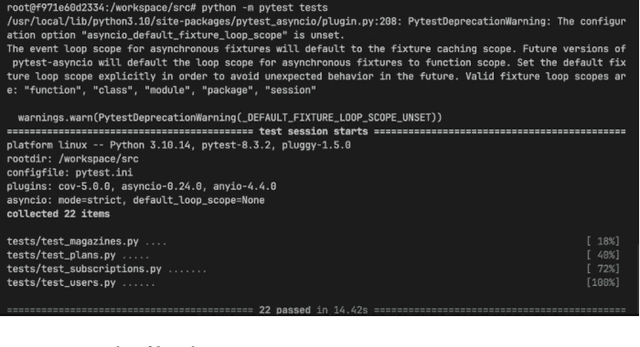

Abstract: In recent years, with the rapid advancement of large language models (LLMs), multi-agent systems have become increasingly capable of practical application. At the same time, the software development industry has seen a number of new AI-powered tools that improve the software development lifecycle (SDLC). Academically, much attention has been paid to the role of multi-agent systems in the SDLC. And, while single-agent systems have frequently been examined in real-world applications, we have seen comparatively few real-world examples of publicly available commercial tools working together in a multi-agent system with measurable improvements. In this experiment we test context sharing between Crowdbotics PRD AI, a tool for generating software requirements using AI, and GitHub Copilot, an AI pair-programming tool. By sharing business requirements from PRD AI, we improve the code suggestion capabilities of GitHub Copilot by 13.8% and developer task success rate by 24.5% -- demonstrating a real-world example of commercially available AI systems working together with improved outcomes.
