Colby Fronk

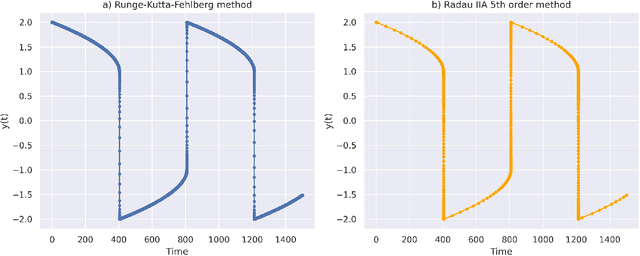

Training Stiff Neural Ordinary Differential Equations with Implicit Single-Step Methods

Oct 08, 2024

Abstract: Stiff systems of ordinary differential equations (ODEs) are pervasive in many science and engineering fields, yet standard neural ODE approaches struggle to learn them. This limitation is the main barrier to the widespread adoption of neural ODEs. In this paper, we propose an approach based on single-step implicit schemes to enable neural ODEs to handle stiffness and demonstrate that our implicit neural ODE method can learn stiff dynamics. This work addresses a key limitation in current neural ODE methods, paving the way for their use in a wider range of scientific problems.
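To illustrate why implicit single-step schemes matter for stiff problems, here is a minimal sketch comparing explicit (forward) Euler with backward Euler on the linear test equation y' = λy. Backward Euler is used only as the simplest example of a single-step implicit scheme; the abstract does not say which schemes the paper uses, and the test problem, the step size, and the function names are assumptions chosen for illustration.

```python
import math


def explicit_euler(lam, y0, h, n_steps):
    """Forward Euler for y' = lam * y: y_{k+1} = (1 + h*lam) * y_k."""
    y = y0
    for _ in range(n_steps):
        y = y + h * lam * y
    return y


def implicit_euler(lam, y0, h, n_steps):
    """Backward Euler for y' = lam * y.

    The update y_{k+1} = y_k + h*lam*y_{k+1} is implicit in y_{k+1};
    for this linear problem it can be solved in closed form:
    y_{k+1} = y_k / (1 - h*lam).
    """
    y = y0
    for _ in range(n_steps):
        y = y / (1.0 - h * lam)
    return y


# Hypothetical stiff test problem: fast decay rate, comparatively large step.
lam = -1000.0
h = 0.01
n_steps = 100  # integrate from t = 0 to t = 1

y_explicit = explicit_euler(lam, 1.0, h, n_steps)
y_implicit = implicit_euler(lam, 1.0, h, n_steps)
y_exact = math.exp(lam * h * n_steps)  # underflows to 0.0 in double precision

print("explicit Euler:", y_explicit)  # grows without bound (|1 + h*lam| = 9 > 1)
print("implicit Euler:", y_implicit)  # decays toward zero, like the true solution
print("exact solution:", y_exact)
```

With this step size the explicit update multiplies the state by -9 at every step and diverges, while the implicit update divides it by 11 and decays, matching the qualitative behavior of the true solution. For a nonlinear right-hand side such as a neural network, the implicit update generally has no closed form and requires solving a nonlinear equation at each step.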

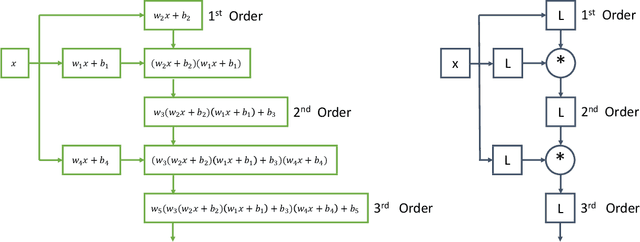

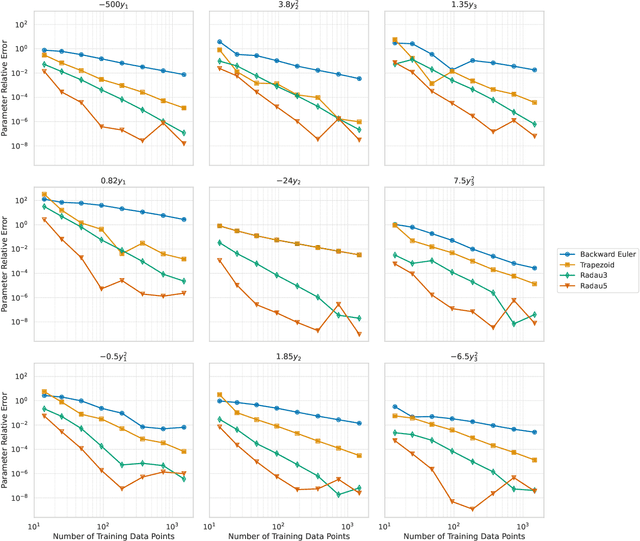

Bayesian polynomial neural networks and polynomial neural ordinary differential equations

Aug 25, 2023

Abstract: Symbolic regression with polynomial neural networks and polynomial neural ordinary differential equations (ODEs) are two recent and powerful approaches for equation recovery of many science and engineering problems. However, these methods provide point estimates for the model parameters and are currently unable to accommodate noisy data. We address this challenge by developing and validating the following Bayesian inference methods: the Laplace approximation, Markov Chain Monte Carlo (MCMC) sampling methods, and variational inference. We have found the Laplace approximation to be the best method for this class of problems. Our work can be easily extended to the broader class of symbolic neural networks to which the polynomial neural network belongs.

Interpretable Polynomial Neural Ordinary Differential Equations

Aug 09, 2022

Abstract: Neural networks have the ability to serve as universal function approximators, but they are not interpretable and don't generalize well outside of their training region. Both of these issues are problematic when trying to apply standard neural ordinary differential equations (neural ODEs) to dynamical systems. We introduce the polynomial neural ODE, which is a deep polynomial neural network inside of the neural ODE framework. We demonstrate the capability of polynomial neural ODEs to predict outside of the training region, as well as perform direct symbolic regression without additional tools such as SINDy.
