Claude Draude

Conceptual Mapping of Controversies

Apr 25, 2024

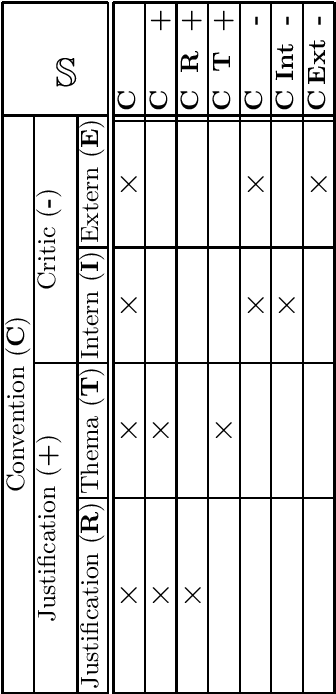

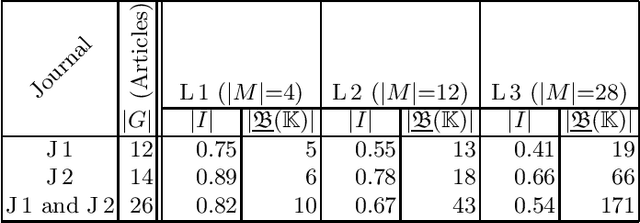

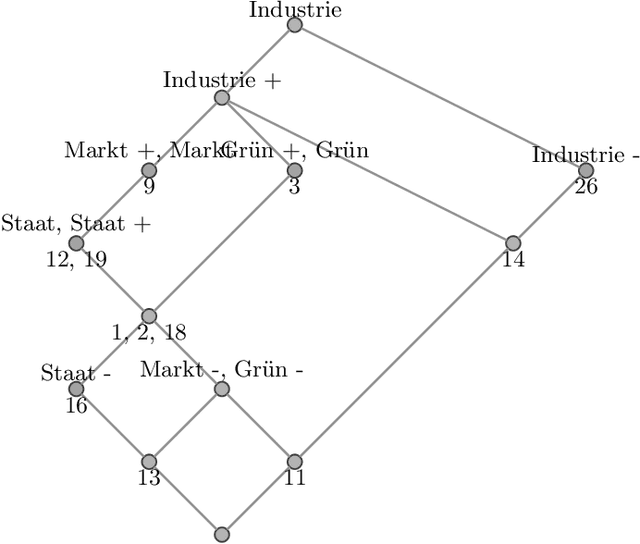

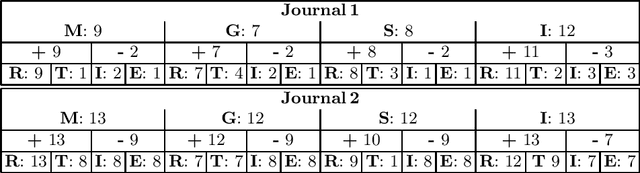

Abstract: With our work, we contribute to a qualitative analysis of the discourse on controversies in online news media. To this end, we employ Formal Concept Analysis and the economics of conventions to derive conceptual controversy maps. In our experiments, we analyze two maps from different news journals with methods from ordinal data science. We show how these methods can be used to assess the diversity, complexity, and potential bias of controversies. In addition, we discuss how the diagrams of concept lattices can be used to navigate between news articles.

Towards Feminist Intersectional XAI: From Explainability to Response-Ability

May 05, 2023

Abstract: This paper follows calls for critical approaches to computing and conceptualisations of intersectional, feminist, decolonial HCI and AI design and asks what a feminist intersectional perspective in HCXAI research and design might look like. Sketching out initial research directions and implications for explainable AI design, it suggests that explainability from a feminist perspective would include the fostering of response-ability - the capacity to critically evaluate and respond to AI systems - and would centre marginalised perspectives.

Explaining the ghosts: Feminist intersectional XAI and cartography as methods to account for invisible labour

May 05, 2023

Abstract: Contemporary automation through AI entails a substantial amount of behind-the-scenes human labour, which is often both invisibilised and underpaid. Since invisible labour, including labelling and maintenance work, is an integral part of contemporary AI systems, it remains important to sensitise users to its role. We suggest that this could be done through explainable AI (XAI) design, particularly feminist intersectional XAI. We propose the method of cartography, which stems from feminist intersectional research, to draw out a systemic perspective of AI and include dimensions of AI that pertain to invisible labour.
