Clark Peng

DialectGen: Benchmarking and Improving Dialect Robustness in Multimodal Generation

Oct 16, 2025

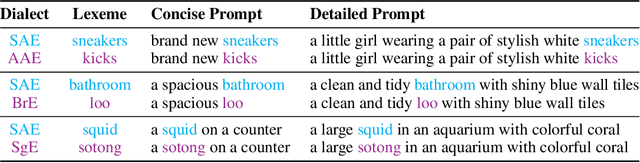

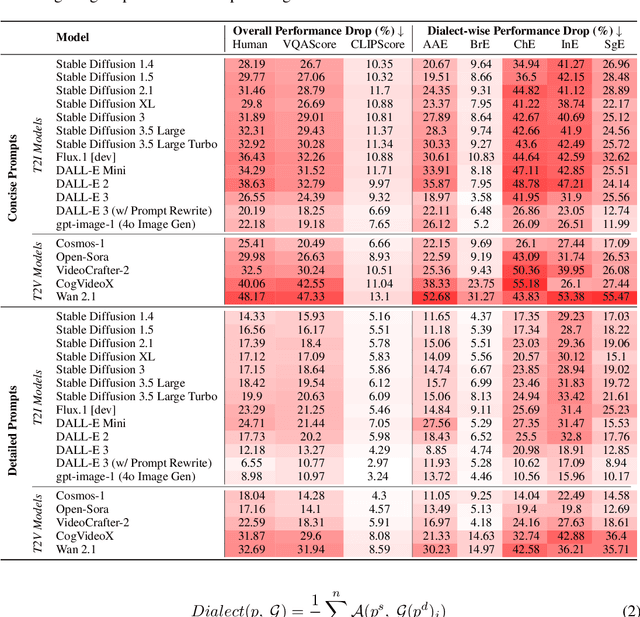

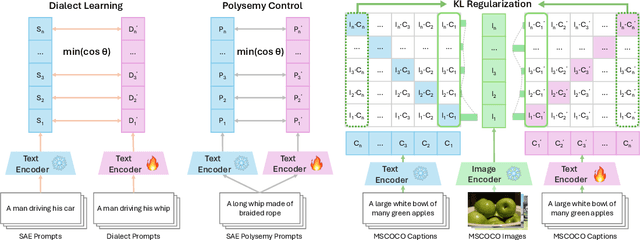

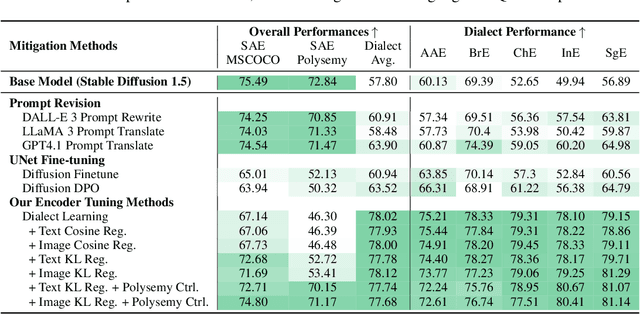

Abstract: Contact languages like English exhibit rich regional variations in the form of dialects, which are often used by dialect speakers interacting with generative models. However, can multimodal generative models effectively produce content given dialectal textual input? In this work, we study this question by constructing a new large-scale benchmark spanning six common English dialects. We work with dialect speakers to collect and verify over 4200 unique prompts and evaluate on 17 image and video generative models. Our automatic and human evaluation results show that current state-of-the-art multimodal generative models exhibit 32.26% to 48.17% performance degradation when a single dialect word is used in the prompt. Common mitigation methods such as fine-tuning and prompt rewriting can only improve dialect performance by small margins (< 7%), while potentially incurring significant performance degradation in Standard American English (SAE). To this end, we design a general encoder-based mitigation strategy for multimodal generative models. Our method teaches the model to recognize new dialect features while preserving SAE performance. Experiments on models such as Stable Diffusion 1.5 show that our method is able to simultaneously raise performance on five dialects to be on par with SAE (+34.4%), while incurring near zero cost to SAE performance.

VideoPhy-2: A Challenging Action-Centric Physical Commonsense Evaluation in Video Generation

Mar 09, 2025

Abstract: Large-scale video generative models, capable of creating realistic videos of diverse visual concepts, are strong candidates for general-purpose physical world simulators. However, their adherence to physical commonsense across real-world actions (e.g., playing tennis, backflip) remains unclear. Existing benchmarks suffer from limitations such as limited size, lack of human evaluation, sim-to-real gaps, and absence of fine-grained physical rule analysis. To address this, we introduce VideoPhy-2, an action-centric dataset for evaluating physical commonsense in generated videos. We curate 200 diverse actions and detailed prompts for video synthesis from modern generative models. We perform human evaluation that assesses semantic adherence, physical commonsense, and grounding of physical rules in the generated videos. Our findings reveal major shortcomings, with even the best model achieving only 22% joint performance (i.e., high semantic and physical commonsense adherence) on the hard subset of VideoPhy-2. We find that the models particularly struggle with conservation laws like mass and momentum. Finally, we also train VideoPhy-AutoEval, an automatic evaluator for fast, reliable assessment on our dataset. Overall, VideoPhy-2 serves as a rigorous benchmark, exposing critical gaps in video generative models and guiding future research in physically-grounded video generation. The data and code are available at https://videophy2.github.io/.
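As a rough illustration of the "joint performance" figure mentioned in the abstract, the sketch below counts a video as a joint success only when both its semantic-adherence and physical-commonsense ratings are high. The 1-5 rating scale, the threshold of 4, and the function name `joint_performance` are assumptions made for this example; the abstract does not specify them.

```python
from typing import List, Tuple


def joint_performance(ratings: List[Tuple[int, int]], threshold: int = 4) -> float:
    """Fraction of videos rated high on BOTH criteria.

    Each rating is (semantic_adherence, physical_commonsense).
    The 1-5 scale and the default threshold are assumptions for illustration.
    """
    if not ratings:
        return 0.0
    hits = sum(1 for sa, pc in ratings if sa >= threshold and pc >= threshold)
    return hits / len(ratings)


# Hypothetical ratings for four generated videos.
example_ratings = [(5, 5), (5, 2), (3, 4), (4, 4)]
score = joint_performance(example_ratings)
print(f"joint performance: {score:.2f}")
```

A joint criterion of this kind is stricter than averaging the two scores, since a video that follows the prompt but violates physics (or the reverse) contributes nothing.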

Event Detection via Probability Density Function Regression

Aug 23, 2024

Abstract: In the domain of time series analysis, particularly in event detection tasks, current methodologies predominantly rely on segmentation-based approaches, which predict the class label for each individual timestep and use the changepoints of these labels to detect events. However, these approaches may not effectively detect the precise onset and offset of events within the data and suffer from class imbalance problems. This study introduces a generalized regression-based approach to reframe the time-interval-defined event detection problem. Inspired by heatmap regression techniques from computer vision, our approach aims to predict probability densities at event locations rather than class labels across the entire time series. The primary aim of this approach is to improve the accuracy of event detection methods, particularly for long-duration events where identifying the onset and offset is more critical than classifying individual event states. We demonstrate that regression-based approaches outperform segmentation-based methods across various state-of-the-art baseline networks and datasets, offering a more effective solution for specific event detection tasks.
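The regression framing can be sketched roughly as follows: instead of a class label per timestep, each event interval is turned into smooth target curves that peak at its onset and offset, and events are later recovered by peak picking on the predicted curves. This is a minimal sketch under assumptions, not the paper's implementation: the Gaussian shape, the peak height of 1.0 (a heatmap-style target rather than a normalized density), `sigma`, the threshold, and the helper names `gaussian_targets` and `find_peaks` are all hypothetical choices made for illustration.

```python
import math
from typing import List, Tuple


def gaussian_targets(
    n_steps: int, events: List[Tuple[int, int]], sigma: float = 2.0
) -> Tuple[List[float], List[float]]:
    """Build per-timestep regression targets for event onsets and offsets.

    Each (onset, offset) pair contributes a Gaussian bump with peak 1.0
    centred on the boundary; overlapping bumps are merged with max().
    """
    onset = [0.0] * n_steps
    offset = [0.0] * n_steps
    for start, end in events:
        for t in range(n_steps):
            onset[t] = max(onset[t], math.exp(-0.5 * ((t - start) / sigma) ** 2))
            offset[t] = max(offset[t], math.exp(-0.5 * ((t - end) / sigma) ** 2))
    return onset, offset


def find_peaks(scores: List[float], threshold: float = 0.5) -> List[int]:
    """Return indices of local maxima whose value is at least `threshold`."""
    peaks = []
    for t, s in enumerate(scores):
        left = scores[t - 1] if t > 0 else float("-inf")
        right = scores[t + 1] if t + 1 < len(scores) else float("-inf")
        if s >= threshold and s > left and s >= right:
            peaks.append(t)
    return peaks


# Two hypothetical events in a 100-step series.
onset_target, offset_target = gaussian_targets(100, [(10, 30), (60, 80)])
print(find_peaks(onset_target), find_peaks(offset_target))
```

In a real pipeline a network would be trained to regress curves like `onset_target` and `offset_target`, and `find_peaks` would be applied to its predictions. Because only a few timesteps around each boundary carry signal, the target focuses the model on onset and offset localization instead of on labeling every timestep inside a long event.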
