Chun Yang Tan

Exploiting Frequency Spectrum of Adversarial Images for General Robustness

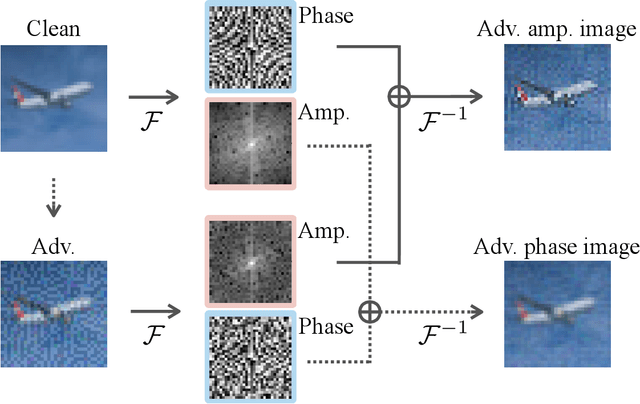

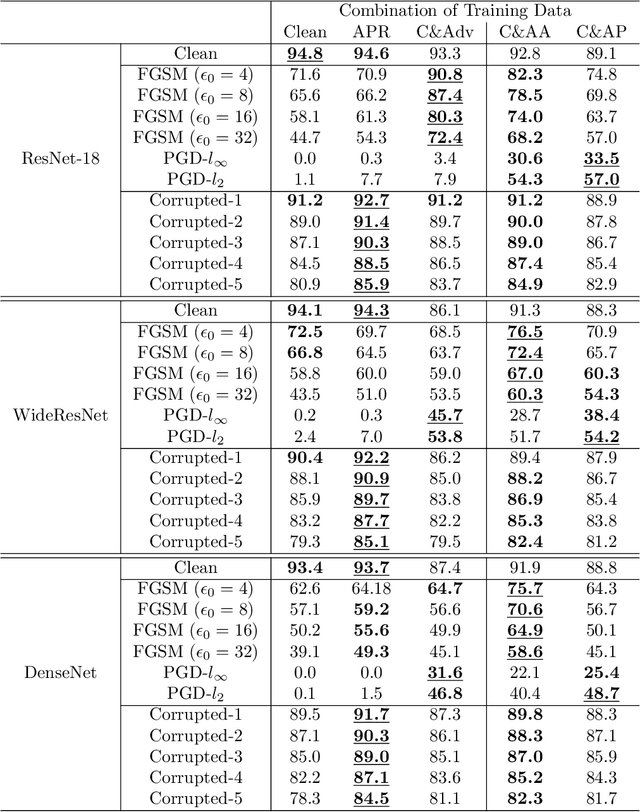

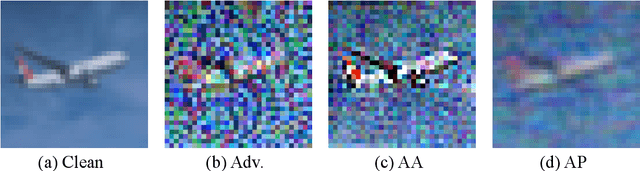

May 15, 2023

Abstract: In recent years, there has been growing concern over the vulnerability of convolutional neural networks (CNNs) to image perturbations. However, achieving general robustness against different types of perturbations remains challenging, because enhancing robustness to some perturbations (e.g., adversarial perturbations) may degrade robustness to others (e.g., common corruptions). In this paper, we demonstrate that adversarial training with an emphasis on phase components significantly improves clean, adversarial, and common-corruption accuracy. We propose a frequency-based data augmentation method, Adversarial Amplitude Swap, that swaps the amplitude spectrum between clean and adversarial images to generate two novel training images: adversarial amplitude and adversarial phase images. These images act as substitutes for adversarial images and can be used in various adversarial training setups. Through extensive experiments, we demonstrate that our method enables CNNs to gain general robustness against different types of perturbations and results in uniform performance across all types of common corruptions.
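The amplitude swap described in the abstract can be illustrated with a minimal NumPy sketch. This is not the authors' implementation: the function name `amplitude_swap`, the per-channel 2D FFT over the spatial axes, and the (H, W, C) array layout are assumptions made for illustration, and the adversarial image is assumed to come from some attack (for example PGD) that is not shown here. Following the naming in the abstract, the "adversarial amplitude" image is taken to combine the adversarial amplitude with the clean phase, and the "adversarial phase" image the clean amplitude with the adversarial phase.

```python
import numpy as np


def amplitude_swap(clean, adv):
    """Swap amplitude spectra between a clean and an adversarial image.

    Both inputs are float arrays of identical shape, (H, W) or (H, W, C).
    Returns (adv_amplitude_image, adv_phase_image). Hypothetical helper,
    written for illustration only.
    """
    # 2D FFT over the spatial axes; channels (if any) are transformed independently.
    f_clean = np.fft.fft2(clean, axes=(0, 1))
    f_adv = np.fft.fft2(adv, axes=(0, 1))

    # Decompose each spectrum into amplitude and phase.
    amp_clean, pha_clean = np.abs(f_clean), np.angle(f_clean)
    amp_adv, pha_adv = np.abs(f_adv), np.angle(f_adv)

    # Adversarial amplitude image: adversarial amplitude + clean phase.
    adv_amp_img = np.fft.ifft2(amp_adv * np.exp(1j * pha_clean), axes=(0, 1)).real
    # Adversarial phase image: clean amplitude + adversarial phase.
    adv_pha_img = np.fft.ifft2(amp_clean * np.exp(1j * pha_adv), axes=(0, 1)).real
    return adv_amp_img, adv_pha_img


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    clean = rng.random((32, 32, 3))
    # Stand-in for an adversarial example: a small bounded perturbation.
    adv = np.clip(clean + 0.03 * rng.standard_normal(clean.shape), 0.0, 1.0)
    adv_amp_img, adv_pha_img = amplitude_swap(clean, adv)
    print(adv_amp_img.shape, adv_pha_img.shape)
```

In a training loop, the two returned images would stand in for the adversarial image in the loss. Whether the outputs are clipped back to the valid pixel range before use is a design choice this sketch leaves open.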

Adversarial amplitude swap towards robust image classifiers

Apr 01, 2022

Abstract: The vulnerability of convolutional neural networks (CNNs) to image perturbations such as common corruptions and adversarial perturbations has recently been investigated from the perspective of frequency. In this study, we investigate the effect of the amplitude and phase spectra of adversarial images on the robustness of CNN classifiers. Extensive experiments revealed that images generated by combining the amplitude spectrum of adversarial images with the phase spectrum of clean images accommodate moderate and general perturbations, and that training with these images equips a CNN classifier with more general robustness, performing well under both common corruptions and adversarial perturbations. We also found that two types of overfitting (catastrophic overfitting and robust overfitting) can be circumvented by this spectrum recombination. We believe that these results contribute to the understanding and training of truly robust classifiers.
