Chuanchuan Wang

ARN-LSTM: A Multi-Stream Attention-Based Model for Action Recognition with Temporal Dynamics

Nov 04, 2024

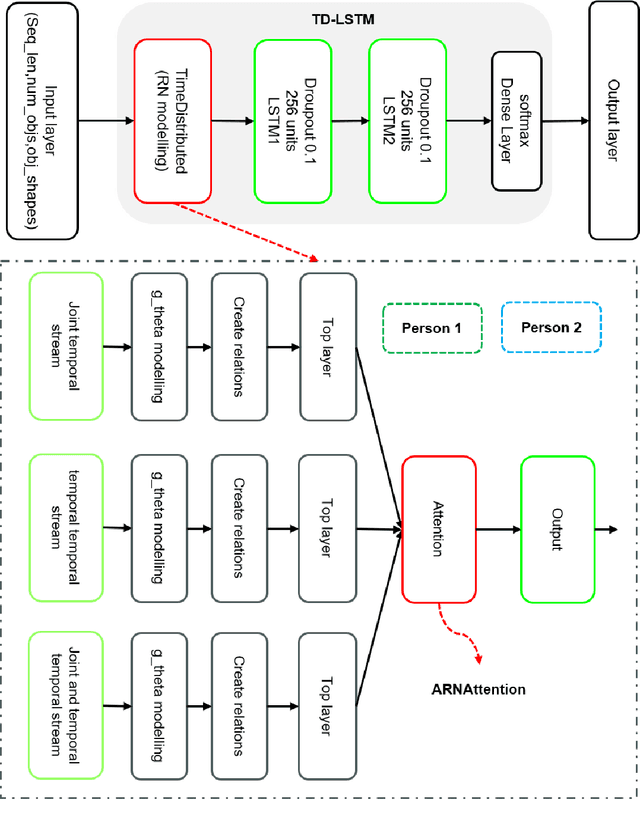

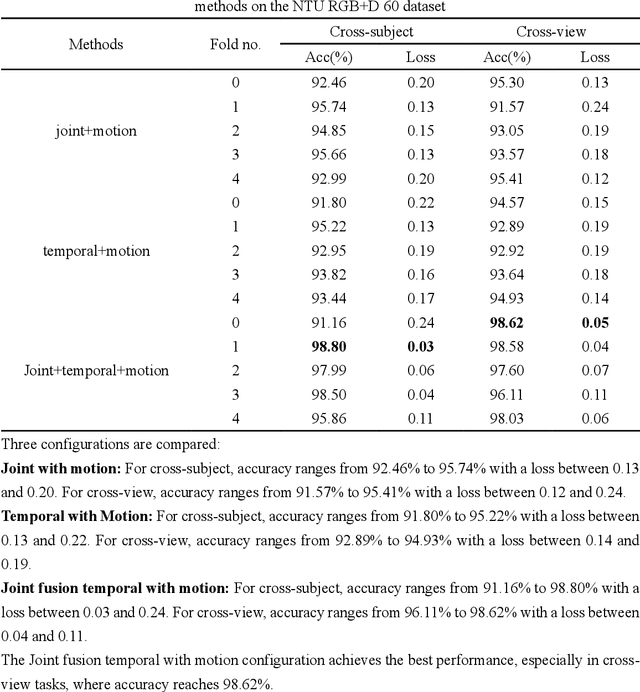

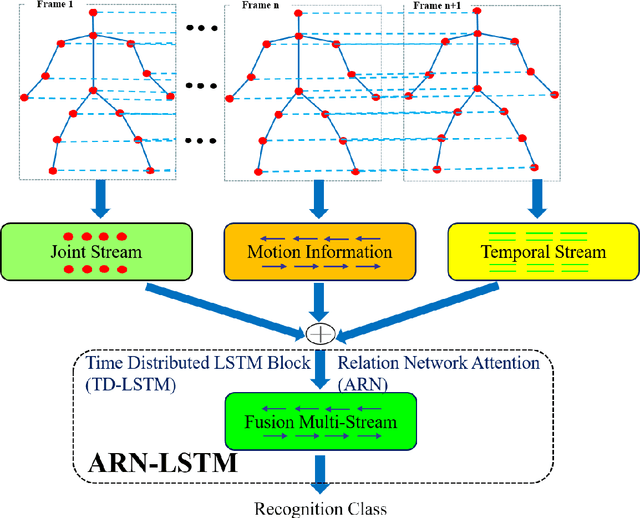

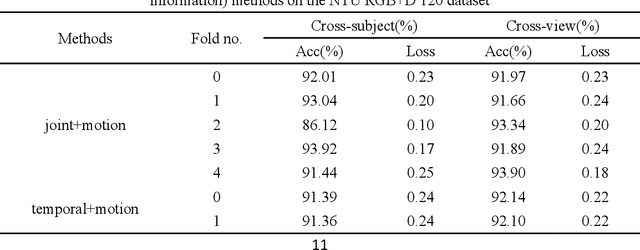

Abstract: This paper presents ARN-LSTM, a novel multi-stream action recognition model designed to address the challenge of simultaneously capturing spatial motion and temporal dynamics in action sequences. Traditional methods often focus solely on spatial or temporal features, which limits their ability to fully capture complex human activities. Our proposed model integrates joint, motion, and temporal information through a multi-stream fusion architecture. Specifically, it comprises a joint stream for extracting skeleton features, a temporal stream for capturing dynamic temporal features, and an ARN-LSTM block that uses Time-Distributed Long Short-Term Memory (TD-LSTM) layers followed by an Attention Relation Network (ARN) to model temporal relations. The outputs of these streams are fused in a fully connected layer to produce the final action prediction. Evaluations on the NTU RGB+D 60 and NTU RGB+D 120 datasets demonstrate the effectiveness of our model, particularly in group activity recognition.
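The fusion step described in the abstract can be illustrated with a minimal pure-Python sketch. It is not the authors' implementation: the function names (`attention_pool`, `fuse_streams`), the feature sizes, the random weights, and the mean-activation frame scoring are all assumptions made for illustration, and the TD-LSTM and ARN layers are replaced by a simple softmax-weighted average over frames.

```python
import math
import random


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention_pool(frame_features):
    """Collapse per-frame feature vectors into a single vector.

    Hypothetical stand-in for the ARN-LSTM block: each frame is scored by
    its mean activation (an assumption, not the paper's scoring function),
    and frames are averaged using the softmax of those scores.
    """
    scores = [sum(f) / len(f) for f in frame_features]
    weights = softmax(scores)
    dim = len(frame_features[0])
    return [sum(w * f[d] for w, f in zip(weights, frame_features))
            for d in range(dim)]


def fuse_streams(stream_features, weight, bias):
    """Concatenate the stream outputs and apply one fully connected layer."""
    fused = [x for feats in stream_features for x in feats]
    logits = [sum(w * x for w, x in zip(row, fused)) + b
              for row, b in zip(weight, bias)]
    return softmax(logits)


rng = random.Random(0)
# Illustrative sizes; 60 matches the number of classes in NTU RGB+D 60.
num_frames, feat_dim, num_classes = 8, 4, 60


def random_vector(n):
    return [rng.uniform(-1.0, 1.0) for _ in range(n)]


# Placeholder outputs of the three streams (random, for illustration only).
joint_feats = random_vector(feat_dim)
temporal_feats = random_vector(feat_dim)
relation_feats = attention_pool(
    [random_vector(feat_dim) for _ in range(num_frames)]
)

# Untrained fully connected layer over the concatenated features.
weight = [random_vector(3 * feat_dim) for _ in range(num_classes)]
bias = [0.0] * num_classes

probs = fuse_streams([joint_feats, temporal_feats, relation_feats], weight, bias)
predicted_class = max(range(num_classes), key=probs.__getitem__)
```

Concatenating the stream outputs before a single fully connected layer is one common reading of "fused in a fully connected layer"; the abstract does not say whether the streams are concatenated, summed, or weighted.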

Group Activity Recognition in Computer Vision: A Comprehensive Review, Challenges, and Future Perspectives

Jul 25, 2023

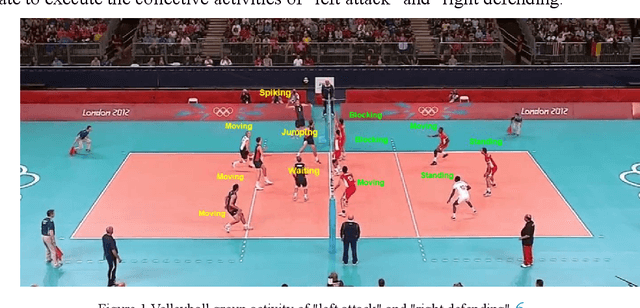

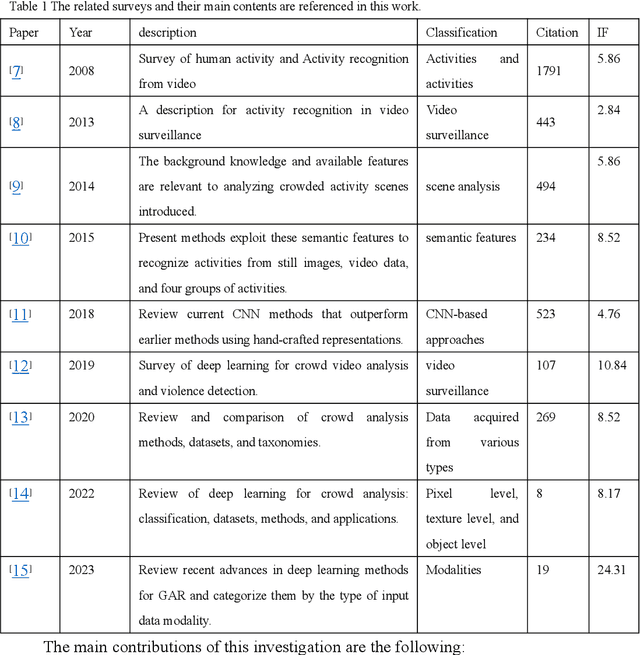

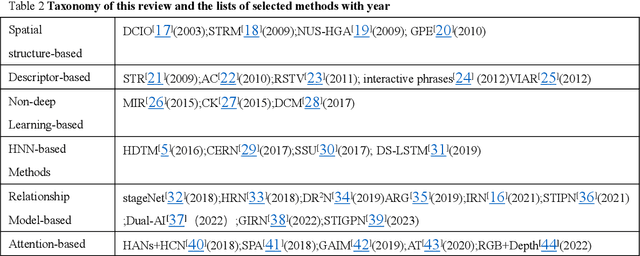

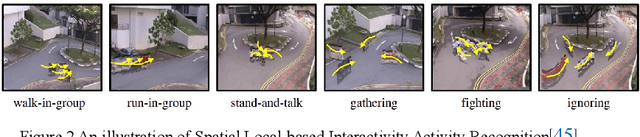

Abstract: Group activity recognition is a hot topic in computer vision. Recognizing activities through group relationships plays a vital role in this task, with practical implications for scenarios such as video analysis, surveillance, autonomous driving, and understanding social activities. A model's key capabilities encompass efficiently modeling hierarchical relationships within a scene and accurately extracting distinctive spatiotemporal features from groups. Given this technology's extensive applicability, identifying group activities has garnered significant research attention. This work examines the current progress in technology for recognizing group activities, with a specific focus on global interactivity and activities. First, we comprehensively review the pertinent literature and various group activity recognition approaches, from traditional methodologies to the latest methods based on spatial structure, descriptors, non-deep learning, hierarchical recurrent neural networks (HRNN), relationship models, and attention mechanisms. Second, we present the relational network and relational architectures for each module. Third, we investigate methods for recognizing group activity and compare their performance with state-of-the-art technologies. Finally, we summarize the existing challenges, provide comprehensive guidance for newcomers to group activity recognition, and review emerging perspectives to explore new directions and possibilities.
