Christopher Garay

Final Report on MITRE Evaluations for the DARPA Big Mechanism Program

Nov 08, 2022

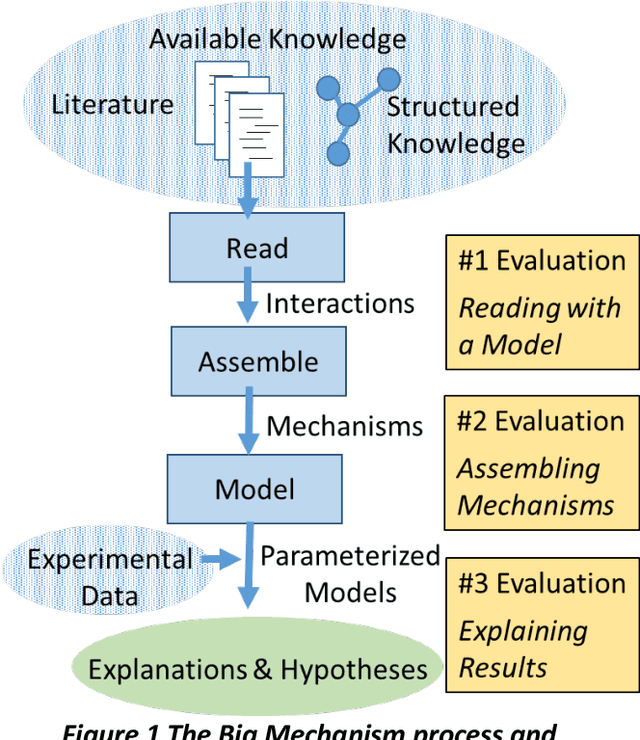

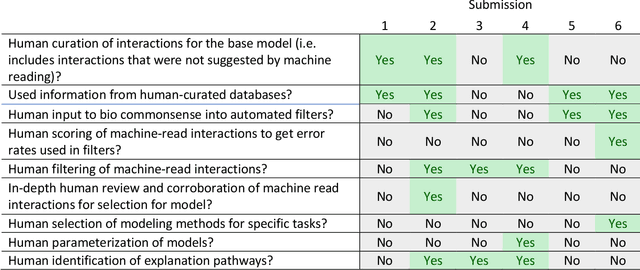

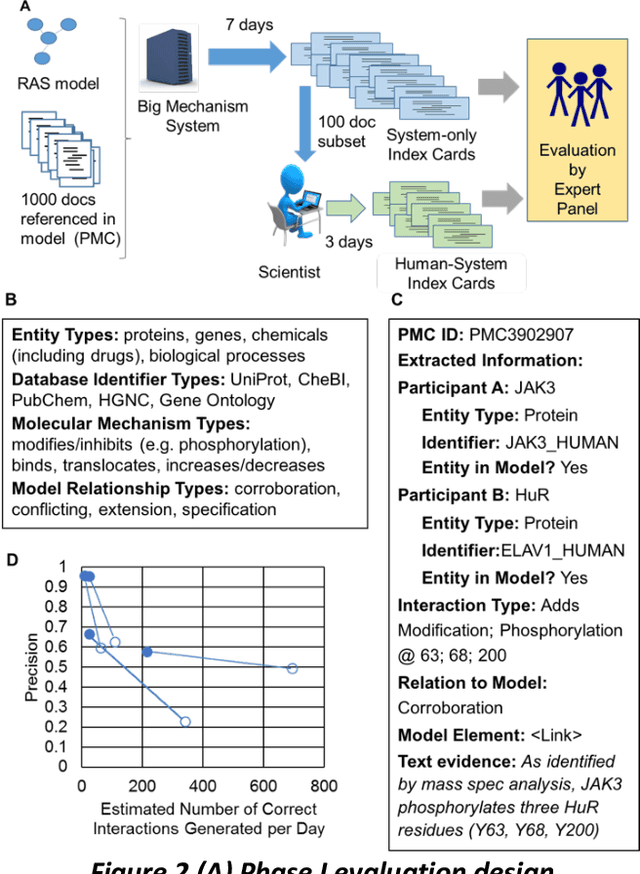

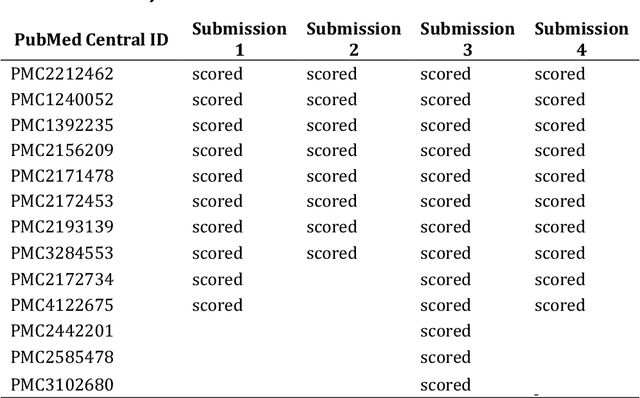

Abstract: This report presents the evaluation approach developed for the DARPA Big Mechanism program, which aimed to develop computer systems that read research papers, integrate the information into a computer model of cancer mechanisms, and frame new hypotheses. We employed an iterative, incremental approach to the evaluation of the three phases of the program. In Phase I, we evaluated the ability of system and human teams to read-with-a-model in order to capture mechanistic information from the biomedical literature, integrated with information from expert-curated biological databases. In Phase II, we evaluated the ability of systems to assemble fragments of information into a mechanistic model. The Phase III evaluation focused on the ability of systems to provide explanations of experimental observations based on models assembled (largely automatically) by the Big Mechanism process. The evaluation for each phase built on earlier evaluations and guided developers towards creating capabilities for the new phase. The report describes our approach, including innovations such as a reference set (a curated data set limited to the major findings of each paper) to assess the accuracy of systems in extracting mechanistic findings in the absence of a gold standard, and a method to evaluate model-based explanations of experimental data. Results of the evaluation and supporting materials are included in the appendices.

Hallmarks of Human-Machine Collaboration: A framework for assessment in the DARPA Communicating with Computers Program

Feb 09, 2021

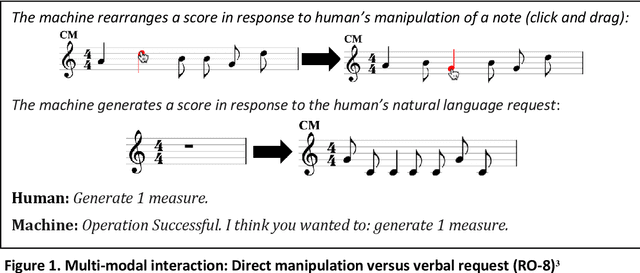

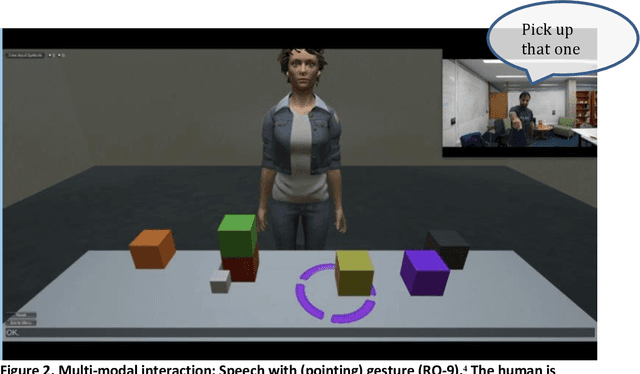

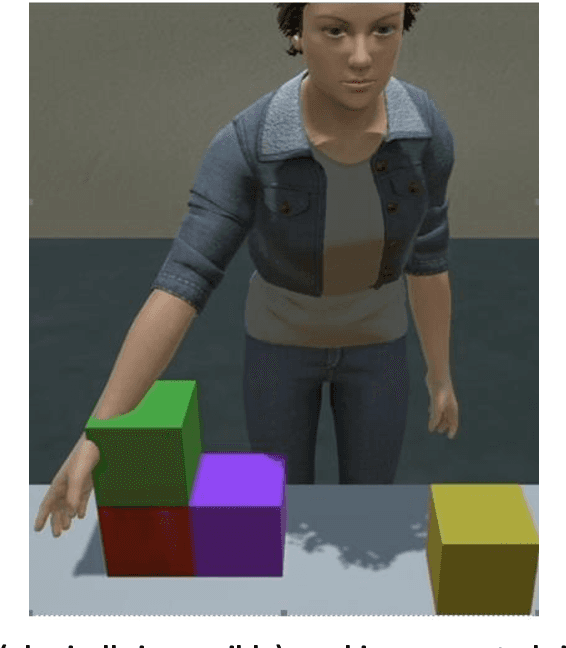

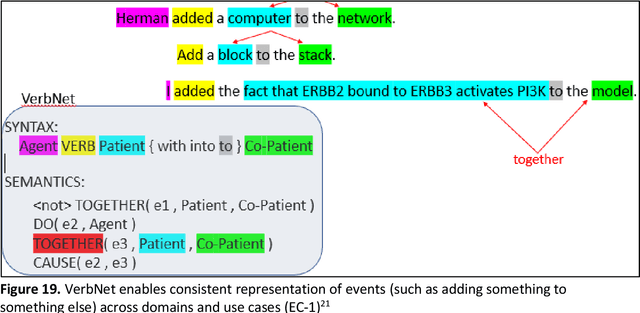

Abstract: There is a growing desire to create computer systems that can communicate effectively to collaborate with humans on complex, open-ended activities. Assessing these systems presents significant challenges. We describe a framework for evaluating systems engaged in open-ended, complex scenarios where evaluators do not have the luxury of comparing performance to a single right answer. This framework has been used to evaluate human-machine creative collaborations across story and music generation, interactive block building, and exploration of molecular mechanisms in cancer. These activities are fundamentally different from the more constrained tasks performed by most contemporary personal assistants: they are generally open-ended, with no single correct solution and often no obvious completion criteria. We identified the Key Properties that successful systems must exhibit. From there, we identified "Hallmarks" of success -- capabilities and features that evaluators can observe and that indicate progress toward achieving a Key Property. In addition to being a framework for assessment, the Key Properties and Hallmarks are intended to serve as goals that guide research direction.
