Christopher Briggs

Federated Learning for Short-term Residential Energy Demand Forecasting

May 27, 2021

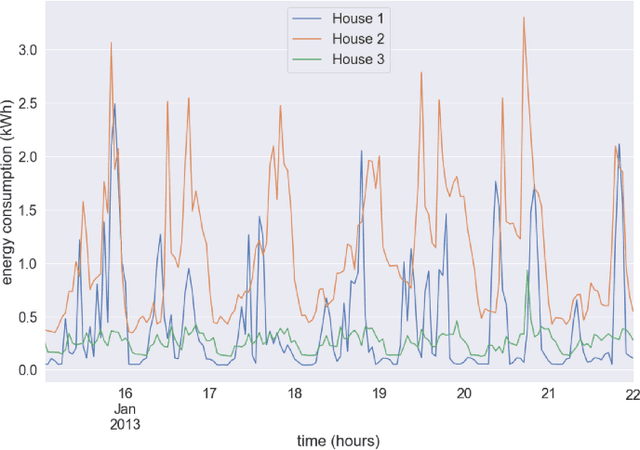

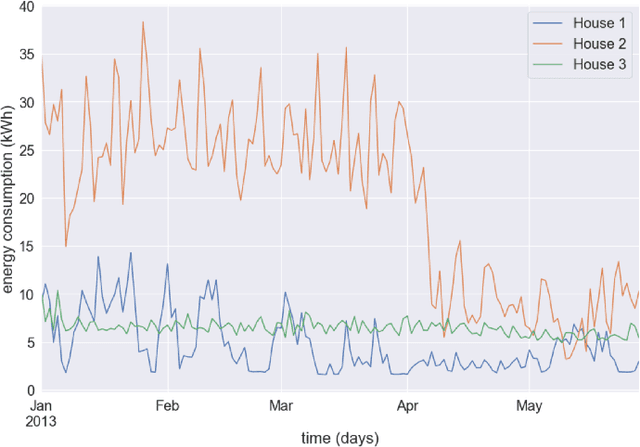

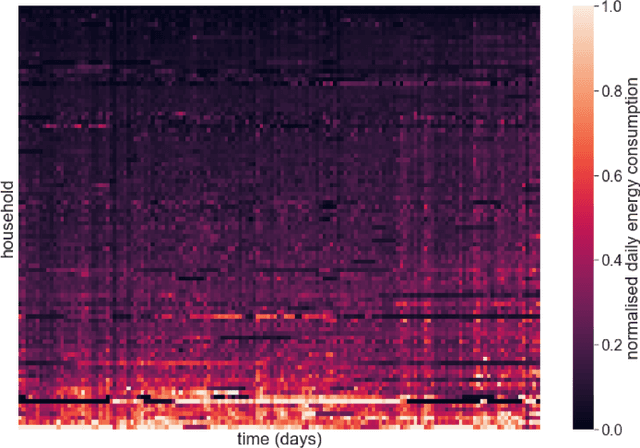

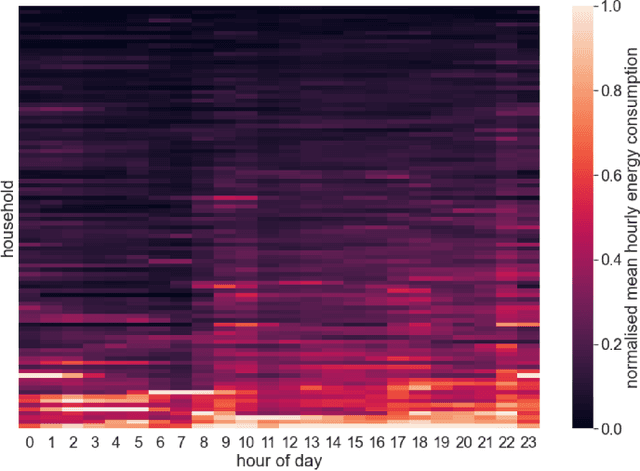

Abstract: Energy demand forecasting is an essential task performed within the energy industry to help balance supply with demand and maintain a stable load on the electricity grid. As supply transitions towards less reliable renewable energy generation, smart meters will prove a vital component to aid these forecasting tasks. However, smart meter take-up is low among privacy-conscious consumers who fear intrusion upon their fine-grained consumption data. In this work we propose and explore a federated learning (FL) based approach for training forecasting models in a distributed, collaborative manner whilst retaining the privacy of the underlying data. We compare two approaches, FL and a clustered variant, FL+HC, against a non-private, centralised learning approach and a fully private, localised learning approach. Within these approaches, we measure model performance using RMSE and computational efficiency via the number of samples required to train models under each scenario. In addition, we suggest that the FL strategies be followed by a personalisation step and show that model performance can be improved by doing so. We show that FL+HC followed by personalisation can achieve a $\sim$5% improvement in model performance with a $\sim$10x reduction in computation compared to localised learning. Finally, we provide advice on private aggregation of predictions for building a private end-to-end energy demand forecasting application.
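As a rough illustration of two ingredients named in this abstract, the sketch below computes RMSE and performs one sample-size-weighted averaging step over client parameter vectors. The abstract does not state which aggregation rule is used, so the FedAvg-style weighting here is an assumption, and the function names `rmse` and `federated_average` are hypothetical.

```python
import math


def rmse(actual, predicted):
    """Root mean squared error between two equal-length sequences."""
    if len(actual) != len(predicted) or len(actual) == 0:
        raise ValueError("inputs must be non-empty and of equal length")
    squared_error = sum((a - p) ** 2 for a, p in zip(actual, predicted))
    return math.sqrt(squared_error / len(actual))


def federated_average(client_weights, client_sizes):
    """Average client parameter vectors, weighting each by its sample count.

    Assumption: FedAvg-style weighting; the abstract does not specify
    the aggregation rule.
    """
    total = sum(client_sizes)
    dim = len(client_weights[0])
    return [
        sum(w[i] * n for w, n in zip(client_weights, client_sizes)) / total
        for i in range(dim)
    ]


if __name__ == "__main__":
    # Two hypothetical households: the second holds three times as much data.
    global_weights = federated_average([[1.0, 2.0], [3.0, 4.0]], [1, 3])
    print(global_weights)
    print(rmse([0.0, 0.0], [3.0, 4.0]))
```

Only the parameter vectors and sample counts leave each client in this sketch; the raw consumption readings stay local, which is the property the abstract relies on.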

Privacy Preserving Demand Forecasting to Encourage Consumer Acceptance of Smart Energy Meters

Dec 14, 2020

Abstract: In this proposal paper we highlight the need for privacy preserving energy demand forecasting to allay a major concern consumers have about smart meter installations. High-resolution smart meter data can expose many private aspects of a consumer's household such as occupancy, habits and individual appliance usage. Yet smart metering infrastructure has the potential to vastly reduce carbon emissions from the energy sector through improved operating efficiencies. We propose the application of a distributed machine learning setting known as federated learning for energy demand forecasting at various scales to make load prediction possible whilst retaining the privacy of consumers' raw energy consumption data.

Federated learning with hierarchical clustering of local updates to improve training on non-IID data

May 06, 2020

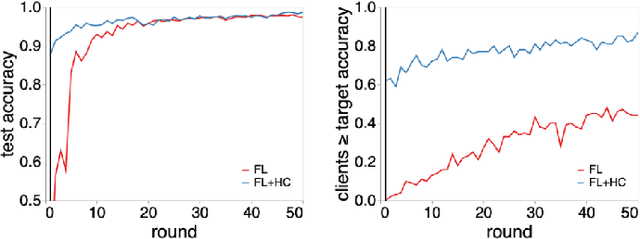

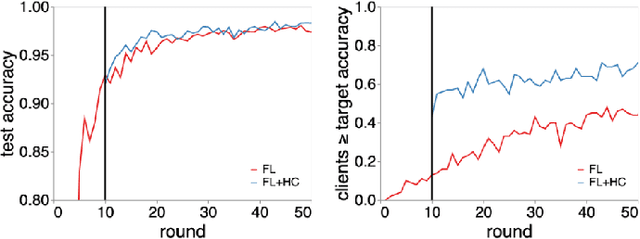

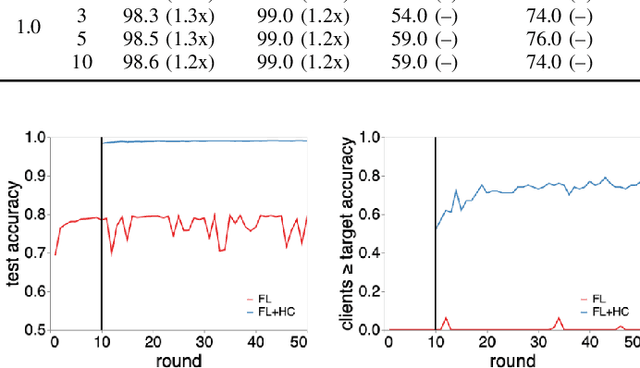

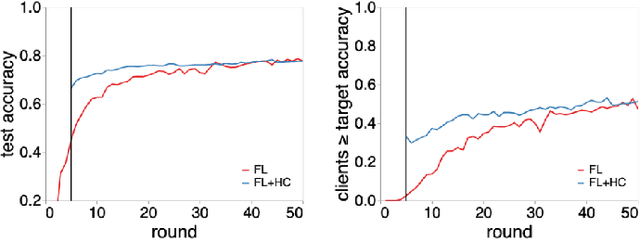

Abstract: Federated learning (FL) is a well-established method for performing machine learning tasks over massively distributed data. However, in settings where data is distributed in a non-iid (not independent and identically distributed) fashion -- as is typical in real world situations -- the joint model produced by FL suffers in terms of test set accuracy and/or communication costs compared to training on iid data. We show that learning a single joint model is often not optimal in the presence of certain types of non-iid data. In this work we present a modification to FL by introducing a hierarchical clustering step (FL+HC) to separate clusters of clients by the similarity of their local updates to the global joint model. Once separated, the clusters are trained independently and in parallel on specialised models. We present a robust empirical analysis of the hyperparameters for FL+HC for several iid and non-iid settings. We show how FL+HC allows model training to converge in fewer communication rounds (significantly so under some non-iid settings) compared to FL without clustering. Additionally, FL+HC allows for a greater percentage of clients to reach a target accuracy compared to standard FL. Finally, we make suggestions for good default hyperparameters to promote superior performing specialised models without modifying the underlying federated learning communication protocol.
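The clustering step described in this abstract can be sketched as a small agglomerative procedure over client update vectors. This is a minimal illustration and not the paper's implementation: the Euclidean distance, single linkage, and the `threshold` stopping rule are assumptions chosen for brevity (the abstract treats such choices as hyperparameters), and `cluster_updates` is a hypothetical name.

```python
import math


def euclidean(u, v):
    """Euclidean distance between two equal-length vectors."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(u, v)))


def cluster_updates(updates, threshold):
    """Group clients whose local updates are similar.

    Starts with one cluster per client and repeatedly merges the two
    closest clusters (single linkage) until the closest pair is further
    apart than `threshold`. Returns lists of client indices.
    """
    clusters = [[i] for i in range(len(updates))]

    def linkage(c1, c2):
        # Single linkage: distance between the closest pair of members.
        return min(euclidean(updates[i], updates[j]) for i in c1 for j in c2)

    while len(clusters) > 1:
        best = None
        for a in range(len(clusters)):
            for b in range(a + 1, len(clusters)):
                d = linkage(clusters[a], clusters[b])
                if best is None or d < best[0]:
                    best = (d, a, b)
        d, a, b = best
        if d > threshold:
            break  # remaining clusters are too dissimilar to merge
        clusters[a] = clusters[a] + clusters[b]
        del clusters[b]  # safe because b > a
    return [sorted(c) for c in clusters]


if __name__ == "__main__":
    # Four hypothetical clients: two with small updates, two with large ones.
    updates = [[0.0, 0.1], [0.1, 0.0], [5.0, 5.1], [5.1, 5.0]]
    print(cluster_updates(updates, threshold=1.0))
```

Each resulting cluster would then continue federated training on its own specialised model, independently of the others, as the abstract describes.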

A Review of Privacy Preserving Federated Learning for Private IoT Analytics

Apr 24, 2020

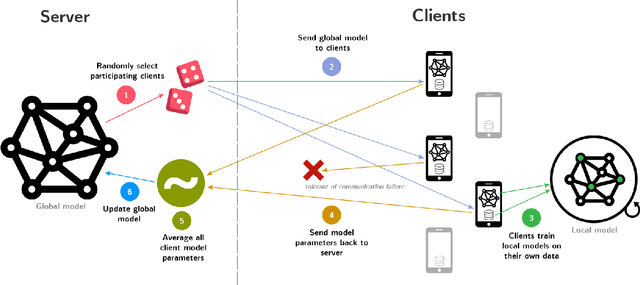

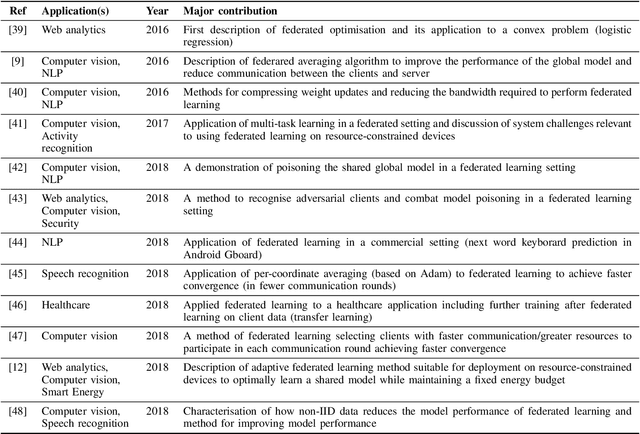

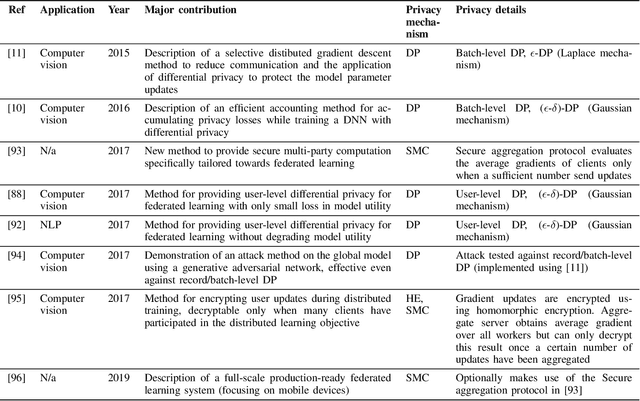

Abstract: The Internet-of-Things generates vast quantities of data, much of it attributable to an individual's activity and behaviour. Holding and processing such personal data in a central location presents a significant privacy risk to individuals (of being identified or of their sensitive data being leaked). However, analytics based on machine learning and in particular deep learning benefit greatly from large amounts of data to develop high performance predictive models. Traditionally, data and models are stored and processed in a data centre environment where models are trained in a single location. This work reviews research around an alternative approach to machine learning known as federated learning which seeks to train machine learning models in a distributed fashion on devices in the user's domain, rather than by a centralised entity. Furthermore, we review additional privacy preserving methods applied to federated learning used to protect individuals from being identified during training and once a model is trained. Throughout this review, we identify the strengths and weaknesses of different methods applied to federated learning and finally, we outline future directions for privacy preserving federated learning research, particularly focusing on Internet-of-Things applications.
