Christoph S. Garbe

An Optimal Experimental Design Approach for Light Configurations in Photometric Stereo

Apr 11, 2022

Abstract: This paper presents a technique for finding the surface normal of an object from a set of images obtained under different lighting positions. The method is based on the principles of Photometric Stereo (PS) combined with Optimum Experimental Design (OED) and Parameter Estimation (PE). Photometric stereo, and the many models based on it, leave open how the light sources should be positioned. So far, this has been done with heuristic approaches, which leads to suboptimal, non-data-driven positioning of the light sources. But what if the optimal positions of the light sources are calculated for photometric stereo? To this end, this contribution evaluates the effect of light-source positioning on the quality of the normal vector estimated by PS. Furthermore, a new approach in this direction is derived and formulated. The calculation of the surface normal of a Lambertian surface is based on calibrated photometric stereo; the estimation of the optimal light-source positions is based on parameter estimation and optimum experimental design. The approach is tested on synthetic and real data. The results show that the surface normal estimated with the new method is more detailed than with conventional methods.
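As a rough illustration of the two ingredients named in this abstract, the sketch below estimates a Lambertian normal by least squares from known light directions and scores a light configuration with a D-optimality value, det(LᵀL). This is a minimal sketch and not the authors' implementation: the abstract does not say which OED criterion is used, so D-optimality is an assumption here, shadows and noise are ignored, and the function names are hypothetical. Plain Python is used to keep the example free of dependencies.

```python
import math


def normal_matrix(lights):
    """Return the 3x3 matrix L^T L for a list of light-direction 3-vectors."""
    return [[sum(l[i] * l[j] for l in lights) for j in range(3)]
            for i in range(3)]


def det3(a):
    """Determinant of a 3x3 matrix given as nested lists."""
    return (a[0][0] * (a[1][1] * a[2][2] - a[1][2] * a[2][1])
            - a[0][1] * (a[1][0] * a[2][2] - a[1][2] * a[2][0])
            + a[0][2] * (a[1][0] * a[2][1] - a[1][1] * a[2][0]))


def solve3(a, b):
    """Solve the 3x3 system a x = b with Cramer's rule."""
    d = det3(a)
    if abs(d) < 1e-12:
        raise ValueError("light directions are (nearly) coplanar")
    solution = []
    for col in range(3):
        # Replace column `col` of a with b and take the determinant ratio.
        m = [[b[r] if c == col else a[r][c] for c in range(3)]
             for r in range(3)]
        solution.append(det3(m) / d)
    return solution


def estimate_normal(lights, intensities):
    """Least-squares Lambertian fit: intensities ~ albedo * (L @ normal)."""
    ltl = normal_matrix(lights)
    lti = [sum(l[i] * y for l, y in zip(lights, intensities))
           for i in range(3)]
    g = solve3(ltl, lti)                      # g = albedo * normal
    albedo = math.sqrt(sum(v * v for v in g))
    return [v / albedo for v in g], albedo


def d_criterion(lights):
    """Assumed design score: det(L^T L); larger means a better-conditioned fit."""
    return det3(normal_matrix(lights))


# Hypothetical example data: four lights, one pixel facing the camera.
spread = [(0.0, 0.0, 1.0), (0.6, 0.0, 0.8), (0.0, 0.6, 0.8), (-0.6, 0.0, 0.8)]
clustered = [(0.0, 0.0, 1.0), (0.1, 0.0, 0.995), (0.0, 0.1, 0.995),
             (-0.1, 0.0, 0.995)]
normal, albedo = estimate_normal(spread, [0.5, 0.4, 0.4, 0.4])
```

Under this assumed criterion, the widely spread configuration scores higher than the clustered one, which matches the intuition that nearly parallel lights constrain the normal poorly.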

Automated Multiscale 3D Feature Learning for Vessels Segmentation in Thorax CT Images

Jan 06, 2019

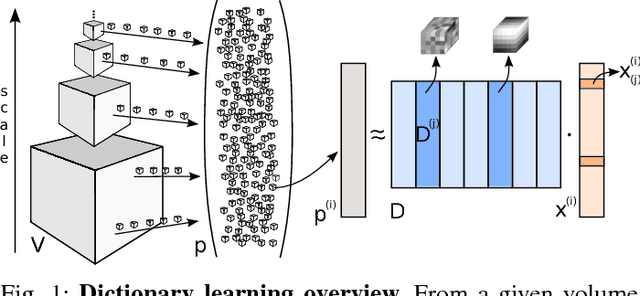

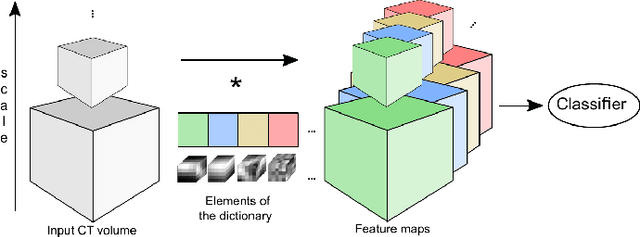

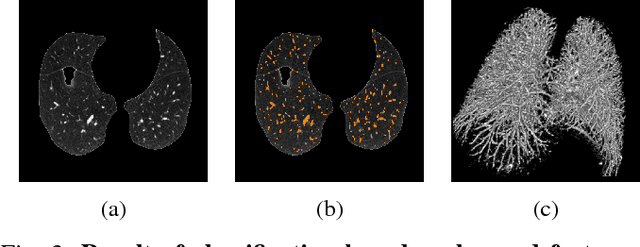

Abstract: We address the vessel segmentation problem by building upon the multiscale feature learning method of Kiros et al., which achieves the current top score in the VESSEL12 MICCAI challenge. Following their idea of feature learning instead of hand-crafted filters, we have extended the method to learn 3D features. The features are learned in an unsupervised manner in a multi-scale scheme using dictionary learning via least angle regression. The 3D feature kernels are then convolved with the input volumes to create feature maps. Those maps are used to train a supervised classifier with the annotated voxels. To process the 3D data with a large number of filters, a parallel implementation has been developed. The algorithm has been applied to the example scans and annotations provided by the VESSEL12 challenge. We have compared our setup with Kiros et al. by running their implementation. Our current results show an improvement in accuracy over the slice-wise method from 96.66$\pm$1.10% to 97.24$\pm$0.90%.
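The feature-map step described in this abstract can be pictured with a small sketch: each learned 3D kernel is convolved with the input volume, producing one feature map per kernel. The code below is only an illustration under simplifying assumptions (nested lists instead of arrays, "valid" borders, a single scale, no parallelism); the function names are hypothetical and the kernel shown is a placeholder, not a learned dictionary atom.

```python
def convolve3d_valid(volume, kernel):
    """'Valid' 3D convolution of a nested-list volume with a nested-list kernel."""
    vz, vy, vx = len(volume), len(volume[0]), len(volume[0][0])
    kz, ky, kx = len(kernel), len(kernel[0]), len(kernel[0][0])
    out = []
    for z in range(vz - kz + 1):
        plane = []
        for y in range(vy - ky + 1):
            row = []
            for x in range(vx - kx + 1):
                acc = 0.0
                for dz in range(kz):
                    for dy in range(ky):
                        for dx in range(kx):
                            # The kernel is flipped along every axis,
                            # which makes this a true convolution.
                            acc += (volume[z + dz][y + dy][x + dx]
                                    * kernel[kz - 1 - dz][ky - 1 - dy][kx - 1 - dx])
                row.append(acc)
            plane.append(row)
        out.append(plane)
    return out


def feature_maps(volume, kernels):
    """Return one feature map per kernel (kernels are placeholders here)."""
    return [convolve3d_valid(volume, k) for k in kernels]


# Hypothetical toy input: a 1x1x2 volume and an asymmetric 1x1x2 kernel.
toy_volume = [[[1.0, 2.0]]]
toy_kernel = [[[1.0, 0.0]]]
maps = feature_maps(toy_volume, [toy_kernel])
```

In practice an array library with an optimized or parallel convolution would replace the nested loops; the loops are written out here only to make the operation explicit.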

Fully Convolutional Deep Network Architectures for Automatic Short Glass Fiber Semantic Segmentation from CT scans

Jan 04, 2019

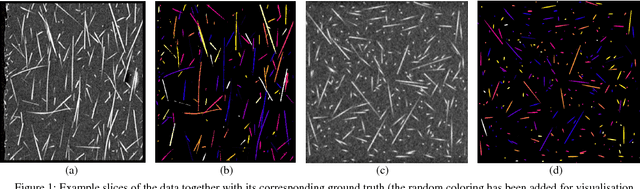

Abstract: We present the first attempt to perform short glass fiber semantic segmentation from X-ray computed tomography volumetric datasets at medium (3.9 $\mu$m isotropic) and low (8.3 $\mu$m isotropic) resolution using deep learning architectures. We performed experiments on both synthetic and real CT scans and evaluated deep fully convolutional architectures with both 2D and 3D kernels. Our artificial neural networks outperform existing methods on both medium- and low-resolution scans.

Reference Setup for Quantitative Comparison of Segmentation Techniques for Short Glass Fiber CT Data

Jan 04, 2019

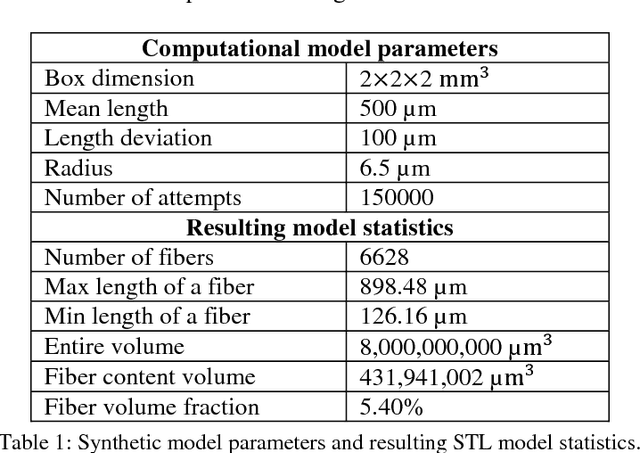

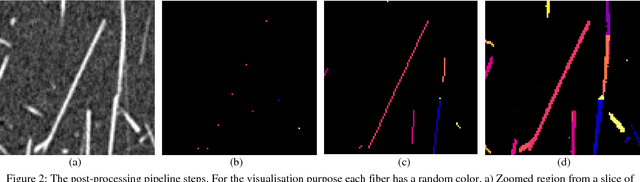

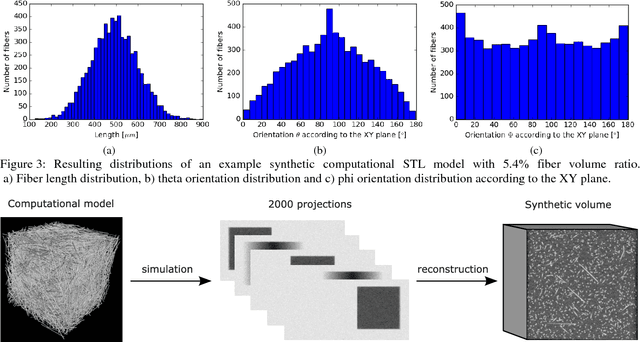

Abstract: Comparing different algorithms for segmenting glass fibers in industrial computed tomography (CT) scans is difficult due to the absence of a standard reference dataset. In this work, we introduce a set of annotated scans of short-fiber reinforced polymers (SFRP) as well as synthetically created CT volume data, together with evaluation metrics. We suggest both the metrics and this dataset as a reference for studying the performance of different algorithms. The real scans were acquired with a Nikon MCT225 X-ray CT system. The simulated scans were created using an in-house computational model and third-party commercial software. For both types of data, corresponding ground truth annotations have been prepared, including hand annotations for the real scans and STL models for the synthetic scans. Additionally, a Hessian-based Frangi vesselness filter for fiber segmentation has been implemented and open-sourced to serve as a reference for comparisons.
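For readers unfamiliar with the Frangi vesselness measure mentioned at the end of this abstract, the sketch below evaluates the standard 3D Frangi response for bright tubular structures from the three Hessian eigenvalues at one voxel. It is not the open-sourced reference implementation: computing the Hessian and its eigenvalues across scales is omitted, the function name is hypothetical, and the parameter values (`alpha`, `beta`, `c`) are assumed defaults rather than values taken from the paper.

```python
import math


def frangi_vesselness(eigenvalues, alpha=0.5, beta=0.5, c=0.5):
    """Frangi response for bright tubes from three Hessian eigenvalues."""
    # Order by magnitude so that |l1| <= |l2| <= |l3|.
    l1, l2, l3 = sorted(eigenvalues, key=abs)
    # A bright tube needs two strongly negative eigenvalues; zero is
    # rejected as well here to avoid a division by zero below.
    if l2 >= 0 or l3 >= 0:
        return 0.0
    r_a = abs(l2) / abs(l3)                    # plate vs. line
    r_b = abs(l1) / math.sqrt(abs(l2 * l3))    # blob vs. line
    s = math.sqrt(l1 * l1 + l2 * l2 + l3 * l3)  # second-order structureness
    return ((1.0 - math.exp(-r_a ** 2 / (2.0 * alpha ** 2)))
            * math.exp(-r_b ** 2 / (2.0 * beta ** 2))
            * (1.0 - math.exp(-s ** 2 / (2.0 * c ** 2))))


# Hypothetical eigenvalue triples for a tube-like and a blob-like voxel.
tube = frangi_vesselness((0.0, -1.0, -1.0))
blob = frangi_vesselness((-1.0, -1.0, -1.0))
dark = frangi_vesselness((0.0, 1.0, 1.0))
```

A fiber-like voxel (one near-zero and two large negative eigenvalues) scores much higher than a blob-like one, and voxels with the wrong sign are suppressed entirely.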
