Ching-Te Chiu

PGT-Net: Progressive Guided Multi-task Neural Network for Small-area Wet Fingerprint Denoising and Recognition

Aug 14, 2023

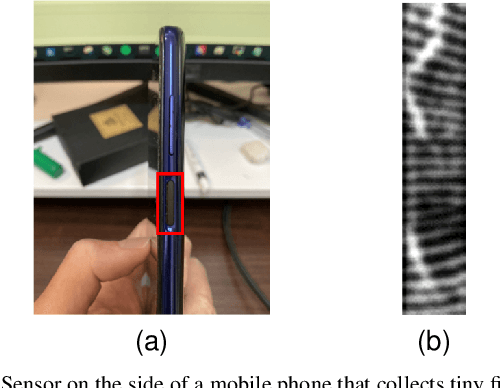

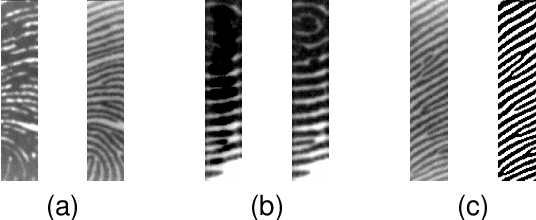

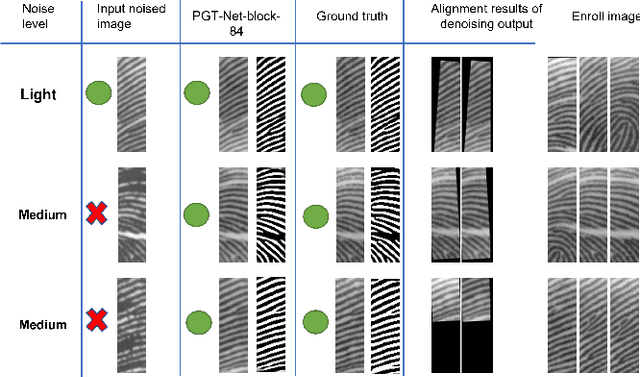

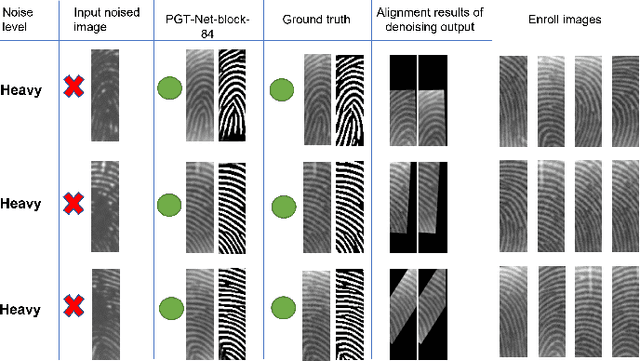

Abstract:Fingerprint recognition on mobile devices is an important method for identity verification. However, real fingerprints usually contain sweat and moisture which leads to poor recognition performance. In addition, for rolling out slimmer and thinner phones, technology companies reduce the size of recognition sensors by embedding them with the power button. Therefore, the limited size of fingerprint data also increases the difficulty of recognition. Denoising the small-area wet fingerprint images to clean ones becomes crucial to improve recognition performance. In this paper, we propose an end-to-end trainable progressive guided multi-task neural network (PGT-Net). The PGT-Net includes a shared stage and specific multi-task stages, enabling the network to train binary and non-binary fingerprints sequentially. The binary information is regarded as guidance for output enhancement which is enriched with the ridge and valley details. Moreover, a novel residual scaling mechanism is introduced to stabilize the training process. Experiment results on the FW9395 and FT-lightnoised dataset provided by FocalTech shows that PGT-Net has promising performance on the wet-fingerprint denoising and significantly improves the fingerprint recognition rate (FRR). On the FT-lightnoised dataset, the FRR of fingerprint recognition can be declined from 17.75% to 4.47%. On the FW9395 dataset, the FRR of fingerprint recognition can be declined from 9.45% to 1.09%.

EfficientNet-eLite: Extremely Lightweight and Efficient CNN Models for Edge Devices by Network Candidate Search

Sep 16, 2020

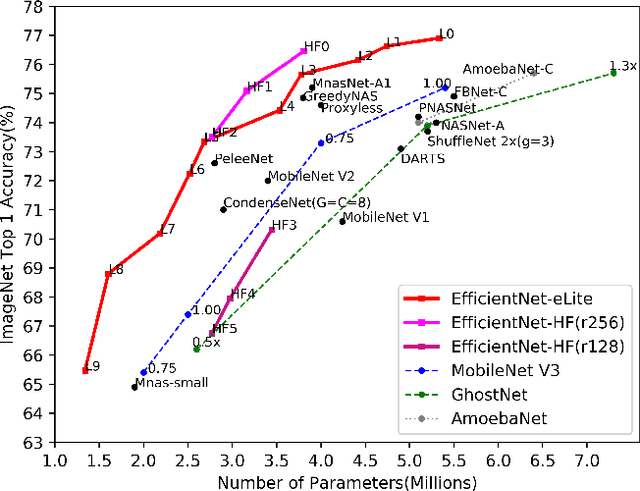

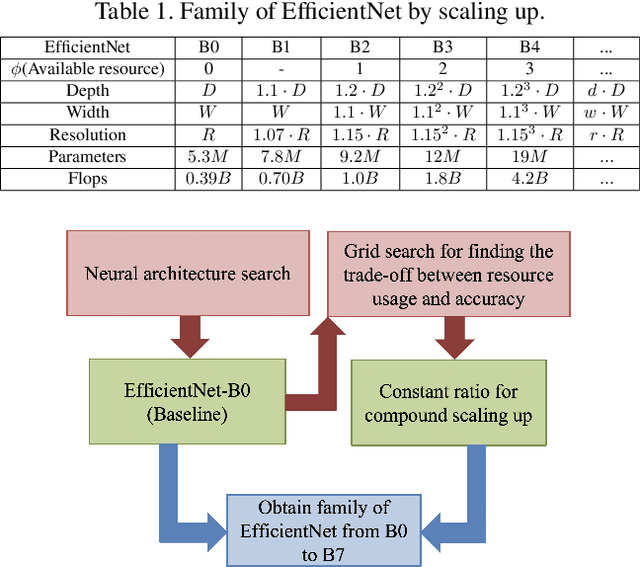

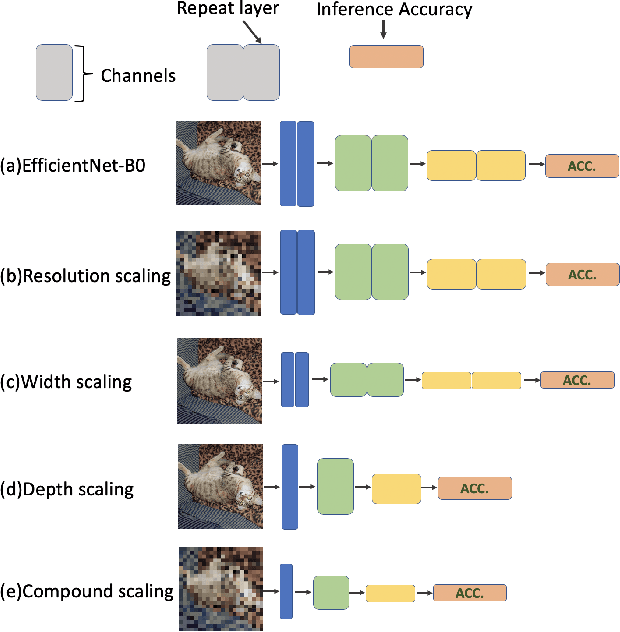

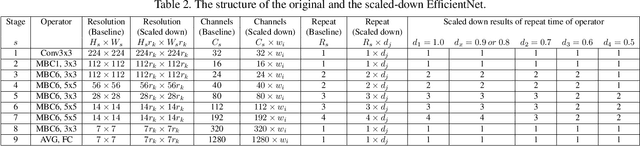

Abstract:Embedding Convolutional Neural Network (CNN) into edge devices for inference is a very challenging task because such lightweight hardware is not born to handle this heavyweight software, which is the common overhead from the modern state-of-the-art CNN models. In this paper, targeting at reducing the overhead with trading the accuracy as less as possible, we propose a novel of Network Candidate Search (NCS), an alternative way to study the trade-off between the resource usage and the performance through grouping concepts and elimination tournament. Besides, NCS can also be generalized across any neural network. In our experiment, we collect candidate CNN models from EfficientNet-B0 to be scaled down in varied way through width, depth, input resolution and compound scaling down, applying NCS to research the scaling-down trade-off. Meanwhile, a family of extremely lightweight EfficientNet is obtained, called EfficientNet-eLite. For further embracing the CNN edge application with Application-Specific Integrated Circuit (ASIC), we adjust the architectures of EfficientNet-eLite to build the more hardware-friendly version, EfficientNet-HF. Evaluation on ImageNet dataset, both proposed EfficientNet-eLite and EfficientNet-HF present better parameter usage and accuracy than the previous start-of-the-art CNNs. Particularly, the smallest member of EfficientNet-eLite is more lightweight than the best and smallest existing MnasNet with 1.46x less parameters and 0.56% higher accuracy. Code is available at https://github.com/Ching-Chen-Wang/EfficientNet-eLite

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge