Ching-Hsuan Chen

CoachAI: A Project for Microscopic Badminton Match Data Collection and Tactical Analysis

Jul 12, 2019

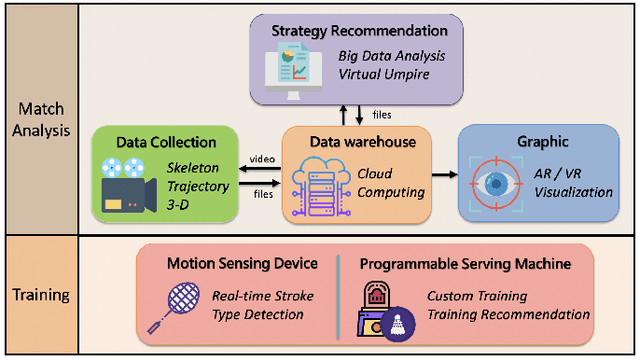

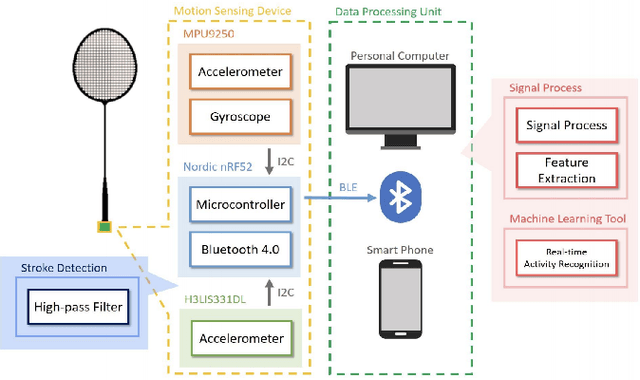

Abstract: Computer vision-based object tracking has been used to annotate and augment sports video. In sports learning and training, video replay is often used in post-match and training reviews for tactical and movement analysis. To collect competition data and analyze tactics automatically and systematically, a project called CoachAI has been supported by the Ministry of Science and Technology, Taiwan. The project also includes research on data visualization, connected training auxiliary devices, and a data warehouse. Deep learning techniques will be used to develop video-based, real-time microscopic competition data collection from broadcast competition video. Machine learning techniques will be used to develop tactical analysis. To present data in more understandable forms and to help in pre-match training, AR/VR techniques will be used to visualize data, tactics, and so on. In addition, training auxiliary devices, including smart badminton rackets and connected serving machines, will be developed based on IoT technology to further utilize competition and tactical data and boost training efficiency. In particular, the connected serving machines will be developed to perform specified tactics and to interact with players during training.

TrackNet: A Deep Learning Network for Tracking High-speed and Tiny Objects in Sports Applications

Jul 08, 2019

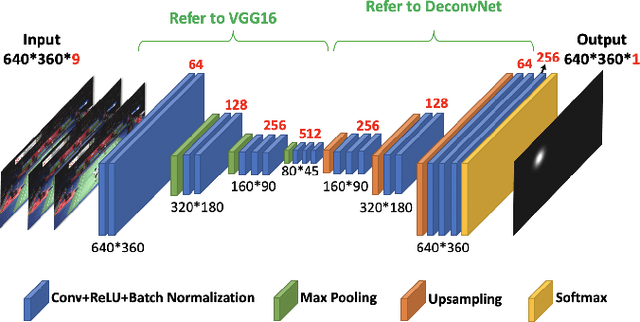

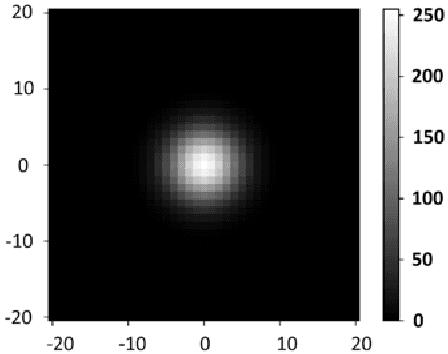

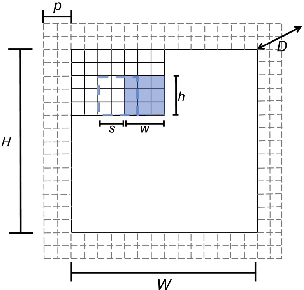

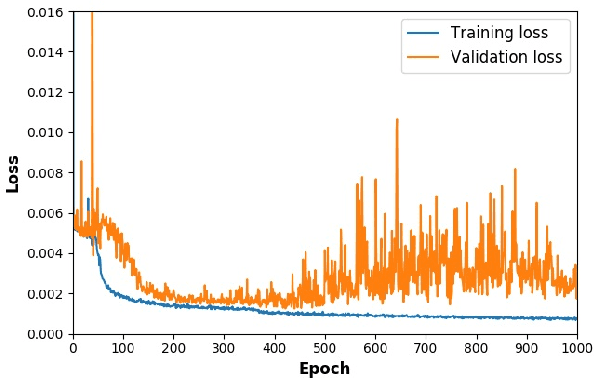

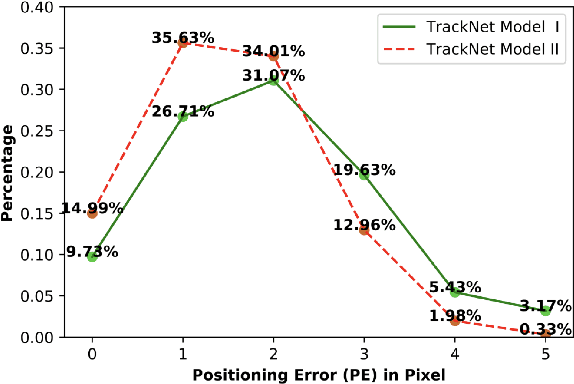

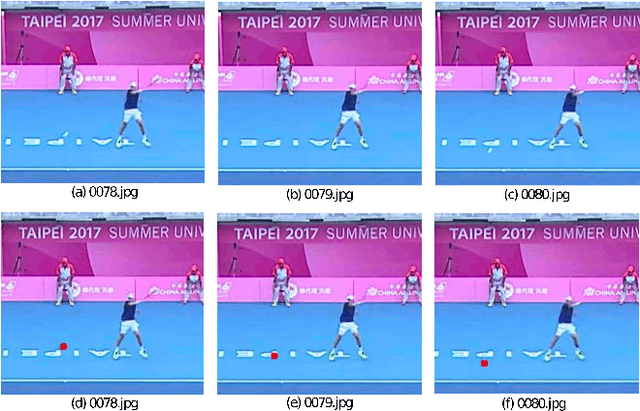

Abstract: Ball trajectory data are among the most fundamental and useful information for evaluating players' performance and analyzing game strategies. Although vision-based object tracking techniques have been developed to analyze sports competition videos, it is still challenging to recognize and position a tiny, high-speed ball accurately. In this paper, we develop a deep learning network, called TrackNet, to track the tennis ball in broadcast videos in which the ball images are small and blurry, and sometimes show afterimage trails or are not visible at all. The proposed heatmap-based deep learning network is trained not only to recognize the ball image from a single frame but also to learn flying patterns from consecutive frames. TrackNet takes images with a size of $640\times360$ and generates a detection heatmap from either a single frame or several consecutive frames to position the ball, and it can achieve high precision even on public domain videos. The network is evaluated on the video of the men's singles final at the 2017 Summer Universiade, which is available on YouTube. The precision, recall, and F1-measure of TrackNet reach $99.7\%$, $97.3\%$, and $98.5\%$, respectively. To prevent overfitting, 9 additional videos are partially labeled and combined with a subset of the previous dataset to implement 10-fold cross-validation, for which the precision, recall, and F1-measure are $95.3\%$, $75.7\%$, and $84.3\%$, respectively. A conventional image processing algorithm is also implemented for comparison with TrackNet. Our experiments indicate that TrackNet outperforms the conventional method by a large margin and achieves exceptional ball tracking performance. The dataset and demo video are available at https://nol.cs.nctu.edu.tw/ndo3je6av9/.
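As a quick sanity check on the figures in the abstract above, the F1-measure can be recomputed from the reported precision and recall as their harmonic mean. The following is a minimal sketch, not code from the paper; the helper name `f1_score` is an assumption introduced here for illustration.

```python
def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall, both given as fractions in [0, 1].

    Hypothetical helper for illustration; not taken from the TrackNet code.
    """
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# Figures reported in the abstract, converted from percentages to fractions.
single_video = f1_score(0.997, 0.973)  # 2017 Summer Universiade final
cross_val = f1_score(0.953, 0.757)     # 10-fold cross-validation

print(f"single video: {single_video:.1%}")  # single video: 98.5%
print(f"cross-validation: {cross_val:.1%}")
```

The single-video value reproduces the reported $98.5\%$. For the cross-validation figures, the rounded inputs give roughly $84.4\%$, which is within rounding of the reported $84.3\%$, since the published precision and recall are themselves rounded to one decimal place.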
