Chieh-Chih Wang

Multi-modal Motion Prediction using Temporal Ensembling with Learning-based Aggregation

Oct 25, 2024

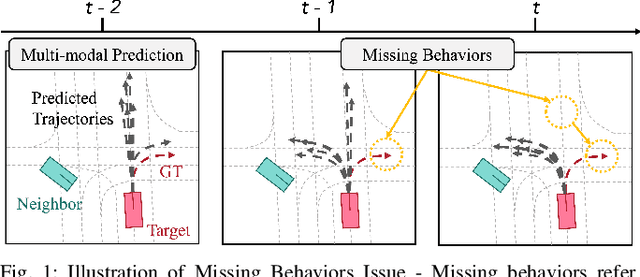

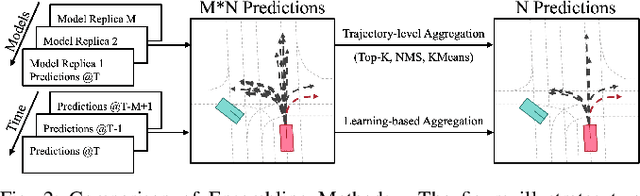

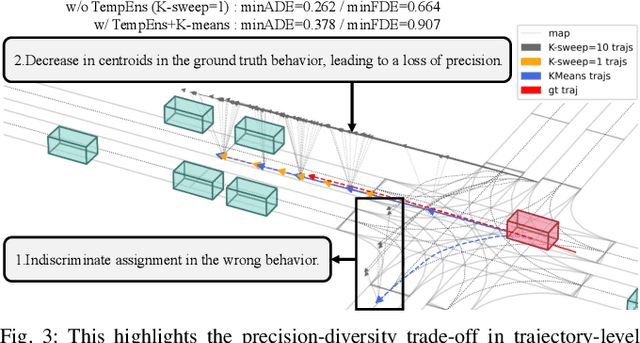

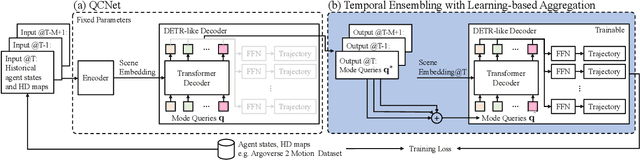

Abstract: Recent years have seen a shift towards learning-based methods for trajectory prediction, with challenges remaining in addressing uncertainty and capturing multi-modal distributions. This paper introduces Temporal Ensembling with Learning-based Aggregation, a meta-algorithm designed to mitigate the issue of missing behaviors in trajectory prediction, which leads to inconsistent predictions across consecutive frames. Unlike conventional model ensembling, temporal ensembling leverages predictions from nearby frames to enhance spatial coverage and prediction diversity. By confirming predictions from multiple frames, temporal ensembling compensates for occasional errors in individual frame predictions. Furthermore, trajectory-level aggregation, often utilized in model ensembling, is insufficient for temporal ensembling due to a lack of consideration of traffic context and its tendency to assign candidate trajectories with incorrect driving behaviors to final predictions. We further emphasize the necessity of learning-based aggregation by utilizing mode queries within a DETR-like architecture for our temporal ensembling, leveraging the characteristics of predictions from nearby frames. Our method, validated on the Argoverse 2 dataset, shows notable improvements: a 4% reduction in minADE, a 5% decrease in minFDE, and a 1.16% reduction in the miss rate compared to the strongest baseline, QCNet, highlighting its efficacy and potential in autonomous driving.
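
The three metrics quoted in the abstract can be illustrated with a minimal sketch. This is not the paper's evaluation code: the function names are hypothetical, minADE is taken here as the lowest average displacement over all candidates, and the 2.0 m miss threshold is an assumed default rather than a value stated in the abstract.

```python
import math


def displacement(p, q):
    """Euclidean distance between two (x, y) points."""
    return math.hypot(p[0] - q[0], p[1] - q[1])


def min_ade(candidates, truth):
    """Lowest average displacement error over all candidate trajectories."""
    return min(
        sum(displacement(p, q) for p, q in zip(traj, truth)) / len(truth)
        for traj in candidates
    )


def min_fde(candidates, truth):
    """Lowest final (endpoint) displacement error over all candidates."""
    return min(displacement(traj[-1], truth[-1]) for traj in candidates)


def is_miss(candidates, truth, threshold=2.0):
    """True when no candidate endpoint lies within `threshold` of the truth.

    The 2.0 default is an assumption made for this sketch.
    """
    return min_fde(candidates, truth) > threshold


# Toy example: one ground-truth trajectory and two predicted modes.
truth = [(0.0, 0.0), (1.0, 0.0), (2.0, 0.0)]
mode_a = [(0.0, 1.0), (1.0, 1.0), (2.0, 1.0)]  # offset by 1 m throughout
mode_b = [(0.0, 0.0), (1.0, 0.0), (2.0, 4.0)]  # exact until a bad endpoint
print(min_ade([mode_a, mode_b], truth), min_fde([mode_a, mode_b], truth))
```

Because each metric takes a minimum over the candidate set, adding a well-placed candidate from a nearby frame can only lower it for a fixed scene, which is one way to read the abstract's point about coverage and diversity.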

Radar Occupancy Prediction with Lidar Supervision while Preserving Long-Range Sensing and Penetrating Capabilities

Dec 08, 2021

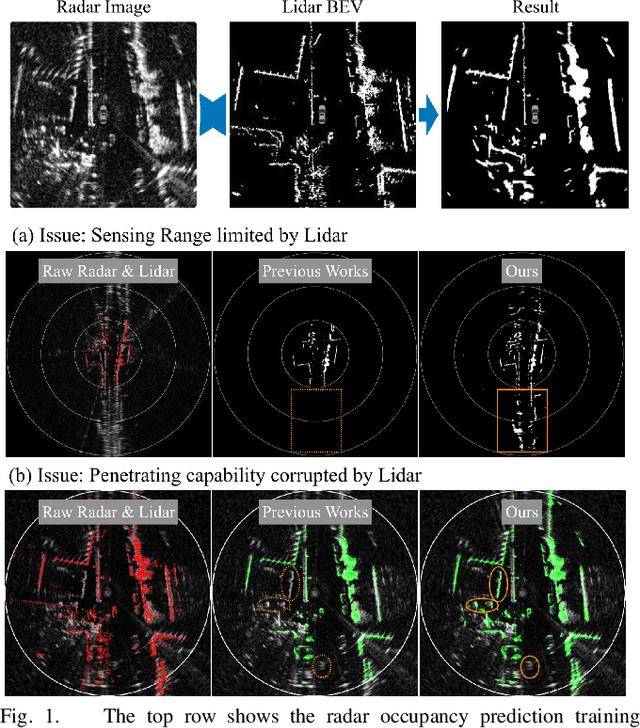

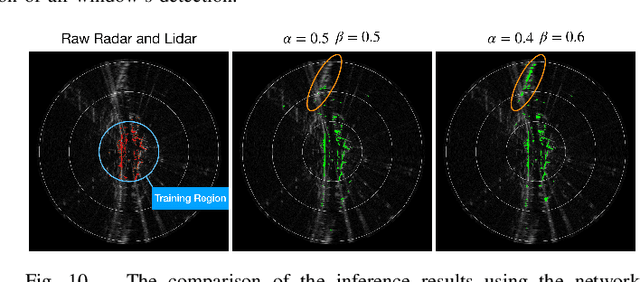

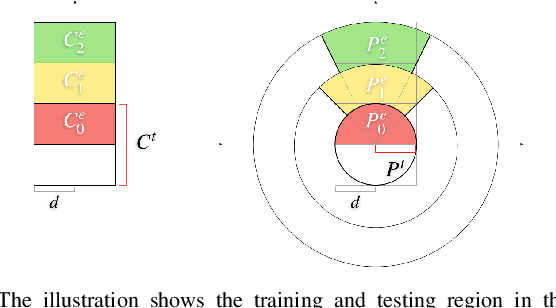

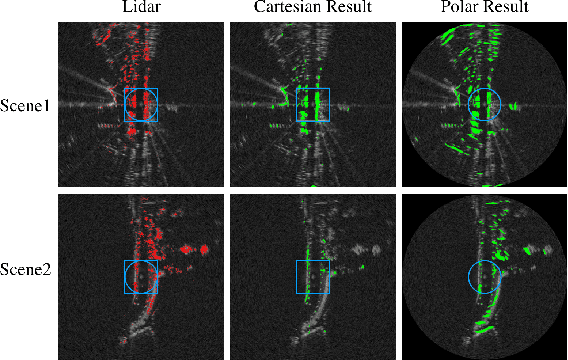

Abstract: Radar shows great potential for autonomous driving because it provides long-range sensing under diverse weather conditions. However, radar is also a particularly challenging sensing modality because of its noise. Recent works have made enormous progress in classifying free and occupied space in radar images by leveraging lidar label supervision. Several issues nevertheless remain unsolved. First, the sensing distance of the results is limited by the sensing range of lidar. Second, lidar supervision degrades the results because of the physical sensing discrepancies between the two sensors. For example, some objects visible to lidar are invisible to radar, and some objects occluded in lidar scans are visible in radar images because of the radar's penetrating capability. These sensing differences cause false positives and a loss of penetrating capability, respectively. In this paper, we propose training data preprocessing and polar sliding window inference to solve these issues. The data preprocessing aims to reduce the effect of radar-invisible measurements in lidar scans. The polar sliding window inference aims to solve the limited sensing range issue by applying a near-range trained network to the long-range region. Instead of using the common Cartesian representation, we propose to use a polar representation to reduce the shape dissimilarity between long-range and near-range data. We find that extending a near-range trained network to long-range region inference in polar space yields a 4.2 times higher IoU than in Cartesian space. In addition, the polar sliding window inference can preserve the radar penetrating capability by changing the viewpoint of the inference region, which makes some occluded measurements appear non-occluded to a pretrained network.
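
The IoU figure in the abstract compares predicted and reference occupancy. As a minimal sketch of that metric only (not the paper's evaluation code), the hypothetical function below computes IoU between two binary grids stored as nested lists, where 1 means occupied. Returning 1.0 when both grids are empty is a convention assumed here.

```python
def occupancy_iou(pred, label):
    """Intersection over union of the occupied cells in two binary grids.

    `pred` and `label` are equally sized nested lists of 0/1 values.
    """
    intersection = 0
    union = 0
    for pred_row, label_row in zip(pred, label):
        for p, l in zip(pred_row, label_row):
            if p and l:
                intersection += 1
            if p or l:
                union += 1
    # Assumed convention: two empty grids agree perfectly.
    return intersection / union if union else 1.0


pred = [[1, 1, 0],
        [0, 0, 0]]
label = [[1, 0, 0],
         [0, 1, 0]]
print(occupancy_iou(pred, label))  # one shared cell out of three occupied
```

The same function applies whether the grid rows index Cartesian cells or polar range and azimuth bins, since it only compares cell by cell.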

A Normal Distribution Transform-Based Radar Odometry Designed For Scanning and Automotive Radars

Mar 16, 2021

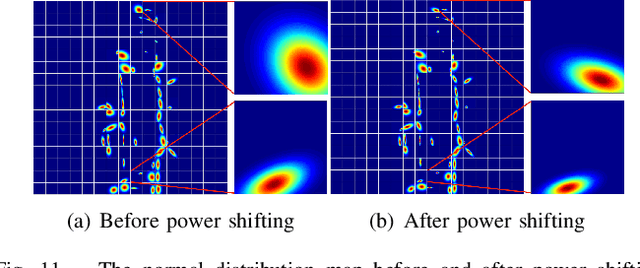

Abstract: Existing radar sensors can be classified into automotive and scanning radars. While most radar odometry (RO) methods are designed for only one type of radar, our RO method adapts to both scanning and automotive radars. Our RO is simple yet effective: the pipeline consists of thresholding, probabilistic submap building, and NDT-based radar scan matching. The proposed RO has been tested on two public radar datasets, the Oxford Radar RobotCar dataset and the nuScenes dataset, which provide scanning and automotive radar data, respectively. The results show that our approach surpasses state-of-the-art RO using either automotive or scanning radar by reducing translational error by 51% and 30%, respectively, and rotational error by 17% and 29%, respectively. In addition, we show that our RO achieves centimeter-level accuracy comparable to lidar odometry, and that automotive and scanning RO have similar accuracy.
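
The core idea behind NDT-based matching can be sketched in a few lines: the points falling in a grid cell are summarized by a Gaussian, and a query point is scored by its likelihood under that Gaussian. The code below is a generic 2D illustration under that assumption, not the paper's pipeline. The function names and the `eps` regularizer are hypothetical, and thresholding, submap building, and pose optimization are left out.

```python
import math


def fit_cell(points):
    """Fit a 2D Gaussian (mean and sample covariance) to the points in one cell.

    Needs at least two points. Returns ((mx, my), (sxx, sxy, syy)).
    """
    n = len(points)
    mx = sum(p[0] for p in points) / n
    my = sum(p[1] for p in points) / n
    sxx = sum((p[0] - mx) ** 2 for p in points) / (n - 1)
    syy = sum((p[1] - my) ** 2 for p in points) / (n - 1)
    sxy = sum((p[0] - mx) * (p[1] - my) for p in points) / (n - 1)
    return (mx, my), (sxx, sxy, syy)


def ndt_score(point, mean, cov, eps=1e-6):
    """Unnormalized Gaussian likelihood of `point` under the cell distribution.

    `eps` is added to the diagonal so that near-degenerate cells stay invertible.
    """
    sxx, sxy, syy = cov
    sxx += eps
    syy += eps
    det = sxx * syy - sxy * sxy
    dx = point[0] - mean[0]
    dy = point[1] - mean[1]
    # Squared Mahalanobis distance using the closed-form 2x2 inverse.
    maha = (syy * dx * dx - 2.0 * sxy * dx * dy + sxx * dy * dy) / det
    return math.exp(-0.5 * maha)


cell_points = [(0.0, 0.0), (2.0, 0.0), (0.0, 2.0), (2.0, 2.0)]
mean, cov = fit_cell(cell_points)
print(ndt_score((1.5, 1.0), mean, cov), ndt_score((3.0, 1.0), mean, cov))
```

A scan matcher built on this would search for the rigid transform that maximizes the summed score of the transformed scan points over their cells.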

Bayesian Fisher's Discriminant for Functional Data

Dec 09, 2014

Abstract: We propose a Bayesian framework based on Gaussian processes to extend Fisher's discriminant to the classification of functional data such as spectra and images. The probability structure of our extended Fisher's discriminant is explicitly formulated, and we use the smoothness assumptions of functional data as prior probabilities. Existing methods that directly employ the smoothness assumption of functional data can be shown to be special cases within this framework given corresponding priors, while their estimates of the unknowns are one-step approximations to the proposed MAP estimates. Empirical results on various simulation studies and different real applications show that the proposed method significantly outperforms the other Fisher's discriminant methods for functional data.
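
For orientation, the classical two-class Fisher's discriminant that the abstract takes as its starting point can be sketched as follows. This is the textbook finite-dimensional version on 2D points, not the Bayesian Gaussian-process extension proposed in the paper. The function names and the small `ridge` term are assumptions made for this illustration.

```python
def mean2(points):
    """Mean of a list of 2D points."""
    n = len(points)
    return (sum(p[0] for p in points) / n, sum(p[1] for p in points) / n)


def scatter2(points, m):
    """Within-class scatter (sxx, sxy, syy) of 2D points around the mean m."""
    sxx = sum((p[0] - m[0]) ** 2 for p in points)
    syy = sum((p[1] - m[1]) ** 2 for p in points)
    sxy = sum((p[0] - m[0]) * (p[1] - m[1]) for p in points)
    return sxx, sxy, syy


def fisher_discriminant(class0, class1, ridge=1e-9):
    """Return (w, threshold) with w = Sw^{-1} (m1 - m0).

    `ridge` keeps Sw invertible and is an assumption of this sketch.
    """
    m0, m1 = mean2(class0), mean2(class1)
    a0, b0, c0 = scatter2(class0, m0)
    a1, b1, c1 = scatter2(class1, m1)
    a, b, c = a0 + a1 + ridge, b0 + b1, c0 + c1 + ridge
    det = a * c - b * b
    dx, dy = m1[0] - m0[0], m1[1] - m0[1]
    # Closed-form 2x2 solve of Sw * w = (m1 - m0).
    w = ((c * dx - b * dy) / det, (a * dy - b * dx) / det)
    mid = ((m0[0] + m1[0]) / 2.0, (m0[1] + m1[1]) / 2.0)
    threshold = w[0] * mid[0] + w[1] * mid[1]
    return w, threshold


def classify(x, w, threshold):
    """Assign class 1 when the projection onto w exceeds the threshold."""
    return 1 if w[0] * x[0] + w[1] * x[1] > threshold else 0


class0 = [(0.0, 0.0), (1.0, 0.0), (0.0, 1.0), (1.0, 1.0)]
class1 = [(4.0, 0.0), (5.0, 0.0), (4.0, 1.0), (5.0, 1.0)]
w, t = fisher_discriminant(class0, class1)
print(classify((0.2, 0.3), w, t), classify((4.8, 0.9), w, t))
```

With functional data such as spectra, each sample has many more dimensions than there are samples, so the within-class scatter becomes singular. That is the setting in which smoothness priors of the kind the abstract describes come into play.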
