Radar Occupancy Prediction with Lidar Supervision while Preserving Long-Range Sensing and Penetrating Capabilities


Dec 08, 2021

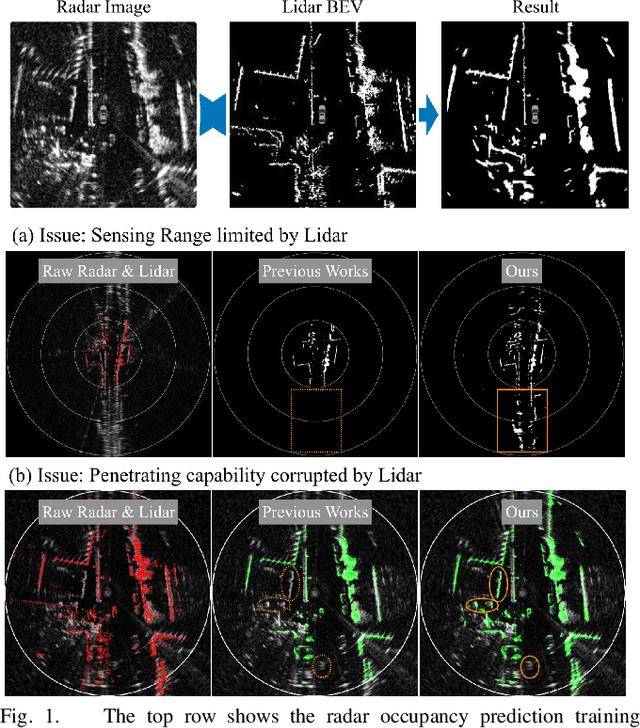

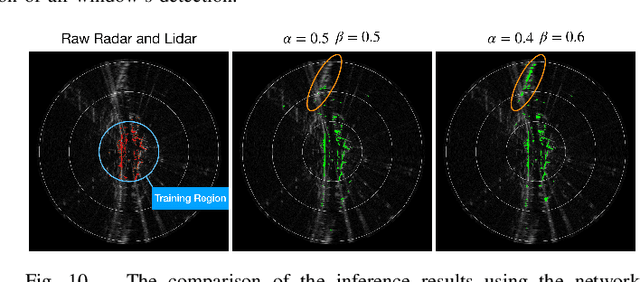

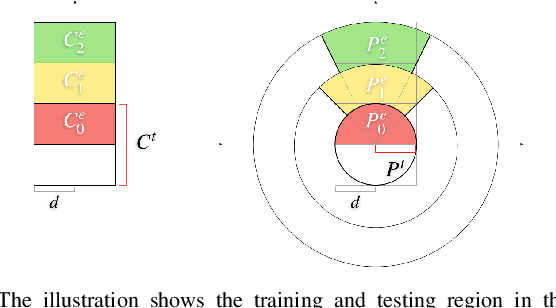

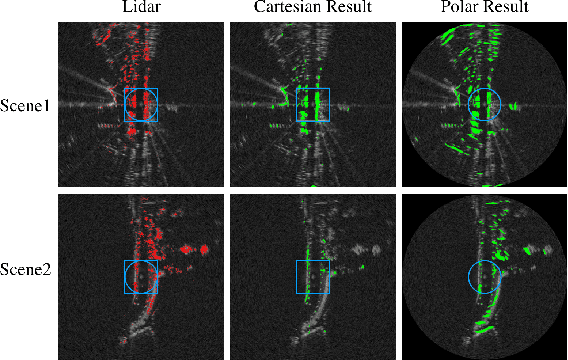

Radar shows great potential for autonomous driving because it provides long-range sensing under diverse weather conditions. However, radar is also a particularly challenging sensing modality because its measurements are noisy. Recent works have made enormous progress in classifying free and occupied space in radar images by using lidar labels for supervision. Several issues nevertheless remain unsolved. First, the sensing distance of the results is limited by the sensing range of lidar. Second, lidar supervision degrades the results because of the physical sensing discrepancies between the two sensors. For example, some objects visible to lidar are invisible to radar, and some objects occluded in lidar scans are visible in radar images because of radar's penetrating capability. These sensing differences cause false positives and a loss of penetrating capability, respectively. In this paper, we propose training data preprocessing and polar sliding window inference to address these issues. The data preprocessing reduces the effect of radar-invisible measurements in lidar scans. The polar sliding window inference addresses the limited sensing range by applying a network trained on near-range data to the long-range region. Instead of the common Cartesian representation, we use a polar representation to reduce the shape dissimilarity between long-range and near-range data. We find that extending a near-range trained network to long-range inference in polar space yields 4.2 times higher IoU than in Cartesian space. In addition, the polar sliding window inference preserves the radar's penetrating capability by changing the viewpoint of the inference region, which makes some occluded measurements appear non-occluded to a pretrained network.
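
To make the idea of polar sliding window inference more concrete, the following is a minimal sketch and not the authors' implementation. It assumes a square, sensor-centered Cartesian radar image, nearest-neighbor resampling to a (range, azimuth) grid, and a window that slides only along the range axis, with overlapping predictions averaged. The names `cartesian_to_polar`, `polar_sliding_window`, and `model` are hypothetical, and the viewpoint change described in the abstract is not modeled here.

```python
import numpy as np


def cartesian_to_polar(img, n_range, n_azimuth):
    """Resample a sensor-centered Cartesian image to a (range, azimuth) grid.

    Assumption: nearest-neighbor lookup; the sensor sits at the image center.
    """
    h, w = img.shape
    cy, cx = (h - 1) / 2.0, (w - 1) / 2.0
    max_r = min(cy, cx)  # largest radius that stays inside the image
    r = np.linspace(0.0, max_r, n_range)
    theta = np.linspace(0.0, 2.0 * np.pi, n_azimuth, endpoint=False)
    rr, tt = np.meshgrid(r, theta, indexing="ij")
    ys = np.rint(cy + rr * np.sin(tt)).astype(int)
    xs = np.rint(cx + rr * np.cos(tt)).astype(int)
    return img[ys, xs]


def polar_sliding_window(polar, model, window, stride):
    """Apply `model` to fixed-size range windows and average the overlaps.

    `model` is a hypothetical callable that maps a (window, n_azimuth) array
    to an occupancy map of the same shape, e.g. a network trained only on
    near-range data.
    """
    n_range = polar.shape[0]
    if window > n_range:
        raise ValueError("window must not exceed the number of range bins")
    starts = list(range(0, n_range - window + 1, stride))
    if starts[-1] != n_range - window:
        starts.append(n_range - window)  # make sure the farthest bins are covered
    acc = np.zeros(polar.shape, dtype=float)
    cnt = np.zeros(polar.shape, dtype=float)
    for s in starts:
        acc[s:s + window] += model(polar[s:s + window])
        cnt[s:s + window] += 1.0
    return acc / cnt


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    radar = rng.random((129, 129))  # synthetic stand-in for a radar image
    polar = cartesian_to_polar(radar, n_range=64, n_azimuth=180)
    # A simple threshold stands in for the trained network in this sketch.
    occupancy = polar_sliding_window(
        polar, lambda p: (p > 0.5).astype(float), window=16, stride=8
    )
    print(occupancy.shape)
```

In this sketch every range window has the same shape in polar space regardless of its distance from the sensor, so a model trained on near-range windows can be applied unchanged farther out. That is the intuition behind preferring the polar representation over the Cartesian one.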
