Cheshta Arora

The Uli Dataset: An Exercise in Experience Led Annotation of oGBV

Nov 15, 2023

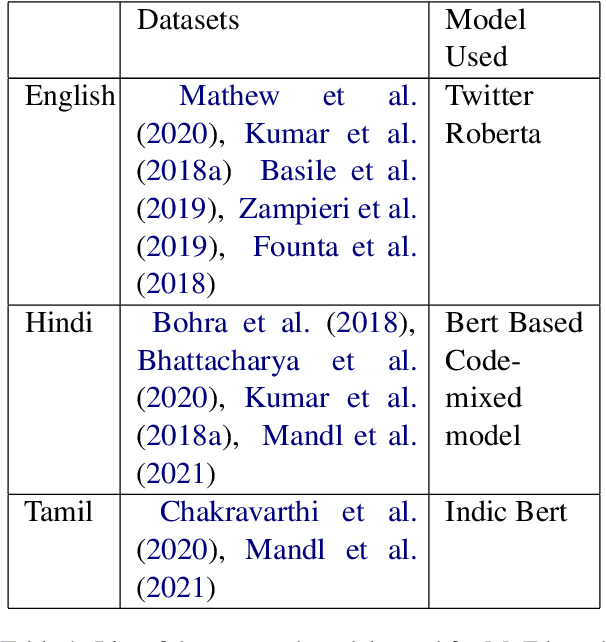

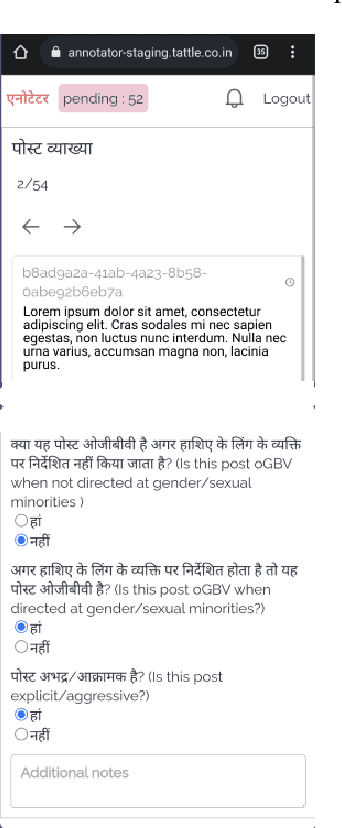

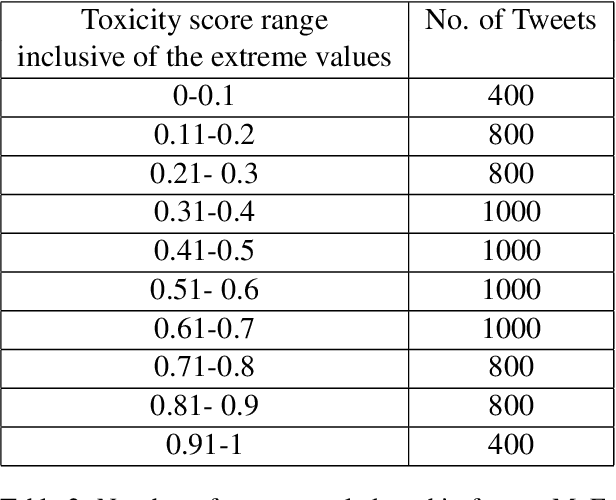

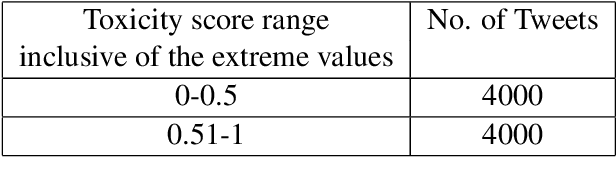

Abstract: Online gender-based violence has grown concomitantly with the adoption of the internet and social media. Its effects are worse in the Global majority, where many users use social media in languages other than English. The scale and volume of conversations on the internet have necessitated automated detection of hate speech, and more specifically gendered abuse. There is, however, a lack of language-specific and contextual data to build such automated tools. In this paper we present a dataset on gendered abuse in three languages: Hindi, Tamil, and Indian English. The dataset comprises tweets annotated along three questions pertaining to the experience of gendered abuse, by experts who identify as women or as members of the LGBTQIA community in South Asia. Through this dataset we demonstrate a participatory approach to creating datasets that drive AI systems.

On the Injunction of XAIxArt

Sep 12, 2023

Abstract: The position paper highlights the range of concerns that are engulfed in the injunction of explainable artificial intelligence in art (XAIxArt). Through a series of quick sub-questions, it points towards the ambiguities concerning 'explanation' and the postpositivist tradition of 'relevant explanation'. Rejecting both 'explanation' and 'relevant explanation', the paper takes the stance that XAIxArt is a symptom of the insecurity of the anthropocentric notion of art and of a nostalgic desire to return to outmoded notions of authorship and human agency. To justify this stance, the paper distinguishes between an ornamentation model of explanation and a model of explanation as sense-making.
