Charles B Delahunt

How Good Are Synthetic Medical Images? An Empirical Study with Lung Ultrasound

Oct 05, 2023

Abstract: Acquiring large quantities of data and annotations is known to be effective for developing high-performing deep learning models, but is difficult and expensive to do in the healthcare context. Adding synthetic training data using generative models offers a low-cost method to deal effectively with the data scarcity challenge, and can also address data imbalance and patient privacy issues. In this study, we propose a comprehensive framework that fits seamlessly into model development workflows for medical image analysis. We demonstrate, with datasets of varying size, (i) the benefits of generative models as a data augmentation method; (ii) how adversarial methods can protect patient privacy via data substitution; (iii) novel performance metrics for these use cases by testing models on real holdout data. We show that training with both synthetic and real data outperforms training with real data alone, and that models trained solely with synthetic data approach their real-only counterparts. Code is available at https://github.com/Global-Health-Labs/US-DCGAN.

Insect cyborgs: Biological feature generators improve machine learning accuracy on limited data

Aug 23, 2018

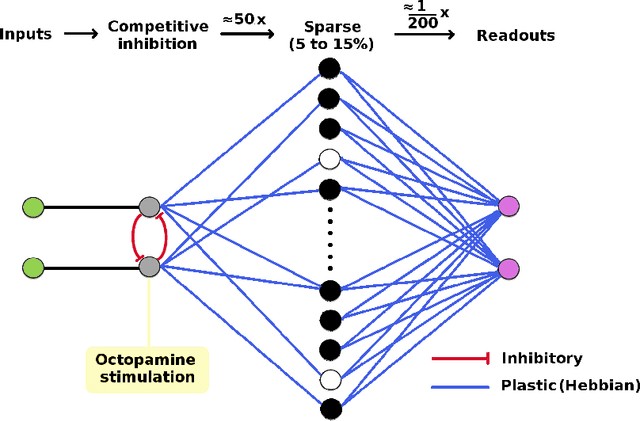

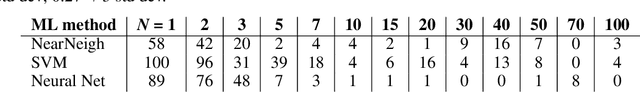

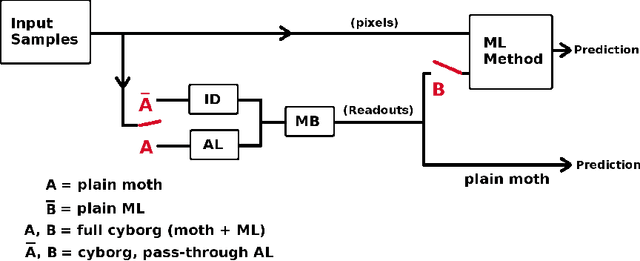

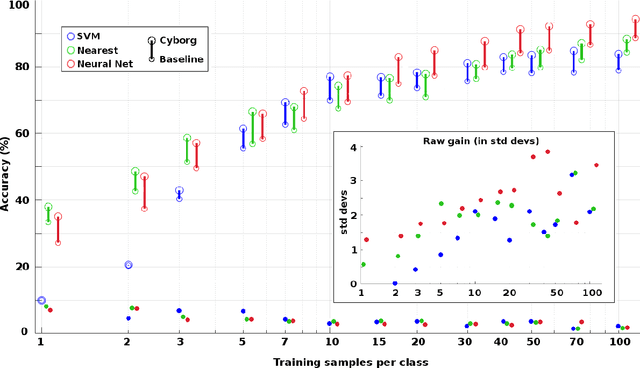

Abstract: Despite many successes, machine learning (ML) methods such as neural nets often struggle to learn given small training sets. In contrast, biological neural nets (BNNs) excel at fast learning. We can thus look to BNNs for tools to improve performance of ML methods in this low-data regime. The insect olfactory network, though simple, can learn new odors very rapidly. Its two key structures are a layer with competitive inhibition (the Antennal Lobe, AL), followed by a high-dimensional sparse plastic layer (the Mushroom Body, MB). This AL-MB network can rapidly learn not only odors but also handwritten digits, in fact better than standard ML methods in the few-shot regime. In this work, we deploy the AL-MB network as an automatic feature generator, using its Readout Neurons as additional features for standard ML classifiers. We hypothesize that the AL-MB structure has a strong intrinsic clustering ability, and that its Readout Neurons, used as input features, will boost the performance of ML methods. We find that these "insect cyborgs", i.e., classifiers that are part moth and part ML method, deliver significantly better performance than baseline ML methods alone on a generic (non-spatial) 85-feature, 10-class task derived from the MNIST dataset. Accuracy improves by an average of 6% to 33% for N < 15 training samples per class, and by 6% to 10% for N > 15. Remarkably, these moth-generated features increase ML accuracy even when the ML method's baseline accuracy already exceeds the AL-MB's own limited capacity. The two structures in the AL-MB, a competitive inhibition layer and a high-dimensional sparse layer with Hebbian plasticity, are novel in the context of artificial NNs but endemic in BNNs. We believe they can be deployed either prepended as feature generators or inserted as layers into deep NNs, to potentially improve ML performance.
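The pipeline described in the abstract (a competitive-inhibition layer, a high-dimensional sparse layer with Hebbian plasticity, and Readout Neurons whose outputs are appended to a classifier's input features) can be sketched roughly as below. This is a minimal illustration under stated assumptions, not the authors' AL-MB model: the inhibition rule, the random sparse connectivity with k-winners-take-all, the label-gated Hebbian update, and every name and parameter (`MothFeatureGenerator`, `strength`, `fan_in`, `k`, `lr`) are hypothetical choices made for this example.

```python
import random
from typing import List, Sequence


def competitive_inhibition(x: Sequence[float], strength: float = 0.5) -> List[float]:
    """Assumed AL-like step: each unit is suppressed by the mean activity of
    the other units, then rectified at zero, so strong inputs stand out."""
    n = len(x)
    total = sum(x)
    out = []
    for xi in x:
        mean_others = (total - xi) / (n - 1) if n > 1 else 0.0
        out.append(max(0.0, xi - strength * mean_others))
    return out


class MothFeatureGenerator:
    """Hypothetical AL-MB-style feature generator (illustrative only)."""

    def __init__(self, n_in: int, n_classes: int, n_mb: int = 200,
                 fan_in: int = 3, k: int = 10, lr: float = 1.0, seed: int = 0):
        rng = random.Random(seed)
        # Sparse random connectivity: each MB unit samples `fan_in` distinct inputs.
        self.conn = [rng.sample(range(n_in), fan_in) for _ in range(n_mb)]
        self.k = k
        self.lr = lr
        # One row of readout weights per class (one Readout Neuron per class).
        self.w = [[0.0] * n_mb for _ in range(n_classes)]

    def mb_code(self, x: Sequence[float]) -> List[float]:
        """High-dimensional sparse binary code via k-winners-take-all."""
        al = competitive_inhibition(x)
        act = [sum(al[j] for j in idx) for idx in self.conn]
        winners = sorted(range(len(act)), key=lambda i: act[i], reverse=True)[: self.k]
        code = [0.0] * len(act)
        for i in winners:
            if act[i] > 0.0:  # silent units stay off even if ranked in the top k
                code[i] = 1.0
        return code

    def fit(self, X: Sequence[Sequence[float]], y: Sequence[int]) -> "MothFeatureGenerator":
        """Simplified, label-gated Hebbian rule: strengthen synapses from active
        MB units onto the Readout Neuron of the sample's class."""
        for x, label in zip(X, y):
            code = self.mb_code(x)
            row = self.w[label]
            for i, c in enumerate(code):
                row[i] += self.lr * c
        return self

    def readouts(self, x: Sequence[float]) -> List[float]:
        """Readout Neuron activations: weighted sum of the sparse MB code."""
        code = self.mb_code(x)
        return [sum(wi * ci for wi, ci in zip(row, code)) for row in self.w]

    def transform(self, X: Sequence[Sequence[float]]) -> List[List[float]]:
        """Append Readout Neuron activations to the original features."""
        return [list(x) + self.readouts(x) for x in X]


# Toy usage: two classes whose active inputs do not overlap.
X = [[1.0, 1.0, 0.0, 0.0]] * 3 + [[0.0, 0.0, 1.0, 1.0]] * 3
y = [0, 0, 0, 1, 1, 1]
gen = MothFeatureGenerator(n_in=4, n_classes=2, n_mb=50, fan_in=2, k=10).fit(X, y)
Z = gen.transform(X)  # each row: 4 original features + 2 readout activations
```

The rows of `Z` would then be passed to any standard classifier in place of `X`; the classifier itself is left out because the abstract does not tie the approach to a particular ML method.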