Changyan Chi

If I Hear You Correctly: Building and Evaluating Interview Chatbots with Active Listening Skills

Feb 05, 2020

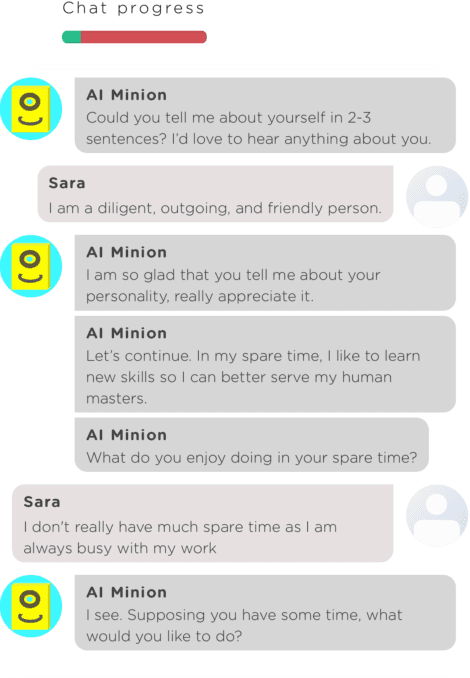

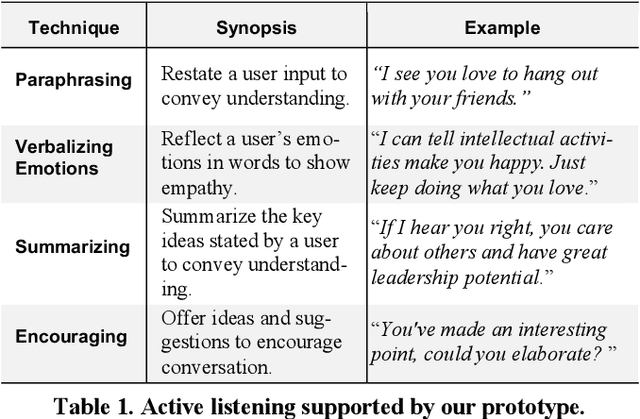

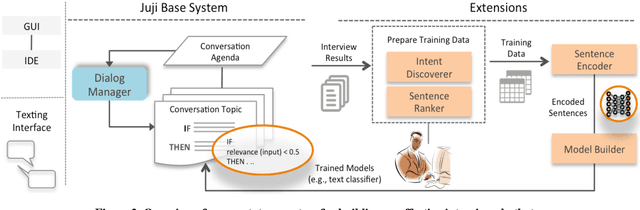

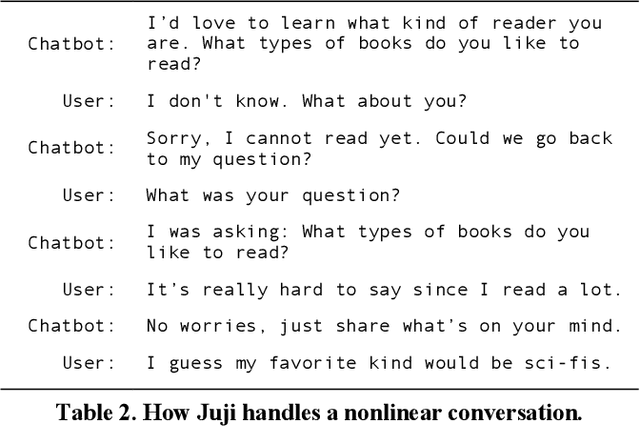

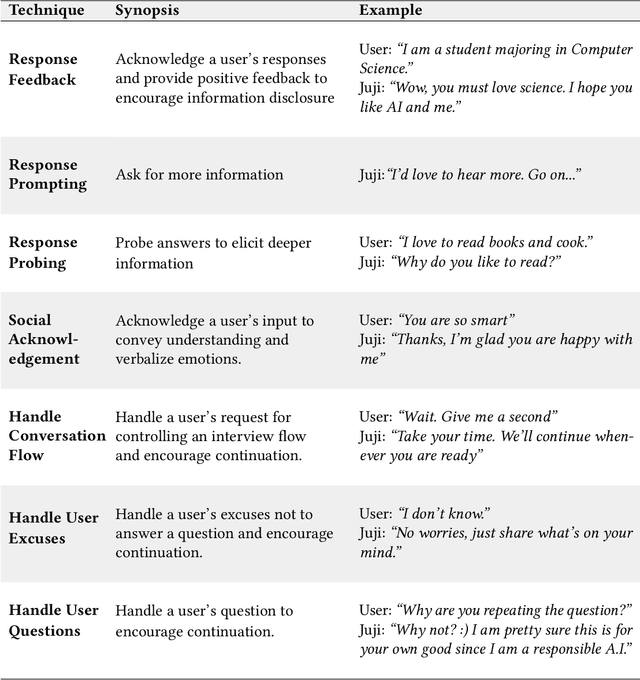

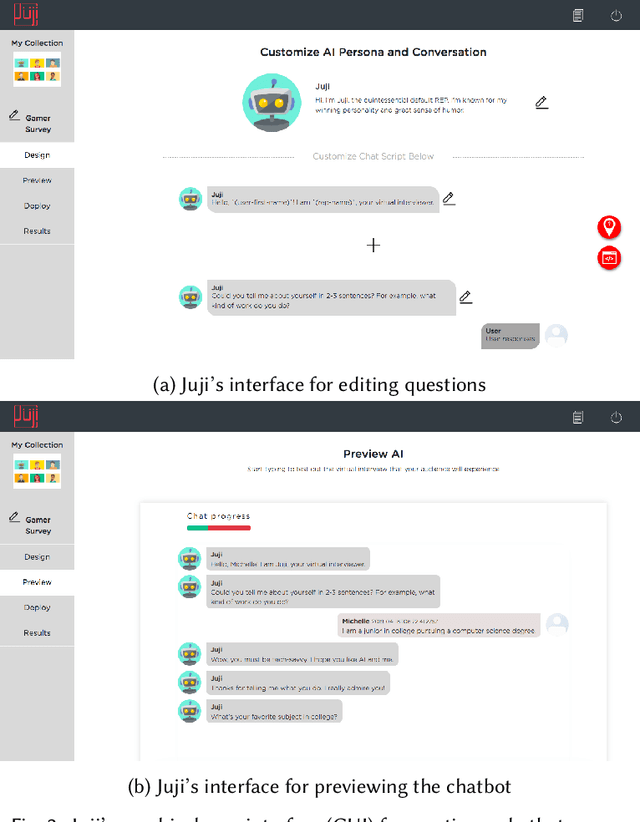

Abstract: Interview chatbots engage users in a text-based conversation to draw out their views and opinions. It is, however, challenging to build effective interview chatbots that can handle user free-text responses to open-ended questions and deliver an engaging user experience. As a first step, we are investigating the feasibility and effectiveness of using publicly available, practical AI technologies to build effective interview chatbots. To demonstrate feasibility, we built a prototype scoped to enable interview chatbots with a subset of active listening skills - the abilities to comprehend a user's input and respond properly. To evaluate the effectiveness of our prototype, we compared the performance of interview chatbots with or without active listening skills on four common interview topics in a live evaluation with 206 users. Our work presents practical design implications for building effective interview chatbots, hybrid chatbot platforms, and empathetic chatbots beyond interview tasks.

Tell Me About Yourself: Using an AI-Powered Chatbot to Conduct Conversational Surveys

May 25, 2019

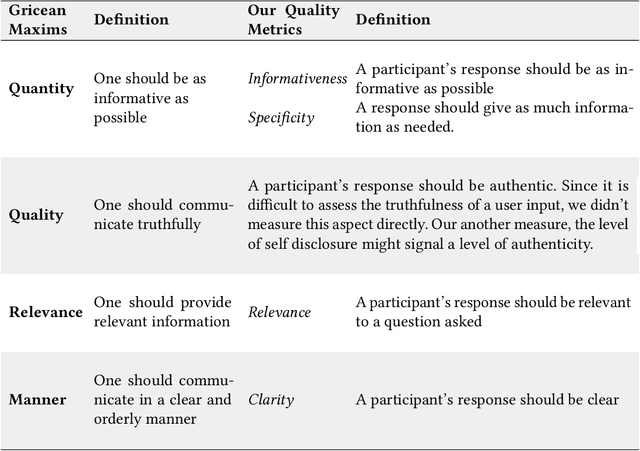

Abstract: The rise of increasingly powerful chatbots offers a new way to collect information through conversational surveys, where a chatbot asks open-ended questions, interprets a user's free-text responses, and probes answers when needed. To investigate the effectiveness and limitations of such a chatbot in conducting surveys, we conducted a field study involving about 600 participants. In this study, half of the participants took a typical online survey on Qualtrics and the other half interacted with an AI-powered chatbot to complete a conversational survey. Our detailed analysis of over 5200 free-text responses revealed that the chatbot drove a significantly higher level of participant engagement and elicited significantly better quality responses in terms of relevance, depth, and readability. Based on our results, we discuss design implications for creating AI-powered chatbots to conduct effective surveys and beyond.
