Changwoo Park

Learning Dynamic Manipulation Skills from Haptic-Play

Jul 28, 2022

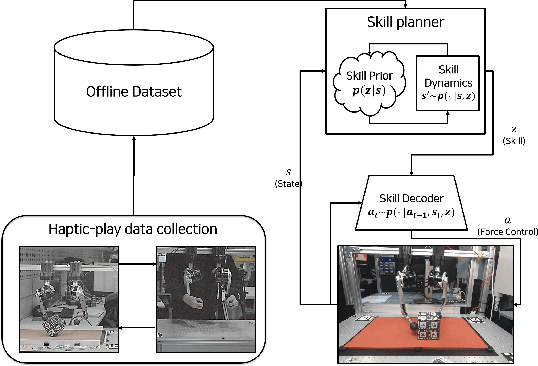

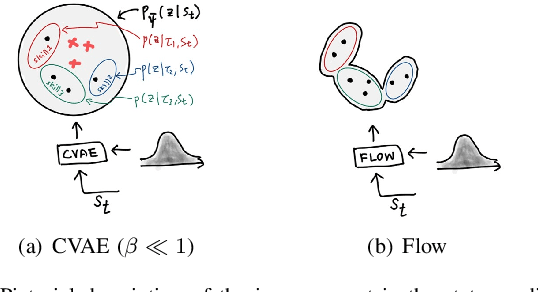

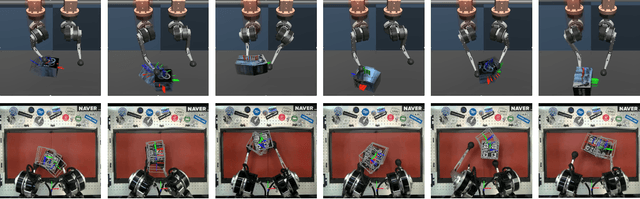

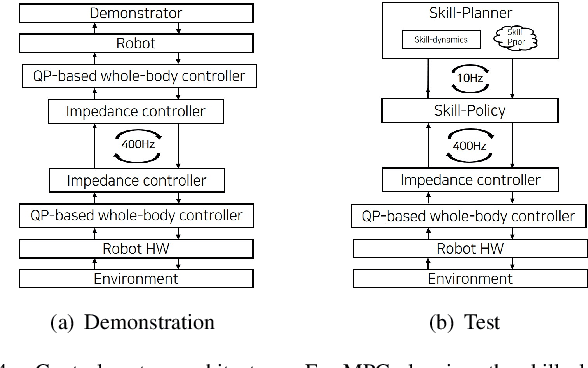

Abstract: In this paper, we propose a data-driven skill learning approach that solves highly dynamic manipulation tasks entirely from offline teleoperated play data. We use a bilateral teleoperation system to continuously collect a large set of dexterous and agile manipulation behaviors; providing direct force feedback to the operator is what makes this possible. Using a two-stage generative modeling framework, we jointly learn a state-conditional latent skill distribution, a skill decoder network in the form of a goal-conditioned policy, and skill-conditional state transition dynamics. This enables robust model-based planning, both online and offline, in the learned skill space to accomplish a given downstream task at test time. We provide both simulated and real-world dual-arm box manipulation experiments showing that a sequence of force-controlled dynamic manipulation skills can be composed in real time to move the box to a randomly selected target position and orientation; please refer to the supplementary video, https://youtu.be/LA5B236ILzM.
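To make the idea of planning in a learned skill space concrete, here is a minimal sketch in Python. It is not the paper's method: the abstract does not name a planner, so simple random shooting is swapped in, and `skill_prior`, `skill_dynamics`, and `plan_skills` are hypothetical toy stand-ins for the learned state-conditional skill distribution and the skill-conditional transition dynamics. The 2-D state and the linear dynamics are assumptions chosen only so the example runs.

```python
import numpy as np

def skill_prior(states, rng):
    """Hypothetical stand-in for a learned skill prior p(z | s).
    Here it ignores the state and samples a 2-D Gaussian skill per row."""
    return rng.normal(size=(states.shape[0], 2))

def skill_dynamics(states, skills):
    """Hypothetical stand-in for learned skill-conditional dynamics.
    Predicts the state reached after executing each skill (toy linear model)."""
    return states + 0.5 * skills

def plan_skills(state, goal, horizon=3, n_samples=256, seed=0):
    """Random-shooting planner (an assumption, not the paper's planner):
    sample skill sequences, roll them out with the model, keep the best."""
    rng = np.random.default_rng(seed)
    states = np.repeat(state[None, :], n_samples, axis=0)
    sequences = np.zeros((horizon, n_samples, 2))
    for t in range(horizon):
        sequences[t] = skill_prior(states, rng)
        states = skill_dynamics(states, sequences[t])
    # Cost: distance between the predicted final state and the goal.
    costs = np.linalg.norm(states - goal, axis=1)
    best = int(np.argmin(costs))
    return sequences[:, best, :], float(costs[best])

state = np.array([0.0, 0.0])
goal = np.array([1.0, -1.0])
best_sequence, best_cost = plan_skills(state, goal)
```

In an online (receding-horizon) setting, one would execute only `best_sequence[0]` through the skill decoder and then replan from the newly observed state; in an offline setting, the whole sequence would be executed as planned.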
