Chaitra Hedge

Indoor Localization and Multi-person Tracking Using Privacy Preserving Distributed Camera Network with Edge Computing

May 08, 2023

Abstract: Localization of individuals in a built environment is a growing research topic. Estimating the positions, face orientations (or gaze directions), and trajectories of people through space has many uses, including crowd management, security, and healthcare. In this work, we present an open-source, low-cost, scalable, and privacy-preserving edge computing framework for multi-person localization, i.e., estimating the positions, orientations, and trajectories of multiple people in an indoor space. Our computing framework consists of 38 Tensor Processing Unit (TPU)-enabled edge computing camera systems mounted in the ceiling of an indoor therapeutic space. The edge compute systems are connected to an on-premise fog server through a secure and private network. A multi-person detection algorithm and a pose estimation model run on the edge TPU in real time to extract features, which are used instead of raw images for downstream computations. This preserves the privacy of individuals in the space, reduces data transmission and storage, and improves scalability. We implemented a Kalman filter-based multi-person tracking method and a state-of-the-art body orientation estimation method to determine the positions and facing orientations of multiple people simultaneously in the indoor space. For our 18,000-square-foot study site, the system achieved an average localization error of 1.41 meters, a multiple-object tracking accuracy score of 62%, and a mean absolute body orientation error of 29°, which is sufficient for understanding group activity behaviors in indoor environments. Additionally, our study provides practical guidance for deploying the proposed system by analyzing how various aspects of the camera installation affect tracking accuracy.
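The abstract names Kalman filter-based tracking but does not give the state model. As a rough illustration only, the sketch below assumes a constant-velocity model with one independent filter per floor-plane axis. The class names `AxisKalman` and `Track2D` and the noise values `q` and `r` are hypothetical, and the data association needed to track several people at once is not shown.

```python
class AxisKalman:
    """Constant-velocity Kalman filter for one coordinate (state: position, velocity)."""

    def __init__(self, pos, q=0.01, r=1.0):
        self.p, self.v = pos, 0.0
        # Covariance [[a, b], [c, d]]; the initial velocity is highly uncertain.
        self.a, self.b, self.c, self.d = 1.0, 0.0, 0.0, 10.0
        self.q, self.r = q, r  # assumed process and measurement noise variances

    def predict(self, dt):
        # x <- F x and P <- F P F^T + Q, with F = [[1, dt], [0, 1]].
        self.p += self.v * dt
        a, b, c, d = self.a, self.b, self.c, self.d
        self.a = a + dt * (b + c) + dt * dt * d + self.q
        self.b = b + dt * d
        self.c = c + dt * d
        self.d = d + self.q

    def update(self, z):
        # Only position is measured (H = [1, 0]).
        s = self.a + self.r                 # innovation variance
        k0, k1 = self.a / s, self.c / s     # Kalman gain
        y = z - self.p                      # innovation
        self.p += k0 * y
        self.v += k1 * y
        a, b, c, d = self.a, self.b, self.c, self.d
        self.a, self.b = (1 - k0) * a, (1 - k0) * b
        self.c, self.d = c - k1 * a, d - k1 * b


class Track2D:
    """One person's track on the floor plane: an independent filter per axis."""

    def __init__(self, x, y):
        self.fx, self.fy = AxisKalman(x), AxisKalman(y)

    def step(self, dt, measurement=None):
        # Predict every frame; correct only when a detection is available.
        self.fx.predict(dt)
        self.fy.predict(dt)
        if measurement is not None:
            self.fx.update(measurement[0])
            self.fy.update(measurement[1])
        return self.fx.p, self.fy.p


# Usage: feed per-frame position detections (in meters) for one person.
demo = Track2D(0.0, 0.0)
for t in range(1, 11):
    estimate = demo.step(1.0, (t * 1.0, t * 0.5))
```

Calling `step` without a measurement coasts the track on its velocity estimate, which is one common way to bridge frames in which a person is briefly undetected.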

Activity Classification Using Unsupervised Domain Transfer from Body Worn Sensors

Apr 20, 2023

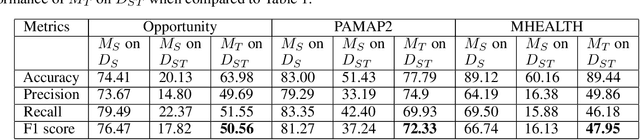

Abstract: Activity classification has become a vital feature of wearable health tracking devices. As innovation in this field grows, wearable devices worn on different parts of the body are emerging. Performing activity classification at a new body location generally requires labeled data from that location, which is expensive to acquire. In this work, we present an innovative method that leverages an existing activity classifier, trained on Inertial Measurement Unit (IMU) data from a reference body location (the source domain), to perform activity classification at a new body location (the target domain) in an unsupervised way, i.e., without the need for classification labels at the new location. Specifically, given an IMU embedding model trained to perform activity classification at the source domain, we train an embedding model for the target domain to replicate the embeddings produced at the source domain. This is achieved using simultaneous IMU measurements at the source and target domains. The replicated embeddings at the target domain are then passed to a classification model previously trained on the source domain, which performs activity classification at the target domain. We evaluated the proposed method on three activity classification datasets, PAMAP2, MHealth, and Opportunity, yielding high F1 scores of 67.19%, 70.40%, and 68.34%, respectively, when the source domain is the wrist and the target domain is the torso.
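The embedding-replication idea can be illustrated with a toy sketch. Everything here is an assumption made for illustration: `source_embed` and `classify` are hypothetical stand-ins for the frozen source-domain models, the target embedding model is a plain linear map trained with a squared-error loss, and the paired data are synthetic. The abstract does not specify the actual architectures or loss.

```python
import random


def source_embed(x):
    """Stand-in for the frozen, pre-trained source-domain embedding model."""
    return [x[0] + x[1], x[0] - x[1]]


def classify(embedding):
    """Stand-in for the frozen classifier trained on source embeddings."""
    return 1 if embedding[0] > 0 else 0


def target_embed(W, x):
    """Trainable target-domain embedding model (here just a linear map)."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]


def train_target(pairs, dim_in=2, dim_out=2, lr=0.05, epochs=200):
    """Fit W so target embeddings replicate source embeddings; no labels used."""
    W = [[0.0] * dim_in for _ in range(dim_out)]
    for _ in range(epochs):
        for x_src, x_tgt in pairs:
            goal = source_embed(x_src)    # embedding to replicate
            pred = target_embed(W, x_tgt)
            for i in range(dim_out):
                err = pred[i] - goal[i]
                for j in range(dim_in):
                    # Gradient step on the squared error 0.5 * err**2.
                    W[i][j] -= lr * err * x_tgt[j]
    return W


# Synthetic simultaneous recordings: the target sensor sees a scaled and
# flipped version of the source signal (a made-up relationship).
random.seed(0)
pairs = []
for _ in range(50):
    s = [random.uniform(-1, 1), random.uniform(-1, 1)]
    pairs.append((s, [2.0 * s[0], -s[1]]))

W = train_target(pairs)

# At inference time only the target sensor is needed: embed, then reuse
# the frozen source-domain classifier unchanged.
label = classify(target_embed(W, [1.0, -0.2]))
```

Only paired sensor readings are used during training; activity labels never enter `train_target`, which is what makes the transfer unsupervised at the target location.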
