Chahan Vidal-Gorène

CJM, LIPN

Under-resourced studies of under-resourced languages: lemmatization and POS-tagging with LLM annotators for historical Armenian, Georgian, Greek and Syriac

Feb 17, 2026

Abstract: Low-resource languages pose persistent challenges for Natural Language Processing tasks such as lemmatization and part-of-speech (POS) tagging. This paper investigates the capacity of recent large language models (LLMs), including GPT-4 variants and open-weight Mistral models, to address these tasks in few-shot and zero-shot settings for four historically and linguistically diverse under-resourced languages: Ancient Greek, Classical Armenian, Old Georgian, and Syriac. Using a novel benchmark comprising aligned training and out-of-domain test corpora, we evaluate the performance of foundation models across lemmatization and POS-tagging, and compare them with PIE, a task-specific RNN baseline. Our results demonstrate that LLMs, even without fine-tuning, achieve competitive or superior performance in POS-tagging and lemmatization across most languages in few-shot settings. Significant challenges persist for languages characterized by complex morphology and non-Latin scripts, but we demonstrate that LLMs are a credible and relevant option for initiating linguistic annotation tasks in the absence of data, serving as an effective aid for annotation.

New Results for the Text Recognition of Arabic Maghribī Manuscripts -- Managing an Under-resourced Script

Nov 29, 2022

Abstract: HTR model development has become a conventional step for digital humanities projects. The performance of these models, often quite high, relies on manual transcription and numerous handwritten documents. Although the method has proven successful for Latin scripts, a similar amount of data is not yet achievable for scripts considered poorly-endowed, like Arabic scripts. In that respect, we introduce and assess a new modus operandi for HTR model development and fine-tuning dedicated to the Arabic Maghribī scripts. The comparison between several state-of-the-art HTR engines demonstrates the relevance of a word-based neural approach specialized for Arabic, capable of achieving an error rate below 5% with only 10 pages manually transcribed. These results open new perspectives for processing Arabic scripts and, more generally, poorly-endowed languages. This research is part of the development of the RASAM dataset in partnership with the GIS MOMM and the BULAC.

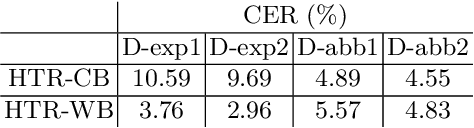

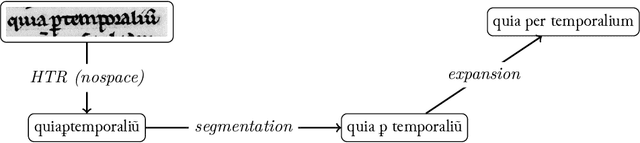

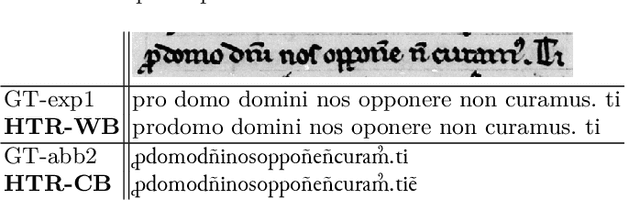

Handling Heavily Abbreviated Manuscripts: HTR engines vs text normalisation approaches

Jul 07, 2021

Abstract: Although abbreviations are fairly common in handwritten sources, particularly in medieval and modern Western manuscripts, previous research dealing with computational approaches to their expansion is scarce. Yet abbreviations present particular challenges to computational approaches such as handwritten text recognition and natural language processing tasks. Often, pre-processing ultimately aims to lead from a digitised image of the source to a normalised text, which includes expansion of the abbreviations. We explore different setups to obtain such a normalised text, either directly, by training HTR engines on normalised (i.e., expanded, disabbreviated) text, or by decomposing the process into discrete steps, each making use of specialist models for recognition, word segmentation and normalisation. The case studies considered here are drawn from the medieval Latin tradition.
