Carlos Purves

Tiny, On-Device Decision Makers with the MiniConv Library

Dec 17, 2025

Abstract: Reinforcement learning (RL) has achieved strong results, but deploying visual policies on resource-constrained edge devices remains challenging due to computational cost and communication latency. Many deployments therefore offload policy inference to a remote server, incurring network round trips and requiring transmission of high-dimensional observations. We introduce a split-policy architecture in which a small on-device encoder, implemented as OpenGL fragment-shader passes for broad embedded GPU support, transforms each observation into a compact feature tensor that is transmitted to a remote policy head. In RL, this communication overhead manifests as closed-loop decision latency rather than only per-request inference latency. The proposed approach reduces transmitted data, lowers decision latency in bandwidth-limited settings, and reduces server-side compute per request, whilst achieving broadly comparable learning performance by final return (mean over the final 100 episodes) in single-run benchmarks, with modest trade-offs in mean return. We evaluate across an NVIDIA Jetson Nano, a Raspberry Pi 4B, and a Raspberry Pi Zero 2 W, reporting learning results, on-device execution behaviour under sustained load, and end-to-end decision latency and scalability measurements under bandwidth shaping. Code for training, deployment, and measurement is released as open source.
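To make the latency argument in this abstract concrete, here is a minimal back-of-the-envelope sketch in Python. It is not taken from the MiniConv code: the function `decision_latency_ms`, the payload sizes, the bandwidth, and all timing figures are hypothetical values chosen only to illustrate why sending a compact feature tensor can shorten the closed-loop decision time on a slow link.

```python
def decision_latency_ms(payload_bytes, bandwidth_mbps, rtt_ms, device_ms, server_ms):
    """Rough closed-loop latency for one decision.

    Sums on-device compute, upload time for the payload, the network
    round trip, and server-side compute. A simplified model, not a
    measurement procedure from the paper.
    """
    transfer_ms = payload_bytes * 8 / (bandwidth_mbps * 1e6) * 1e3
    return device_ms + transfer_ms + rtt_ms + server_ms


# Hypothetical payloads: a stack of four 84x84 uint8 frames versus an
# assumed 11x11x4 uint8 feature tensor produced by an on-device encoder.
raw_bytes = 84 * 84 * 4
feature_bytes = 11 * 11 * 4

# Hypothetical link and compute figures (1 Mbit/s uplink, 20 ms round trip).
full_offload = decision_latency_ms(raw_bytes, 1.0, 20.0, device_ms=0.0, server_ms=5.0)
split_policy = decision_latency_ms(feature_bytes, 1.0, 20.0, device_ms=10.0, server_ms=2.0)

print(f"full offload: {full_offload:.1f} ms, split policy: {split_policy:.1f} ms")
```

Under these assumed numbers the upload dominates the full-offload case, so the extra on-device encoding time is repaid many times over. On a fast link the ordering could reverse, which is why the abstract qualifies the benefit as applying to bandwidth-limited settings.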

Goal-Conditioned Reinforcement Learning in the Presence of an Adversary

Nov 13, 2022

Abstract: Reinforcement learning has seen increasing applications in real-world contexts over the past few years. However, physical environments are often imperfect and policies that perform well in simulation might not achieve the same performance when applied elsewhere. A common approach to combat this is to train agents in the presence of an adversary. An adversary acts to destabilise the agent, which learns a more robust policy and can better handle realistic conditions. Many real-world applications of reinforcement learning also make use of goal-conditioning: this is particularly useful in the context of robotics, as it allows the agent to act differently, depending on which goal is selected. Here, we focus on the problem of goal-conditioned learning in the presence of an adversary. We first present DigitFlip and CLEVR-Play, two novel goal-conditioned environments that support acting against an adversary. Next, we propose EHER and CHER -- two HER-based algorithms for goal-conditioned learning -- and evaluate their performance. Finally, we unify the two threads and introduce IGOAL: a novel framework for goal-conditioned learning in the presence of an adversary. Experimental results show that combining IGOAL with EHER allows agents to significantly outperform existing approaches, when acting against both random and competent adversaries.
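For readers unfamiliar with HER (Hindsight Experience Replay), on which EHER and CHER are based, the following is a minimal Python sketch of plain HER relabelling with the "final" strategy. It does not implement EHER, CHER, or IGOAL, whose details are not given in the abstract. The `Transition` type, the sparse reward, and the assumption that the achieved goal equals the next state are all illustrative choices.

```python
from typing import List, NamedTuple, Tuple


class Transition(NamedTuple):
    state: Tuple[int, ...]
    action: int
    next_state: Tuple[int, ...]
    goal: Tuple[int, ...]
    reward: float


def sparse_reward(achieved: Tuple[int, ...], goal: Tuple[int, ...]) -> float:
    """0 when the goal is reached, -1 otherwise (an assumed reward scheme)."""
    return 0.0 if achieved == goal else -1.0


def relabel_final(episode: List[Transition]) -> List[Transition]:
    """Plain HER, 'final' strategy: pretend the last achieved state was the goal.

    Assumes the achieved goal is simply the next state of each transition.
    """
    new_goal = episode[-1].next_state
    return [
        t._replace(goal=new_goal, reward=sparse_reward(t.next_state, new_goal))
        for t in episode
    ]


# A failed two-step episode in a toy bit-flipping task: the agent wanted
# (0, 1) but ended at (1, 1), so every original reward is -1.
original_goal = (0, 1)
episode = [
    Transition((0, 0), 0, (1, 0), original_goal, -1.0),
    Transition((1, 0), 1, (1, 1), original_goal, -1.0),
]
relabelled = relabel_final(episode)
```

After relabelling, the final transition carries a reward of 0 for the substituted goal, so a failed episode still yields a useful learning signal. Both the original and the relabelled transitions would normally be stored in the replay buffer.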

The PlayStation Reinforcement Learning Environment (PSXLE)

Dec 12, 2019

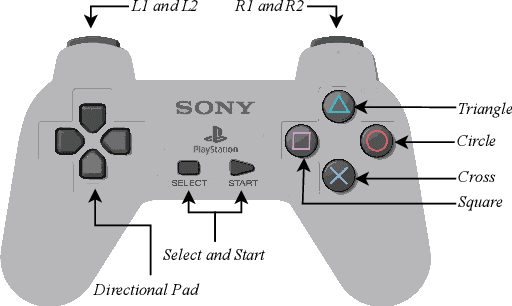

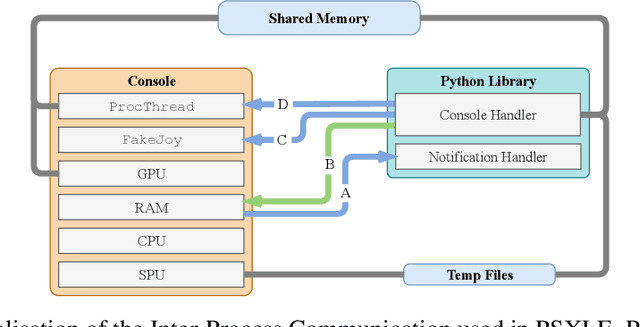

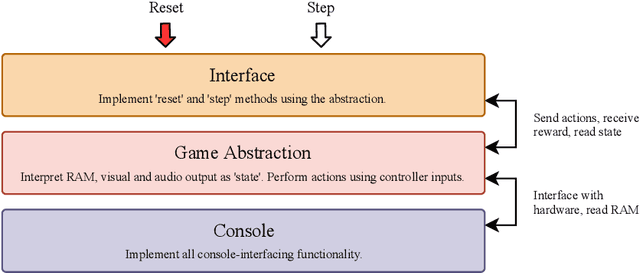

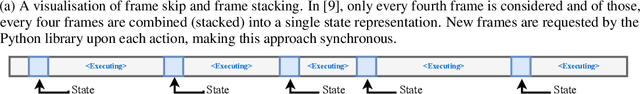

Abstract: We propose a new benchmark environment for evaluating Reinforcement Learning (RL) algorithms: the PlayStation Learning Environment (PSXLE), a PlayStation emulator modified to expose a simple control API that enables rich game-state representations. We argue that the PlayStation serves as a suitable progression for agent evaluation and propose a framework for such an evaluation. We build an action-driven abstraction for a PlayStation game with support for the OpenAI Gym interface and demonstrate its use by running OpenAI Baselines.
