Carlos Lopez Leiva

The Importance of the Instantaneous Phase for classification using Convolutional Neural Networks

Jul 01, 2022

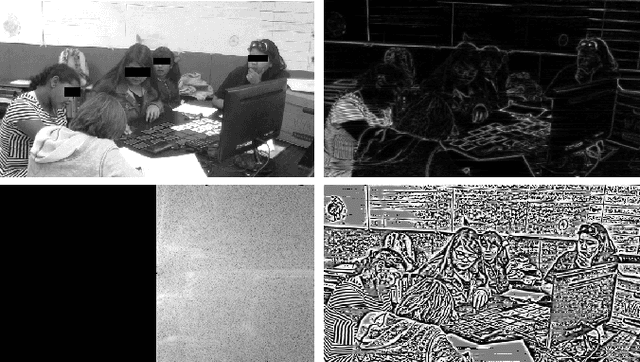

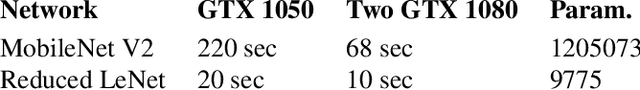

Abstract: Large-scale training of Convolutional Neural Networks (CNNs) is extremely demanding in terms of computational resources. For specific applications, the standard use of transfer learning also tends to require far more resources than needed. This work examines the impact of using AM-FM representations as input images for CNN classification. Combinations of AM-FM components and grayscale images were compared as inputs to reduced and complete networks. The results showed that only the phase component produced significant predictions within a simple network. Neither the instantaneous amplitude (IA) nor the grayscale image was able to induce any learning in the system. Furthermore, training with the FM input was 7x faster and used 123x fewer parameters than the state-of-the-art MobileNetV2 architecture, while maintaining comparable performance (AUC of 0.78 vs. 0.79).
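To make the AM-FM terminology concrete, here is a minimal sketch of how a signal can be split into an instantaneous amplitude (IA) and an instantaneous phase. It is not the paper's pipeline. It uses a 1-D signal and an FFT-based analytic signal for brevity, whereas the paper works with 2-D images and its demodulation method is not stated in the abstract. The function names, the test signal, and the choice of cos(phase) as the "FM" input are all assumptions made for illustration.

```python
import numpy as np

def analytic_signal(x):
    """Return the analytic signal of a real 1-D array, computed via the FFT."""
    n = x.size
    spectrum = np.fft.fft(x)
    # Keep DC (and Nyquist for even n), double positive frequencies,
    # and zero out negative frequencies.
    weights = np.zeros(n)
    weights[0] = 1.0
    if n % 2 == 0:
        weights[n // 2] = 1.0
        weights[1:n // 2] = 2.0
    else:
        weights[1:(n + 1) // 2] = 2.0
    return np.fft.ifft(spectrum * weights)

def am_fm_components(x):
    """Split x into instantaneous amplitude and instantaneous phase."""
    z = analytic_signal(x)
    ia = np.abs(z)      # instantaneous amplitude (the AM part)
    ip = np.angle(z)    # instantaneous phase, wrapped to (-pi, pi]
    return ia, ip

# Hypothetical test signal: constant amplitude 0.5, 16 cycles over 256 samples.
t = np.arange(256) / 256.0
signal = 0.5 * np.cos(2 * np.pi * 16 * t)

ia, ip = am_fm_components(signal)
# Assumed "FM" input: the amplitude-normalized carrier cos(phase).
fm_input = np.cos(ip)
```

For this test signal the IA is flat, so all of the structure lives in the phase. That is the intuition behind feeding a phase-only image to the network: it discards contrast and keeps the local oscillation pattern.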
