Carlos Henrique Grossi Ferreira

Computing the Shattering Coefficient of Supervised Learning Algorithms

May 14, 2018

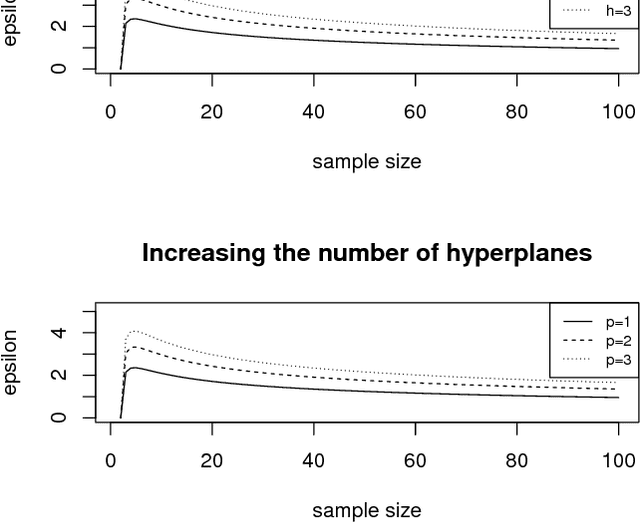

Abstract: Statistical Learning Theory (SLT) provides the theoretical guarantees for supervised machine learning based on the Empirical Risk Minimization Principle (ERMP). This principle defines an upper bound that ensures the uniform convergence of the empirical risk Remp(f), i.e., the error measured on a given data sample, to the expected risk R(f) (a.k.a. actual risk), which depends on the joint probability distribution P(X × Y) mapping input examples x in X to class labels y in Y. Uniform convergence is ensured only when the Shattering coefficient N(F,2n) grows polynomially. This paper proves the Shattering coefficient for any Hilbert space H containing the input space X and discusses its effects on the learning guarantees of supervised machine learning algorithms.
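
To make the role of the Shattering coefficient concrete, the uniform convergence bound can be sketched as follows. The abstract does not state the bound explicitly; the form below is the standard one from the SLT literature and is assumed here, including the constants 2 and 1/4, the tolerance ε > 0, and the degree d of the hypothetical polynomial bound.

```latex
% Standard uniform convergence bound (assumed form, not quoted from the paper):
% n is the sample size, F the space of admissible functions, \epsilon > 0.
P\left( \sup_{f \in F} \left| R_{emp}(f) - R(f) \right| > \epsilon \right)
  \;\le\; 2\, N(F, 2n)\, \exp\!\left( -\frac{n \epsilon^{2}}{4} \right)

% If the Shattering coefficient grows polynomially, e.g. N(F, 2n) \le (2n)^{d}
% for some fixed d (hypothetical), the right-hand side vanishes:
2\,(2n)^{d} \exp\!\left( -\frac{n \epsilon^{2}}{4} \right)
  = 2 \exp\!\left( d \ln(2n) - \frac{n \epsilon^{2}}{4} \right)
  \;\longrightarrow\; 0 \quad \text{as } n \to \infty

% If instead N(F, 2n) = 2^{2n} (every labeling of the 2n points is realizable),
% the exponent 2n \ln 2 - n \epsilon^{2}/4 is positive for small \epsilon,
% so the bound gives no guarantee.
```

Under these assumptions, the bound is informative exactly when ln N(F,2n) grows more slowly than n, which is why polynomial growth of the Shattering coefficient matters for the learning guarantee.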
