Carlos Aliaga

Neural Relighting with Subsurface Scattering by Learning the Radiance Transfer Gradient

Jun 15, 2023

Abstract: Reconstructing and relighting objects and scenes under varying lighting conditions is challenging: existing neural rendering methods often cannot handle the complex interactions between materials and light. Incorporating pre-computed radiance transfer techniques enables global illumination, but such approaches still struggle with materials that exhibit subsurface scattering. We propose a novel framework for learning the radiance transfer field via volume rendering and utilizing various appearance cues to refine geometry end-to-end. This framework extends relighting and reconstruction capabilities to handle a wider range of materials in a data-driven fashion. The resulting models produce plausible rendering results in existing and novel conditions. We will make our code and a novel light stage dataset of objects with subsurface scattering effects publicly available.
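For readers unfamiliar with pre-computed radiance transfer, the sketch below illustrates the general idea the abstract builds on: because light transport is linear in the incident lighting, relit radiance at a point can be written as a dot product between a per-point transfer vector and a vector of lighting coefficients. This is a minimal illustration of that linearity only, not the paper's learned radiance transfer field or its volume rendering; the function name `relight` and all numeric values are assumptions made up for the example.

```python
from typing import List, Sequence


def relight(transfer: Sequence[Sequence[float]], light: Sequence[float]) -> List[float]:
    """Return one outgoing radiance value per surface point.

    transfer[p][k] is the (hypothetical) response of point p to the k-th
    lighting basis function; light[k] is the coefficient of that basis.
    """
    if any(len(row) != len(light) for row in transfer):
        raise ValueError("each transfer vector must match the lighting length")
    # Linear light transport: radiance is a dot product per point.
    return [sum(t * l for t, l in zip(row, light)) for row in transfer]


# Two points and three basis lights; illustrative values, not measured data.
transfer = [
    [0.5, 0.25, 0.0],
    [0.0, 0.5, 1.0],
]
light_a = [1.0, 0.0, 0.0]
light_b = [0.0, 2.0, 1.0]

radiance_a = relight(transfer, light_a)
radiance_b = relight(transfer, light_b)

# Relighting under the sum of two environments equals the sum of the results.
combined = relight(transfer, [a + b for a, b in zip(light_a, light_b)])
```

The same structure applies to light stage captures, where each basis light corresponds to one physical light and a new environment is a weighted sum of the per-light responses.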

Estimation of Spectral Biophysical Skin Properties from Captured RGB Albedo

Jan 26, 2022

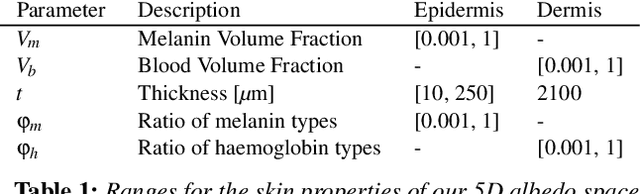

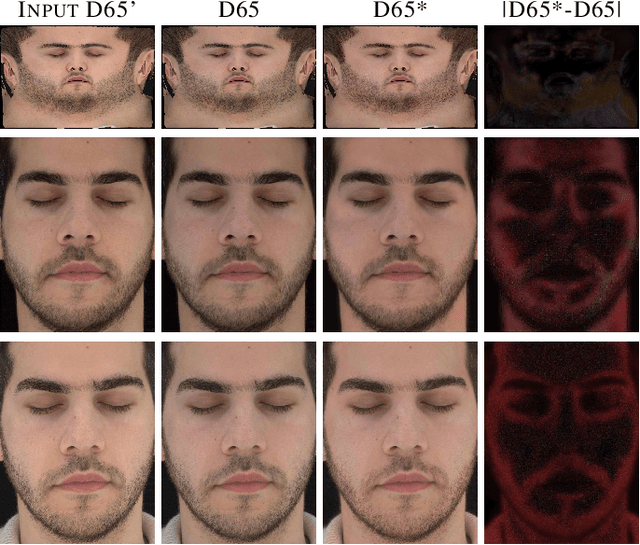

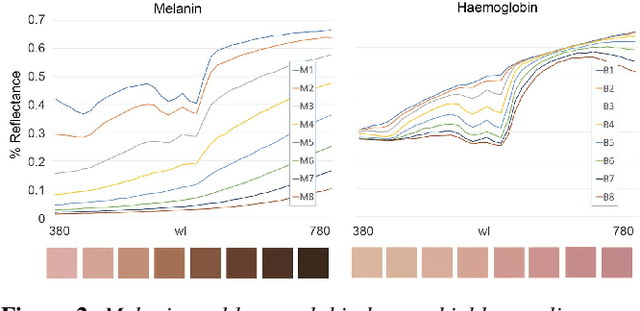

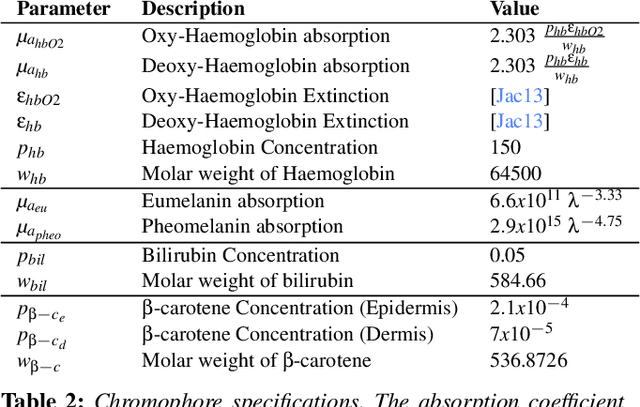

Abstract: We present a new method to reconstruct and manipulate the spectral properties of human skin from simple RGB albedo captures. To this end, we leverage Monte Carlo light simulation over an accurate biophysical human skin layering model parameterized by its most important components, thereby covering a plausible range of colors. The practical complexity of the model allows us to learn the inverse mapping from any albedo to its most probable associated skin properties. Our technique can faithfully reproduce any skin type, and is expressive enough to automatically handle more challenging areas such as the lips or imperfections in the face. Thanks to the smoothness of the recovered skin parameter maps, the albedo can be robustly edited through meaningful biophysical properties.
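The forward-then-invert workflow described in the abstract can be sketched in miniature: sample a forward model over its parameters to get albedos, then map a given albedo back to the parameters that best explain it. The code below is only a toy under loud assumptions. `toy_forward` is an invented exponential-attenuation function standing in for the Monte Carlo simulation, the two parameters (`melanin`, `hemoglobin`) and their absorption weights are hypothetical, and a nearest-neighbor table lookup replaces the learned inverse mapping.

```python
import math
from typing import List, Tuple

RGB = Tuple[float, float, float]
Params = Tuple[float, float]

# Made-up per-channel absorption weights, NOT measured skin data.
MELANIN_ABS = (0.6, 0.9, 1.2)
HEMOGLOBIN_ABS = (0.1, 0.8, 0.5)


def toy_forward(melanin: float, hemoglobin: float) -> RGB:
    """Toy stand-in for the light simulation: parameters -> RGB albedo."""
    r, g, b = (
        math.exp(-(melanin * m + hemoglobin * h))
        for m, h in zip(MELANIN_ABS, HEMOGLOBIN_ABS)
    )
    return (r, g, b)


def build_table(steps: int = 21) -> List[Tuple[Params, RGB]]:
    """Sample the forward model on a regular grid over [0, 1] x [0, 1]."""
    grid = [i / (steps - 1) for i in range(steps)]
    return [((m, h), toy_forward(m, h)) for m in grid for h in grid]


def invert(albedo: RGB, table: List[Tuple[Params, RGB]]) -> Params:
    """Return the sampled parameters whose albedo is closest to the input."""
    def squared_distance(entry: Tuple[Params, RGB]) -> float:
        return sum((a - b) ** 2 for a, b in zip(albedo, entry[1]))
    return min(table, key=squared_distance)[0]


table = build_table()
recovered = invert(toy_forward(0.3, 0.55), table)
```

Because the input albedo here is generated from parameters that lie on the sampling grid, the lookup recovers them; for arbitrary albedos the result is only the closest sampled candidate, which is one reason a learned, smooth inverse mapping is preferable to a table.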
