Cameron Kyle-Davidson

Generating Memorable Images Based on Human Visual Memory Schemas

May 06, 2020

Abstract: This research study proposes using Generative Adversarial Networks (GANs) that incorporate a two-dimensional measure of human memorability to generate memorable or non-memorable images of scenes. The memorability of the generated images is evaluated by modelling Visual Memory Schemas (VMS), which correspond to the mental representations that human observers use to encode an image into memory. The VMS model is based on the results of memory experiments conducted with human observers, and provides a 2D map of memorability. We impose a memorability constraint on the latent space of a GAN by employing a VMS map prediction model as an auxiliary loss. We assess the difference in memorability between images generated to be memorable and those generated to be non-memorable through an independent computational measure of memorability, and additionally assess the effect of memorability on the realness of the generated images.

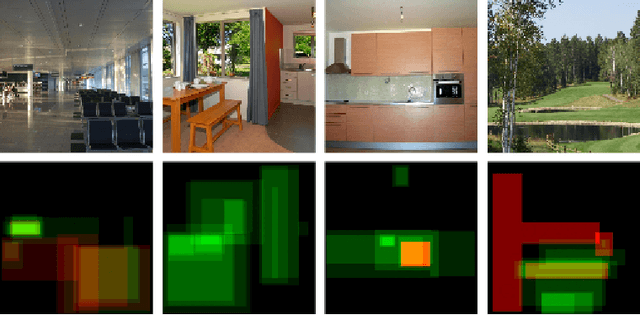

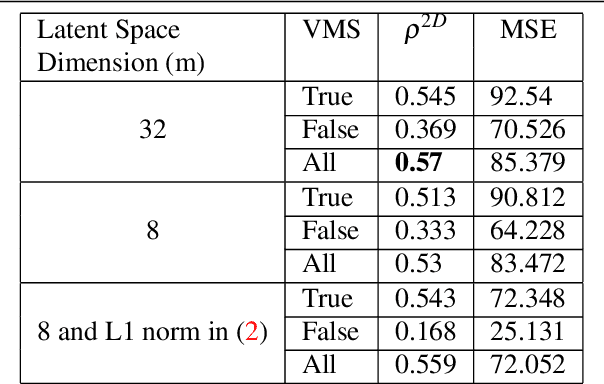

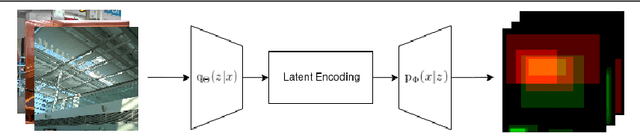

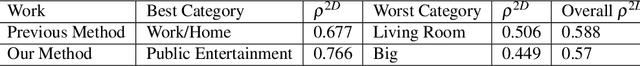

Predicting Visual Memory Schemas with Variational Autoencoders

Jul 19, 2019

Abstract: Visual memory schema (VMS) maps show which regions of an image cause that image to be remembered or falsely remembered. Previous work has succeeded in generating low-resolution VMS maps using convolutional neural networks. We instead approach this problem as an image-to-image translation task, making use of a variational autoencoder. This approach allows us to generate higher-resolution, dual-channel images that represent visual memory schemas, so that predicted true memorability and predicted false memorability can be evaluated separately. We also evaluate the relationship between VMS maps, predicted VMS maps, ground truth memorability scores, and predicted memorability scores.
