C. Patrick Yue

FPGA-based Acceleration of Neural Network for Image Classification using Vitis AI

Dec 30, 2024

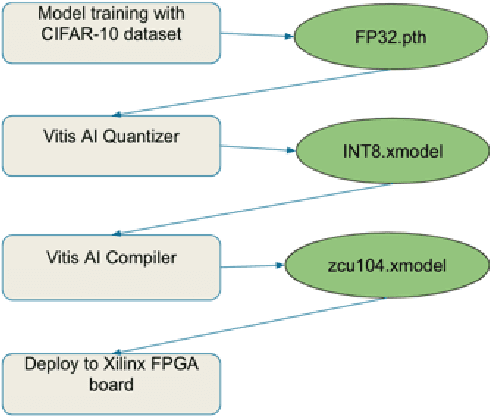

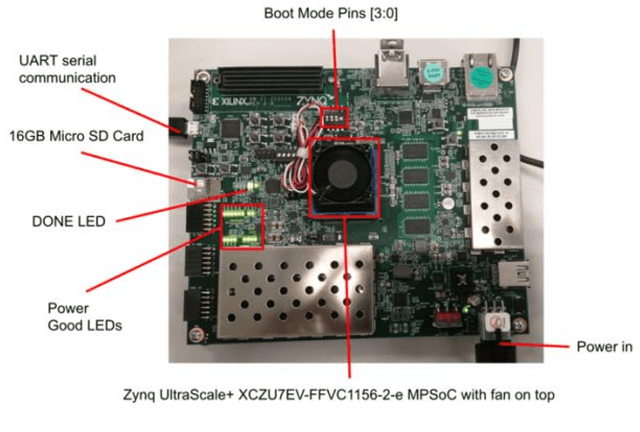

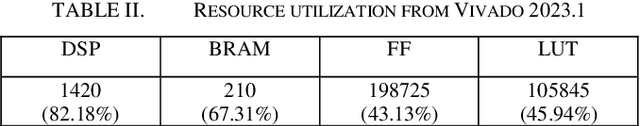

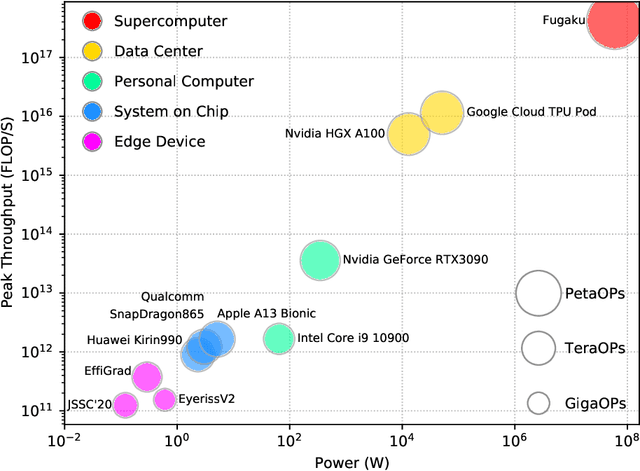

Abstract:In recent years, Convolutional Neural Networks (CNNs) have been widely adopted in computer vision. Complex CNN architecture running on CPU or GPU has either insufficient throughput or prohibitive power consumption. Hence, there is a need to have dedicated hardware to accelerate the computation workload to solve these limitations. In this paper, we accelerate a CNN for image classification with the CIFAR-10 dataset using Vitis-AI on Xilinx Zynq UltraScale+ MPSoC ZCU104 FPGA evaluation board. The work achieves 3.33-5.82x higher throughput and 3.39-6.30x higher energy efficiency than CPU and GPU baselines. It shows the potential to extract 2D features for downstream tasks, such as depth estimation and 3D reconstruction.

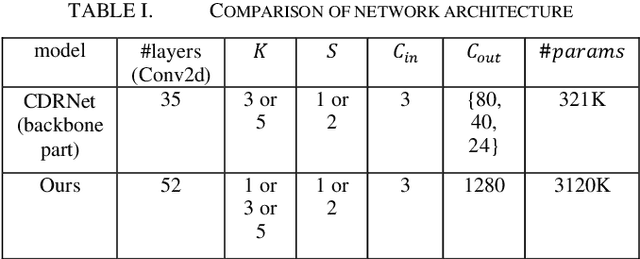

Cross-Dimensional Refined Learning for Real-Time 3D Visual Perception from Monocular Video

Mar 16, 2023Abstract:We present a novel real-time capable learning method that jointly perceives a 3D scene's geometry structure and semantic labels. Recent approaches to real-time 3D scene reconstruction mostly adopt a volumetric scheme, where a truncated signed distance function (TSDF) is directly regressed. However, these volumetric approaches tend to focus on the global coherence of their reconstructions, which leads to a lack of local geometrical detail. To overcome this issue, we propose to leverage the latent geometrical prior knowledge in 2D image features by explicit depth prediction and anchored feature generation, to refine the occupancy learning in TSDF volume. Besides, we find that this cross-dimensional feature refinement methodology can also be adopted for the semantic segmentation task. Hence, we proposed an end-to-end cross-dimensional refinement neural network (CDRNet) to extract both 3D mesh and 3D semantic labeling in real time. The experiment results show that the proposed method achieves state-of-the-art 3D perception efficiency on multiple datasets, which indicates the great potential of our method for industrial applications.

Efficient Training Convolutional Neural Networks on Edge Devices with Gradient-pruned Sign-symmetric Feedback Alignment

Mar 04, 2021

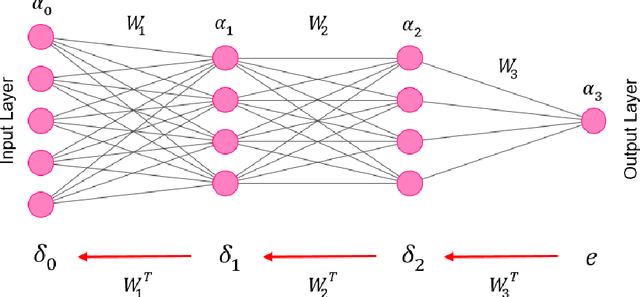

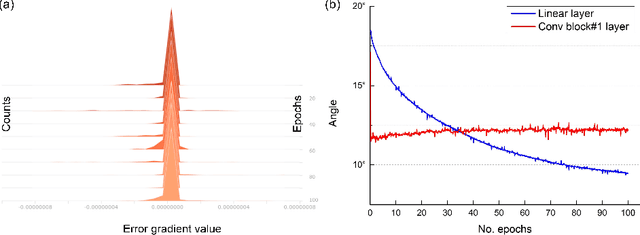

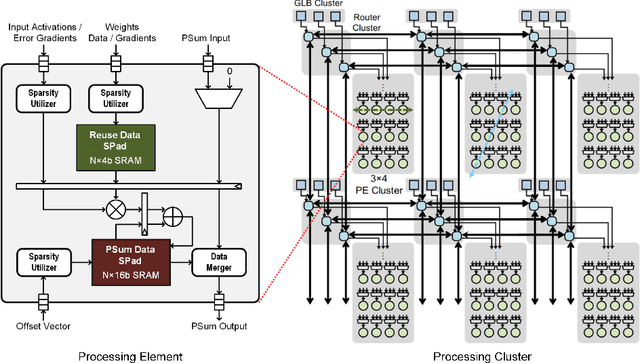

Abstract:With the prosperity of mobile devices, the distributed learning approach enabling model training with decentralized data has attracted wide research. However, the lack of training capability for edge devices significantly limits the energy efficiency of distributed learning in real life. This paper describes a novel approach of training DNNs exploiting the redundancy and the weight asymmetry potential of conventional backpropagation. We demonstrate that with negligible classification accuracy loss, the proposed approach outperforms the prior arts by 5x in terms of energy efficiency.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge