C. De Wagter

Autonomous drone race: A computationally efficient vision-based navigation and control strategy

Sep 16, 2018

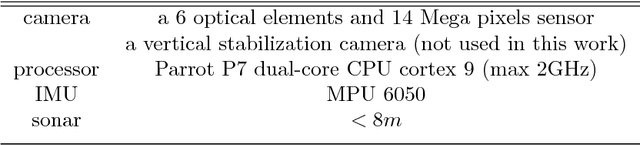

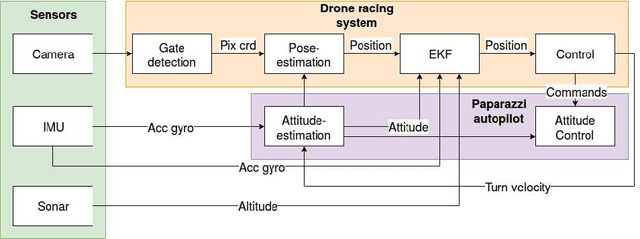

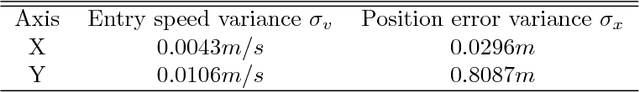

Abstract: Drone racing is becoming a popular sport in which human pilots fly their drones at high speed through complex environments, passing a number of gates in a predefined sequence. In this paper, we develop a system that lets drones race fully autonomously using only onboard resources. Commonly used visual navigation methods, such as simultaneous localization and mapping (SLAM) and visual-inertial odometry (VIO), are computationally expensive for micro aerial vehicles (MAVs). Instead, we developed the highly efficient snake gate detection algorithm for visual navigation, which detects the gate at 20 Hz on a Parrot Bebop drone. Using the gate detection result, we then developed a robust pose estimation algorithm that tolerates detection noise better than a state-of-the-art perspective-n-point (PnP) method. During the race, the gates are sometimes outside the drone's field of view. For this case, we developed a feed-forward control strategy based on state prediction that steers the drone toward the next gate. Experiments show that the drone can fly a half-circle with a 1.5 m radius within 2 seconds, ending with only 30 cm of error, without any position feedback. Finally, the whole system is tested in a complex environment (a showroom in the Faculty of Aerospace Engineering, TU Delft). The results show that the drone can complete a track of 15 gates at a speed of 1.5 m/s, which is faster than the speeds exhibited at the 2016 and 2017 IROS autonomous drone races.
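
The snake-search idea behind gate detection can be illustrated with a minimal Python sketch. It is not the paper's detector: it assumes, for illustration only, that the image has already been thresholded into a binary mask of gate-coloured pixels, that the gate appears as a roughly axis-aligned square outline, and that pixels are sampled at random. The function name `snake_gate_detect` and the parameters `n_samples`, `min_len` and `seed` are hypothetical. From a gate-coloured hit, the search "snakes" up and down to find a vertical bar, then left and right along its two ends to find the horizontal bars.

```python
import numpy as np


def snake_gate_detect(mask, n_samples=500, min_len=20, seed=None):
    """Return (left, top, right, bottom) of a candidate square gate, or None.

    mask: 2-D boolean array, True where a pixel has the (assumed) gate colour.
    n_samples, min_len, seed: hypothetical tuning parameters for this sketch.
    """
    rng = np.random.default_rng(seed)
    h, w = mask.shape
    for _ in range(n_samples):
        y, x = int(rng.integers(0, h)), int(rng.integers(0, w))
        if not mask[y, x]:
            continue  # sampled pixel is not gate-coloured
        # Snake up and down along column x to find the extent of a vertical bar.
        top, bottom = y, y
        while top > 0 and mask[top - 1, x]:
            top -= 1
        while bottom < h - 1 and mask[bottom + 1, x]:
            bottom += 1
        if bottom - top < min_len:
            continue  # too short: probably a horizontal bar or noise
        # Snake left and right along the top and bottom rows (horizontal bars).
        spans = []
        for row in (top, bottom):
            left, right = x, x
            while left > 0 and mask[row, left - 1]:
                left -= 1
            while right < w - 1 and mask[row, right + 1]:
                right += 1
            spans.append((left, right))
        if all(r - l >= min_len for l, r in spans):
            left = min(l for l, _ in spans)
            right = max(r for _, r in spans)
            return left, top, right, bottom
    return None


# Synthetic test image: a 2-pixel-thick square outline from (20, 20) to (79, 79).
mask = np.zeros((100, 100), dtype=bool)
mask[20:80, 20:80] = True
mask[22:78, 22:78] = False
print(snake_gate_detect(mask, n_samples=2000, seed=0))
```

Because the search only touches the sampled pixels and the runs along the bars rather than scanning the whole image, this style of detector is cheap, which fits the abstract's emphasis on onboard computational efficiency.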

Optical-Flow based Self-Supervised Learning of Obstacle Appearance applied to MAV Landing

Aug 17, 2017

Abstract: Monocular optical flow has been widely used to detect obstacles for Micro Air Vehicles (MAVs) during visual navigation. However, this approach requires significant movement, which reduces navigation efficiency and may even introduce risks in narrow spaces. In this paper, we introduce a novel self-supervised learning (SSL) setup in which optical flow cues serve as a scaffold for learning the visual appearance of obstacles in the environment. We apply it to a landing task, in which 'surface roughness' is first estimated from the optical flow field in order to detect obstacles. Subsequently, a linear regression function is learned that maps appearance features, represented as texton distributions, to the roughness estimate. After learning, the MAV can detect obstacles by analyzing a single still image, which allows it to search for a landing spot without moving. We first demonstrate the principle in offline tests on images captured by an onboard camera, and then in flight. Although surface roughness is a property of the entire flow field in the global image, the learned appearance model even allows pixel-wise segmentation of obstacles.
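
The appearance-learning step can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not the paper's implementation: it assumes grayscale images, a texton dictionary learned beforehand (for example by k-means clustering of small patches), texton distributions built by assigning randomly sampled patches to their nearest texton, and an ordinary least-squares fit. The function names, patch size and sample count are hypothetical, and the roughness targets in the usage lines are synthetic stand-ins for the optical-flow-based estimates described in the abstract.

```python
import numpy as np


def texton_histogram(image, textons, patch=5, n_patches=200, seed=None):
    """Normalised histogram of nearest-texton assignments over random patches.

    image:   2-D grayscale array.
    textons: (K, patch*patch) array of texton "words", assumed to have been
             learned beforehand (e.g. by k-means on image patches).
    """
    rng = np.random.default_rng(seed)
    h, w = image.shape
    ys = rng.integers(0, h - patch + 1, n_patches)
    xs = rng.integers(0, w - patch + 1, n_patches)
    patches = np.stack([image[y:y + patch, x:x + patch].ravel()
                        for y, x in zip(ys, xs)])
    # Squared Euclidean distance from every patch to every texton: (n_patches, K).
    dist = ((patches[:, None, :] - textons[None, :, :]) ** 2).sum(axis=2)
    counts = np.bincount(dist.argmin(axis=1), minlength=len(textons))
    return counts / counts.sum()


def fit_roughness_regressor(histograms, roughness):
    """Least-squares weights w such that histograms @ w approximates roughness.

    No separate intercept is needed: each histogram sums to 1, so a constant
    offset b is absorbed by adding b to every weight.
    """
    w, *_ = np.linalg.lstsq(histograms, roughness, rcond=None)
    return w


# Toy usage: two textons (flat patch, bright patch) and a flat image.
textons = np.stack([np.zeros(25), np.ones(25)])
print(texton_histogram(np.zeros((32, 32)), textons, seed=0))  # [1. 0.]

# Fit on synthetic histograms and synthetic "roughness" targets.
rng = np.random.default_rng(1)
H = rng.dirichlet(np.ones(4), size=30)
y = H @ np.array([0.1, 0.5, 0.9, 0.3]) + 0.2
w = fit_roughness_regressor(H, y)
print(np.allclose(H @ w, y))  # True
```

Once `w` is learned, estimating roughness for a new view only needs `texton_histogram(image, textons) @ w`, that is, a single still image, which is what allows the landing spot to be assessed without the MAV moving.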