H. W. Ho

Optical-Flow based Self-Supervised Learning of Obstacle Appearance applied to MAV Landing

Aug 17, 2017

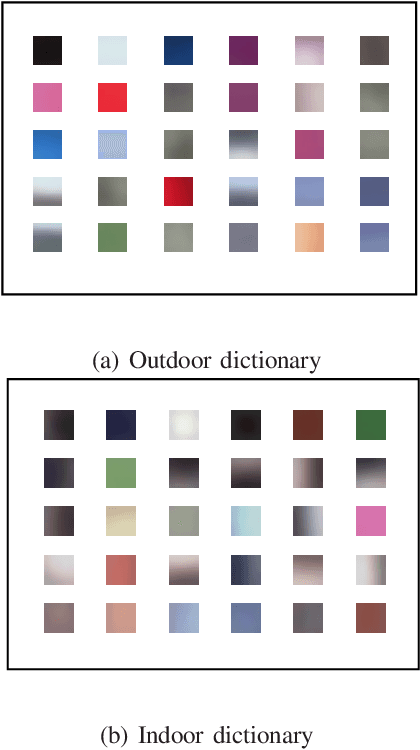

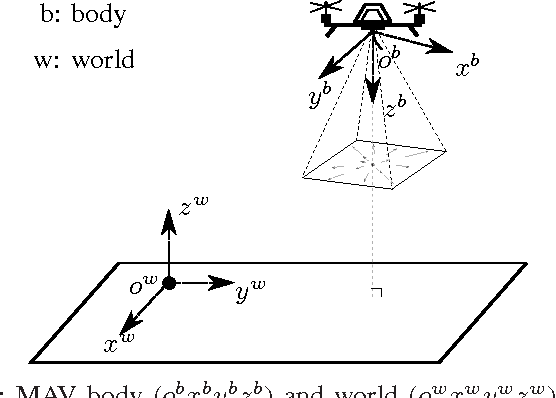

Abstract: Monocular optical flow has been widely used for obstacle detection by Micro Air Vehicles (MAVs) during visual navigation. However, this approach requires significant movement, which reduces the efficiency of navigation and may even introduce risks in narrow spaces. In this paper, we introduce a novel setup of self-supervised learning (SSL), in which optical flow cues serve as a scaffold to learn the visual appearance of obstacles in the environment. We apply it to a landing task, in which 'surface roughness' is initially estimated from the optical flow field in order to detect obstacles. Subsequently, a linear regression function is learned that maps appearance features, represented by texton distributions, to the roughness estimate. After learning, the MAV can detect obstacles by analyzing a single still image, which allows it to search for a landing spot without moving. We first demonstrate that this principle works in offline tests on images captured by an on-board camera, and then demonstrate it in flight. Although surface roughness is a property of the flow field over the entire image, the appearance learning even allows for pixel-wise segmentation of obstacles.
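The learning step described in this abstract, a linear map from texton distributions to a flow-based roughness estimate, can be illustrated with a minimal sketch. Everything below is an assumption made for illustration: the function names, the five-bin histograms, and the synthetic data are hypothetical and are not taken from the paper, which may fit the regression differently.

```python
import numpy as np

def fit_roughness_regressor(texton_histograms, roughness):
    """Least-squares fit of roughness ~ w . histogram + b (hypothetical helper)."""
    X = np.asarray(texton_histograms, dtype=float)
    y = np.asarray(roughness, dtype=float)
    # Append a constant column so the last weight acts as the bias term.
    A = np.hstack([X, np.ones((X.shape[0], 1))])
    # lstsq returns the minimum-norm solution, which copes with the
    # collinearity caused by histogram rows that sum to one.
    weights, *_ = np.linalg.lstsq(A, y, rcond=None)
    return weights

def predict_roughness(weights, texton_histogram):
    """Roughness estimate for one still image, given its texton histogram."""
    h = np.asarray(texton_histogram, dtype=float)
    return float(h @ weights[:-1] + weights[-1])

# Synthetic stand-in for the self-supervised data set: in flight, the
# targets would come from the optical-flow roughness estimate.
rng = np.random.default_rng(0)
histograms = rng.dirichlet(np.ones(5), size=50)   # 50 images, 5 textons
true_w = np.array([0.0, 0.2, 1.5, 0.1, 0.4])      # made-up ground truth
roughness = histograms @ true_w

weights = fit_roughness_regressor(histograms, roughness)

# After training, a single still image is enough to estimate roughness.
new_hist = rng.dirichlet(np.ones(5))
estimate = predict_roughness(weights, new_hist)
```

In this sketch the optical flow acts only as the supervisor during training; prediction needs nothing but the appearance histogram, which is what lets the vehicle evaluate a landing spot without moving.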

Adaptive Control Strategy for Constant Optical Flow Divergence Landing

Sep 21, 2016

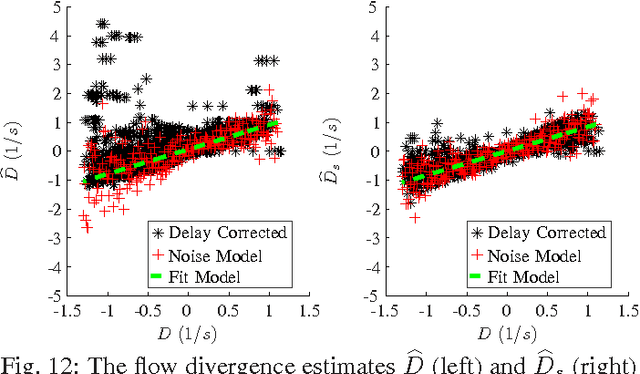

Abstract: Bio-inspired methods can provide efficient solutions to perform autonomous landing for Micro Air Vehicles (MAVs). Flying insects such as honeybees perform vertical landings by keeping flow divergence constant. This leads to an exponential decay of both height and vertical velocity, and allows for smooth and safe landings. However, the presence of noise and delay in obtaining flow divergence estimates will cause instability of the landing when the control gains are not adapted to the height. In this paper, we propose a strategy that deals with this fundamental problem of optical flow control. The key to the strategy lies in the use of a recent theory that allows the MAV to see distance by means of its control instability. At the start of a landing, the MAV detects the height by means of an oscillating movement and sets the control gains accordingly. Then, during descent, the gains are reduced exponentially, with mechanisms in place to reduce or increase the gains if the actual trajectory deviates too much from an ideal constant divergence landing. Real-world experiments demonstrate stable landings of the MAV in both indoor and windy outdoor environments.
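The exponential decay mentioned in this abstract follows from a short derivation. The symbols and the sign convention below are assumptions made for illustration (height $z > 0$, vertical velocity $v_z = \dot{z}$, divergence taken as $D = v_z / z$, so $D < 0$ during descent); the paper may define them differently.

$$
D = \frac{v_z}{z} = -c \quad (c > 0)
\;\;\Longrightarrow\;\;
\dot{z} = -c\,z
\;\;\Longrightarrow\;\;
z(t) = z_0\,e^{-ct}, \qquad v_z(t) = -c\,z_0\,e^{-ct}.
$$

Both height and vertical velocity therefore shrink at the same exponential rate, so the vehicle approaches the ground ever more slowly. Under the same assumed definition, $\partial D / \partial v_z = 1/z$: a given change in velocity produces a larger change in divergence at lower height, which is one way to see why a fixed control gain becomes too aggressive near the ground and why the gains need to be reduced as the height decreases.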
