Bumuthu Dilshan

Realistic, Animatable Human Reconstructions for Virtual Fit-On

Oct 16, 2022

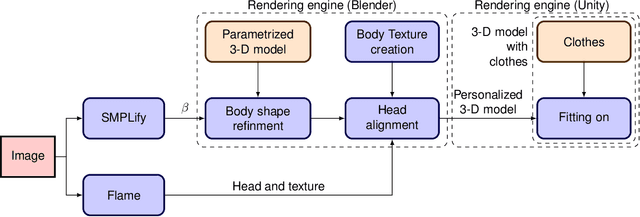

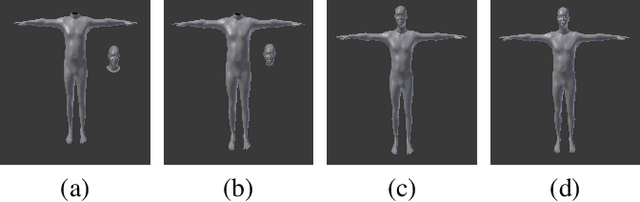

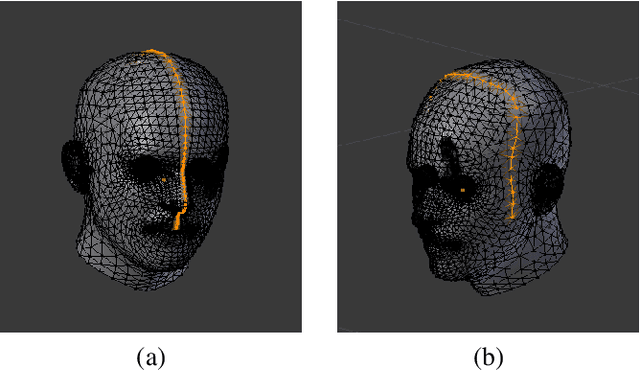

Abstract: We present an end-to-end virtual try-on pipeline that can fit different clothes on a personalized 3-D human model reconstructed from a single RGB image. Our main idea is to construct an animatable 3-D human model and try on different clothes in a 3-D virtual environment. Existing frame-by-frame volumetric reconstructions of 3-D human models are highly resource-demanding and do not allow clothes switching. Moreover, existing virtual fit-on systems lack realism, because either the human body or the clothing model is 2-D, or because the dressed model does not carry the user's facial features. We solve these problems by manipulating a parametric representation of the 3-D human body model and stitching on a head model reconstructed from the actual image. The fit of the 3-D clothing models on the parameterized human model also adjusts to the body shape in the input image. Our reconstruction results are more visually pleasing than those of recent existing work.
