Bruce Nguyen

A Comparative Study of Diabetes Prediction Based on Lifestyle Factors Using Machine Learning

Mar 06, 2025

Abstract: Diabetes is a prevalent chronic disease with significant health and economic burdens worldwide. Early prediction and diagnosis can aid in effective management and prevention of complications. This study explores the use of machine learning models to predict diabetes based on lifestyle factors using data from the Behavioral Risk Factor Surveillance System (BRFSS) 2015 survey. The dataset consists of 21 lifestyle and health-related features, capturing aspects such as physical activity, diet, mental health, and socioeconomic status. Three classification models, Decision Tree, K-Nearest Neighbors (KNN), and Logistic Regression, are implemented and evaluated to determine their predictive performance. The models are trained and tested using a balanced dataset, and their performance is assessed based on accuracy, precision, recall, and F1-score. The results indicate that the Decision Tree, KNN, and Logistic Regression models achieve accuracies of 0.74, 0.72, and 0.75, respectively, with varying strengths in precision and recall. The findings highlight the potential of machine learning in diabetes prediction and suggest future improvements through feature selection and ensemble learning techniques.
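The four metrics named in the abstract can be made concrete with a small worked example. The sketch below is not taken from the paper: the function name `classification_metrics` and the toy labels are assumptions chosen for illustration, and the numbers are not BRFSS results. It shows how accuracy, precision, recall, and F1-score follow from the confusion counts of a binary classifier.

```python
def classification_metrics(y_true, y_pred, positive=1):
    """Compute accuracy, precision, recall and F1 for a binary task.

    Hypothetical helper for illustration; not the paper's code.
    """
    pairs = list(zip(y_true, y_pred))
    # Confusion counts with respect to the positive class.
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    tn = len(pairs) - tp - fp - fn

    accuracy = (tp + tn) / len(pairs) if pairs else 0.0
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    denom = precision + recall
    f1 = 2 * precision * recall / denom if denom else 0.0
    return {
        "accuracy": accuracy,
        "precision": precision,
        "recall": recall,
        "f1": f1,
    }


# Toy, balanced labels made up for this example (1 = diabetes, 0 = no diabetes).
y_true = [1, 1, 1, 1, 0, 0, 0, 0]
y_pred = [1, 1, 1, 0, 1, 0, 0, 0]
metrics = classification_metrics(y_true, y_pred)
print(metrics)
```

On a balanced dataset such as the one the abstract describes, accuracy is a reasonable headline number, but precision and recall still separate a model that over-predicts diabetes from one that misses cases, which is presumably why all four are reported.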

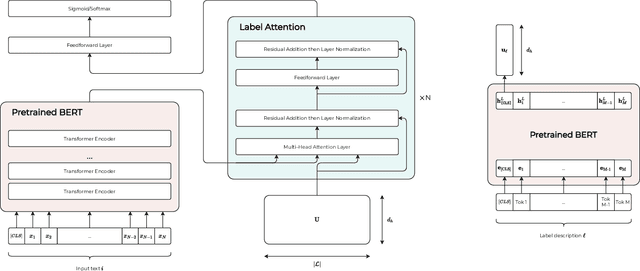

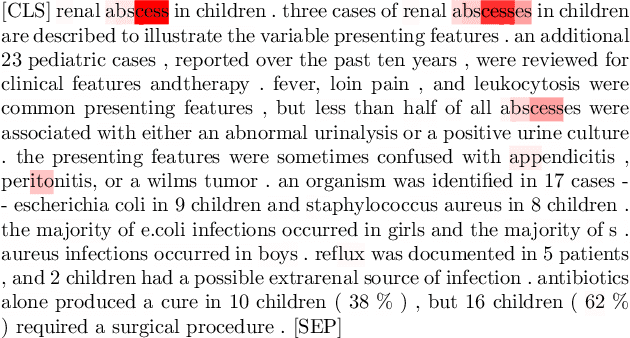

Fine-tuning Pretrained Language Models with Label Attention for Explainable Biomedical Text Classification

Aug 28, 2021

Abstract: The massive growth of digital biomedical data is making biomedical text indexing and classification increasingly important. Accordingly, previous research has devised numerous deep learning techniques focused on using feedforward, convolutional or recurrent neural architectures. More recently, fine-tuned transformer-based pretrained models (PTMs) have demonstrated superior performance compared to such models in many natural language processing tasks. However, the direct use of PTMs in the biomedical domain is limited to the target documents, ignoring the rich semantic information in the label descriptions. In this paper, we develop an improved label attention-based architecture to inject semantic label descriptions into the fine-tuning process of PTMs. Results on two public medical datasets show that the proposed fine-tuning scheme outperforms the conventionally fine-tuned PTMs and prior state-of-the-art models. Furthermore, an interpretability study shows that fine-tuning with the label attention mechanism is interpretable.
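The abstract does not spell out the architecture, so the following is only a generic sketch of dot-product label attention, not the paper's model. It assumes each token has a hidden-state vector (which in practice would come from the PTM) and each label has an embedding (which in practice would be derived from its description); here both are toy vectors, and the names `label_attention`, `token_states`, and `label_embeddings` are hypothetical. Each label attends over the tokens and produces its own label-specific document vector.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def dot(u, v):
    """Dot product of two equal-length vectors."""
    return sum(a * b for a, b in zip(u, v))


def label_attention(token_states, label_embeddings):
    """Generic dot-product label attention (illustrative assumption).

    Returns one attention distribution over tokens per label, plus one
    label-specific document vector per label (the weighted token average).
    """
    dim = len(token_states[0])
    weights, label_docs = [], []
    for label_vec in label_embeddings:
        # Score every token against this label, then normalise over tokens.
        alpha = softmax([dot(label_vec, h) for h in token_states])
        doc_vec = [
            sum(a * h[j] for a, h in zip(alpha, token_states))
            for j in range(dim)
        ]
        weights.append(alpha)
        label_docs.append(doc_vec)
    return weights, label_docs


# Toy inputs: three tokens and two labels in a 2-dimensional space.
token_states = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]
label_embeddings = [[2.0, 0.0], [0.0, 2.0]]
weights, label_docs = label_attention(token_states, label_embeddings)
print(weights)
```

Under this formulation, the per-label attention weights indicate which tokens contributed most to each label's representation, which is the kind of signal an interpretability study could inspect.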
