Brian Sifringer

Images in Discrete Choice Modeling: Addressing Data Isomorphism in Multi-Modality Inputs

Dec 22, 2023

Abstract: This paper explores the intersection of Discrete Choice Modeling (DCM) and machine learning, focusing on the integration of image data into DCM's utility functions and its impact on model interpretability. We investigate the consequences of embedding high-dimensional image data that shares isomorphic information with traditional tabular inputs within a DCM framework. Our study reveals that neural network (NN) components learn and replicate tabular variable representations from images when co-occurrences exist, thereby compromising the interpretability of DCM parameters. We propose and benchmark two methodologies to address this challenge: architectural design adjustments to segregate redundant information, and isomorphic information mitigation through source information masking and inpainting. Our experiments, conducted on a semi-synthetic dataset, demonstrate that while architectural modifications prove inconclusive, direct mitigation at the data source is a more effective strategy for maintaining the integrity of DCM's interpretable parameters. The paper concludes with insights into the applicability of our findings in real-world settings and discusses the implications for future research in hybrid modeling that combines complex data modalities. Full control of tabular and image data congruence is attained by using the MIT Moral Machine dataset, and both inputs are merged into a choice model by deploying the Learning Multinomial Logit (L-MNL) framework.
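The interpretability problem described above can be illustrated with a short identifiability argument. This is a simplified sketch in our own notation, not a derivation taken from the paper: $x_{in}$ are the tabular attributes of alternative $i$ for decision maker $n$, $z_n$ is the image, $r_i(\cdot)$ is the NN term in the utility, $\delta$ is an arbitrary vector, and $g_i$ is a hypothetical function through which the image encodes the tabular attributes.

```latex
\begin{aligned}
U_{in} &= \beta^\top x_{in} + r_i(z_n) + \varepsilon_{in} \\
x_{in} &= g_i(z_n) \quad \text{(isomorphic information)} \\
\tilde{\beta} &= \beta - \delta, \qquad \tilde{r}_i(z_n) = r_i(z_n) + \delta^\top g_i(z_n) \\
\tilde{\beta}^\top x_{in} + \tilde{r}_i(z_n) &= \beta^\top x_{in} - \delta^\top x_{in} + r_i(z_n) + \delta^\top x_{in} = \beta^\top x_{in} + r_i(z_n)
\end{aligned}
```

Both parameter sets give identical systematic utilities and therefore identical choice probabilities, so the likelihood cannot pin down $\beta$ when the network is flexible enough to represent $\delta^\top g_i(z_n)$. Masking or inpainting the image regions that carry $x_{in}$ aims to remove such a $g_i$.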

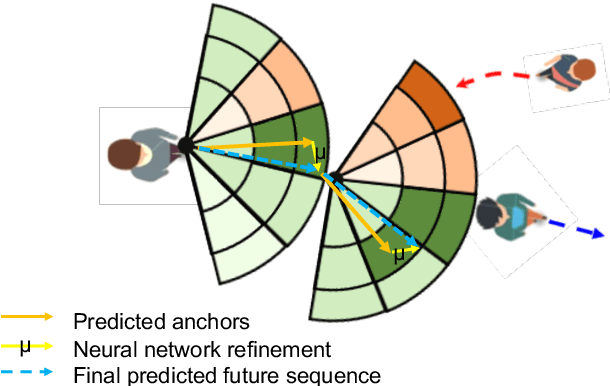

Interpretable Social Anchors for Human Trajectory Forecasting in Crowds

May 07, 2021

Abstract: Human trajectory forecasting in crowds is, at its core, a sequence prediction problem with the specific challenges of capturing inter-sequence dependencies (social interactions) and, consequently, predicting socially compliant multimodal distributions. In recent years, neural network-based methods have been shown to outperform hand-crafted methods on distance-based metrics. However, these data-driven methods still suffer from one crucial limitation: lack of interpretability. To overcome this limitation, we leverage the power of discrete choice models to learn interpretable rule-based intents, and subsequently utilise the expressibility of neural networks to model the scene-specific residual. Extensive experimentation on the interaction-centric benchmark TrajNet++ demonstrates that our proposed architecture can explain its predictions without compromising accuracy.

Let Me Not Lie: Learning MultiNomial Logit

Dec 23, 2018

Abstract: Discrete choice models generally assume that the model specification is known a priori. In practice, determining the utility specification for a particular application remains a difficult task, and model misspecification may lead to biased parameter estimates. In this paper, we propose a new mathematical framework for estimating choice models in which the systematic part of the utility specification is divided into an interpretable part and a learning representation part that aims at automatically discovering a good utility specification from available data. We show the effectiveness of our framework by augmenting the utility specification of the Multinomial Logit Model (MNL) with a new non-linear representation arising from a Neural Network (NN). This leads to a new choice model referred to as the Learning Multinomial Logit (L-MNL) model. Our experiments show that our L-MNL model outperformed the traditional MNL models and existing hybrid neural network models in terms of both predictive performance and accuracy in parameter estimation.
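The utility decomposition described above can be sketched in a few lines of NumPy. This is a minimal illustration under our own assumptions, not the authors' implementation: the names `lmnl_probabilities`, `mlp_residual` and `neg_log_likelihood`, the one-hidden-layer ReLU network, the array shapes, and the random parameters are all hypothetical. The interpretable term is linear in the features `x` with coefficients `beta`; the learned term `r(q)` comes from a small network applied to separate features `q` and is added to each alternative's utility before the softmax.

```python
import numpy as np


def softmax(v, axis=-1):
    """Numerically stable softmax along the alternatives axis."""
    z = v - v.max(axis=axis, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)


def mlp_residual(q, w1, b1, w2, b2):
    """Hypothetical one-hidden-layer network: extra features q -> one utility term per alternative."""
    h = np.maximum(0.0, q @ w1 + b1)  # ReLU hidden layer, shape (n_obs, n_hidden)
    return h @ w2 + b2                # shape (n_obs, n_alts)


def lmnl_probabilities(x, beta, q, nn_params):
    """Choice probabilities with utility = x . beta (interpretable) + r(q) (learned)."""
    # x: (n_obs, n_alts, n_vars), beta: (n_vars,) -> interpretable utilities (n_obs, n_alts)
    v_interp = x @ beta
    # q should hold features NOT already in x, otherwise r(q) can absorb part of beta
    r = mlp_residual(q, *nn_params)
    return softmax(v_interp + r)


def neg_log_likelihood(probs, choices):
    """Negative log-likelihood of observed choices given as integer alternative indices."""
    n = probs.shape[0]
    return -np.log(probs[np.arange(n), choices]).sum()


# Usage with small random (illustrative) data
rng = np.random.default_rng(0)
n_obs, n_alts, n_vars, n_q, n_hidden = 5, 3, 2, 4, 8
x = rng.normal(size=(n_obs, n_alts, n_vars))
q = rng.normal(size=(n_obs, n_q))
beta = np.array([-1.0, 0.5])
nn_params = (rng.normal(size=(n_q, n_hidden)), np.zeros(n_hidden),
             rng.normal(size=(n_hidden, n_alts)), np.zeros(n_alts))

probs = lmnl_probabilities(x, beta, q, nn_params)
choices = rng.integers(0, n_alts, size=n_obs)
print(probs.sum(axis=1))
print(neg_log_likelihood(probs, choices))
```

With all network weights set to zero, the learned term vanishes and the model reduces to a plain MNL with utilities `x @ beta`. In an actual estimation, `beta` and the network weights would be fitted jointly by minimizing the negative log-likelihood with a gradient-based optimizer, which this sketch omits.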
