Brian Lu

Generalization of RLVR Using Causal Reasoning as a Testbed

Dec 23, 2025

Abstract:Reinforcement learning with verifiable rewards (RLVR) has emerged as a promising paradigm for post-training large language models (LLMs) on complex reasoning tasks. Yet, the conditions under which RLVR yields robust generalization remain poorly understood. This paper provides an empirical study of RLVR generalization in the setting of probabilistic inference over causal graphical models. This setting offers two natural axes along which to examine generalization: (i) the level of the probabilistic query -- associational, interventional, or counterfactual -- and (ii) the structural complexity of the query, measured by the size of its relevant subgraph. We construct datasets of causal graphs and queries spanning these difficulty axes and fine-tune Qwen-2.5-Instruct models using RLVR or supervised fine-tuning (SFT). We vary both the model scale (3B-32B) and the query level included in training. We find that RLVR yields stronger within-level and across-level generalization than SFT, but only for specific combinations of model size and training query level. Further analysis shows that RLVR's effectiveness depends on the model's initial reasoning competence. With sufficient initial competence, RLVR improves an LLM's marginalization strategy and reduces errors in intermediate probability calculations, producing substantial accuracy gains, particularly on more complex queries. These findings show that RLVR can improve specific causal reasoning subskills, with its benefits emerging only when the model has sufficient initial competence.
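To make the distinction between query levels concrete, here is a minimal sketch that answers an associational query and an interventional query on the same graph by brute-force marginalization. The three-node graph (Z -> X, Z -> Y, X -> Y), the probability tables, and the function names are all hypothetical and chosen only for illustration; they are not taken from the paper's datasets, and counterfactual queries are omitted for brevity.

```python
# Hypothetical binary causal graph: Z -> X, Z -> Y, X -> Y.
# All numbers below are illustrative assumptions, not data from the paper.
P_Z = {0: 0.5, 1: 0.5}                      # P(Z=z)
P_X1_GIVEN_Z = {0: 0.2, 1: 0.8}             # P(X=1 | Z=z)
P_Y1_GIVEN_XZ = {(0, 0): 0.1, (0, 1): 0.5,  # P(Y=1 | X=x, Z=z), keyed by (x, z)
                 (1, 0): 0.4, (1, 1): 0.9}


def bern(p_one, value):
    """Probability of a binary value given the probability of 1."""
    return p_one if value == 1 else 1.0 - p_one


def joint(z, x, y, do_x=None):
    """Joint probability of (z, x, y).

    Under do(X=do_x), the factor P(X | Z) is replaced by an indicator,
    which cuts the edge Z -> X.
    """
    if do_x is None:
        p_x = bern(P_X1_GIVEN_Z[z], x)
    else:
        p_x = 1.0 if x == do_x else 0.0
    return P_Z[z] * p_x * bern(P_Y1_GIVEN_XZ[(x, z)], y)


def query(y, x, do=False):
    """P(Y=y | X=x) if do is False, else P(Y=y | do(X=x)); marginalizes over Z."""
    do_x = x if do else None
    numerator = sum(joint(z, x, y, do_x) for z in (0, 1))
    denominator = sum(joint(z, x, yy, do_x) for z in (0, 1) for yy in (0, 1))
    return numerator / denominator


associational = query(y=1, x=1)            # conditions on observing X=1
interventional = query(y=1, x=1, do=True)  # forces X=1, ignoring Z -> X
print(associational, interventional)
```

Because Z confounds X and Y in this toy graph, the two queries return different values (about 0.8 versus 0.65), which is why the query level matters even when the graph and variables are identical.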

SHACL-SKOS Based Knowledge Representation of Material Safety Data Sheet (SDS) for the Pharmaceutical Industry

Feb 11, 2025

Abstract:We report the development of a knowledge representation and reasoning (KRR) system built on hybrid SHACL-SKOS ontologies for globally harmonized system (GHS) material Safety Data Sheets (SDS) to enhance chemical safety communication and regulatory compliance. SDS are comprehensive documents containing safety and handling information for chemical substances. Thus, they are an essential part of workplace safety and risk management. However, the vast number of Safety Data Sheets from multiple organizations, manufacturers, and suppliers that produce and distribute chemicals makes it challenging to centralize and access SDS documents through a single repository. To address the underlying issues of data exchange related to chemical shipping and handling, we construct SDS-related controlled vocabulary and conditions validated by SHACL, and knowledge systems of similar domains linked via SKOS. The resulting hybrid ontologies aim to provide standardized yet adaptable representations of SDS information, facilitating better data sharing, retrieval, and integration across various platforms. This paper outlines our SHACL-SKOS system architectural design and showcases our implementation for an industrial application streamlining the generation of a composite shipping cover sheet.

Let's Think Var-by-Var: Large Language Models Enable Ad Hoc Probabilistic Reasoning

Dec 03, 2024

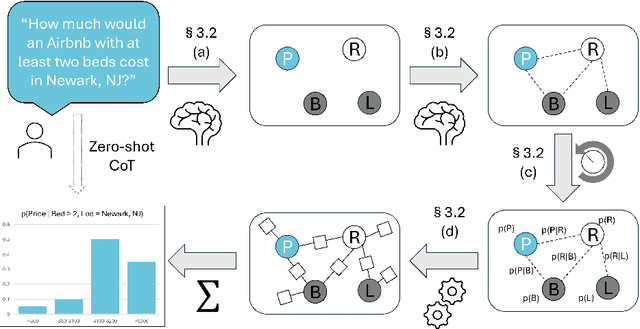

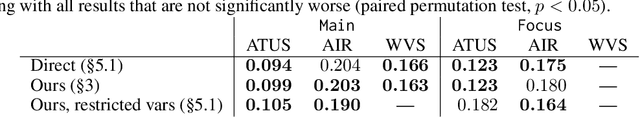

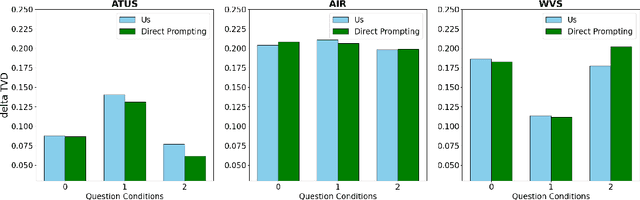

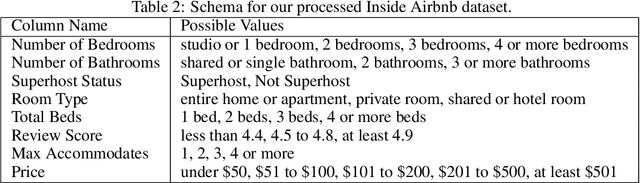

Abstract:A hallmark of intelligence is the ability to flesh out underspecified situations using "common sense." We propose to extract that common sense from large language models (LLMs), in a form that can feed into probabilistic inference. We focus our investigation on $\textit{guesstimation}$ questions such as "How much are Airbnb listings in Newark, NJ?" Formulating a sensible answer without access to data requires drawing on, and integrating, bits of common knowledge about how $\texttt{Price}$ and $\texttt{Location}$ may relate to other variables, such as $\texttt{Property Type}$. Our framework answers such a question by synthesizing an $\textit{ad hoc}$ probabilistic model. First we prompt an LLM to propose a set of random variables relevant to the question, followed by moment constraints on their joint distribution. We then optimize the joint distribution $p$ within a log-linear family to maximize the overall constraint satisfaction. Our experiments show that LLMs can successfully be prompted to propose reasonable variables, and while the proposed numerical constraints can be noisy, jointly optimizing for their satisfaction reconciles them. When evaluated on probabilistic questions derived from three real-world tabular datasets, we find that our framework performs comparably to a direct prompting baseline in terms of total variation distance from the dataset distribution, and is similarly robust to noise.
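The optimization step described above can be illustrated with a minimal sketch: fit a log-linear distribution over two binary variables so that its moments match a small set of target constraints. The abstract does not specify the objective or optimizer, so this uses plain gradient steps on the moment-matching objective as a stand-in. The variables, features, and target values are hypothetical (in the framework they would come from LLM proposals), and the targets here are chosen to be mutually consistent, whereas the paper's setting involves noisy constraints.

```python
import math
from itertools import product

# All joint assignments (x, y) of two hypothetical binary variables.
STATES = list(product((0, 1), repeat=2))

# Hypothetical moment constraints on E[x], E[y], and E[x*y].
TARGETS = (0.3, 0.6, 0.25)


def features(state):
    """Feature vector whose expectations are constrained."""
    x, y = state
    return (x, y, x * y)


def distribution(theta):
    """Log-linear distribution p(s) proportional to exp(theta . features(s))."""
    scores = [
        math.exp(sum(t * f for t, f in zip(theta, features(s))))
        for s in STATES
    ]
    normalizer = sum(scores)
    return [score / normalizer for score in scores]


def moments(p):
    """Expected value of each feature under p."""
    return tuple(
        sum(prob * features(s)[k] for prob, s in zip(p, STATES))
        for k in range(len(TARGETS))
    )


def fit(steps=5000, learning_rate=1.0):
    """Move each weight in the direction that shrinks its constraint violation."""
    theta = [0.0] * len(TARGETS)
    for _ in range(steps):
        current = moments(distribution(theta))
        theta = [
            t + learning_rate * (target - value)
            for t, target, value in zip(theta, TARGETS, current)
        ]
    return theta


theta = fit()
p = distribution(theta)
for state, prob in zip(STATES, p):
    print(state, round(prob, 4))
```

Once fitted, the joint distribution `p` can answer any query over the chosen variables by summing the relevant entries, which is what lets a handful of separately elicited constraints combine into a single coherent answer.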
