Branislav Popovic

Multi-Modal Concurrent Transmission

Feb 09, 2024

Abstract: This paper introduces a novel physical-layer method, called Multi-Modal Concurrent Transmission (MMCT), for efficient transmission of multiple data streams with different reliability-latency performance requirements. MMCT arranges data from multiple streams within the same physical-layer transport block, wherein stream-specific modulation and coding scheme (MCS) selection is combined with joint mapping of modulated codewords to Multiple-Input Multiple-Output (MIMO) spatial layers and frequency resources. Mapping to spatial-frequency resources with higher Signal-to-Noise Ratios (SNRs) provides the required performance boost for the more demanding streams. In tactile internet applications, wherein haptic feedback/actuation and audio-video streams flow in parallel, the method provides significant SNR and spectral efficiency enhancements compared to conventional 3GPP New Radio (NR) transmission methods.

* 6 pages, 4 figures, 1 table

Feedback is Good, Active Feedback is Better: Block Attention Active Feedback Codes

Nov 03, 2022

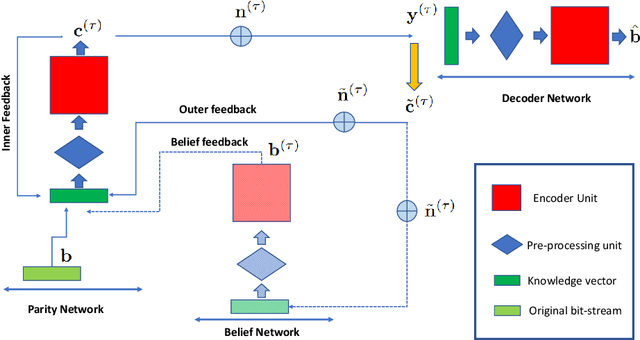

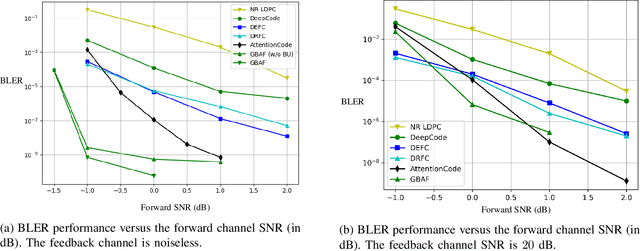

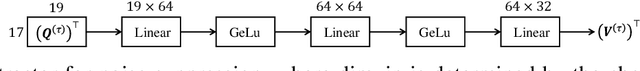

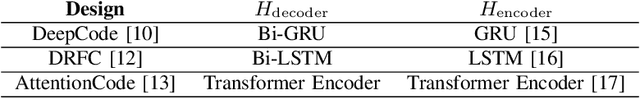

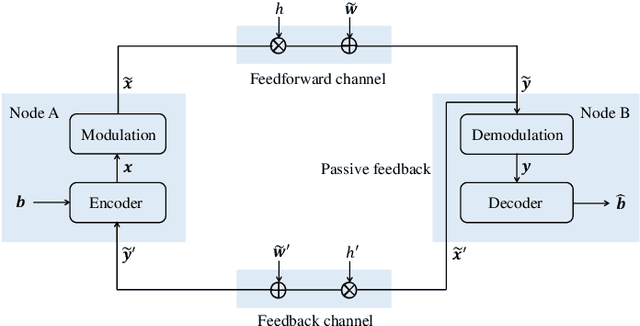

Abstract: Deep neural network (DNN)-assisted channel coding designs, such as low-complexity neural decoders for existing codes or end-to-end neural-network-based auto-encoder designs, have recently gained interest due to their improved performance and flexibility, particularly for communication scenarios in which high-performing structured code designs do not exist. Communication in the presence of feedback is one such scenario, and practical code design for feedback channels has remained an open challenge in coding theory for many decades. Recently, DNN-based designs have shown impressive results in exploiting feedback. In particular, generalized block attention feedback (GBAF) codes, which utilize the popular transformer architecture, achieved significant improvement in terms of block error rate (BLER) performance. However, previous works have focused mainly on passive feedback, where the transmitter observes a noisy version of the signal at the receiver. In this work, we show that GBAF codes can also be used for channels with active feedback. We implement a pair of transformer architectures, at the transmitter and the receiver, which interact with each other sequentially, and achieve a new state-of-the-art BLER performance, especially in the low-SNR regime.

All you need is feedback: Communication with block attention feedback codes

Jun 19, 2022

Abstract: Deep-learning-based channel code designs have recently gained interest as an alternative to conventional coding algorithms, particularly for channels for which existing codes do not provide effective solutions. Communication over a feedback channel is one such problem, for which promising results have recently been obtained by employing various deep learning architectures. In this paper, we introduce a novel learning-aided code design for feedback channels, called generalized block attention feedback (GBAF) codes, which (i) employs a modular architecture that can be implemented using different neural network architectures; (ii) provides order-of-magnitude improvements in the probability of error compared to existing designs; and (iii) can transmit at desired code rates.

AttentionCode: Ultra-Reliable Feedback Codes for Short-Packet Communications

May 30, 2022

Abstract: Ultra-reliable short-packet communication is a major challenge in future wireless networks with critical applications. To achieve ultra-reliable communications beyond 99.999%, this paper envisions a new interaction-based communication paradigm that exploits the feedback from the receiver for the sixth generation (6G) communication networks and beyond. We present AttentionCode, a new class of feedback codes leveraging deep learning (DL) technologies. The underpinnings of AttentionCode are three architectural innovations: AttentionNet, input restructuring, and adaptation to fading channels, accompanied by several training methods, including large-batch training, distributed learning, look-ahead optimizer, training-test signal-to-noise ratio (SNR) mismatch, and curriculum learning. The training methods can potentially be generalized to other wireless communication applications with machine learning. Numerical experiments verify that AttentionCode establishes a new state of the art among all DL-based feedback codes in both additive white Gaussian noise (AWGN) channels and fading channels. In AWGN channels with noiseless feedback, for example, AttentionCode achieves a block error rate (BLER) of $10^{-7}$ when the forward channel SNR is 0 dB for a block size of 50 bits, demonstrating the potential of AttentionCode to provide ultra-reliable short-packet communications for 6G.

DRF Codes: Deep SNR-Robust Feedback Codes

Dec 22, 2021

Abstract: We present a new deep-neural-network (DNN) based error correction code for fading channels with output feedback, called the deep SNR-robust feedback (DRF) code. At the encoder, parity symbols are generated by a long short-term memory (LSTM) network based on the message as well as the past forward channel outputs observed by the transmitter in a noisy fashion. The decoder uses a bi-directional LSTM architecture along with a signal-to-noise ratio (SNR)-aware attention NN to decode the message. The proposed code overcomes two major shortcomings of the previously proposed DNN-based codes over channels with passive output feedback: (i) the SNR-aware attention mechanism at the decoder enables reliable application of the same trained NN over a wide range of SNR values; (ii) curriculum training with batch-size scheduling is used to speed up and stabilize training while improving the SNR-robustness of the resulting code. We show that DRF codes significantly outperform the state of the art in terms of both SNR-robustness and error rate in additive white Gaussian noise (AWGN) channels with feedback. In fading channels with perfect phase compensation at the receiver, DRF codes learn to efficiently exploit knowledge of the instantaneous fading amplitude (which is available to the encoder through feedback) to reduce the overhead and complexity associated with channel estimation at the decoder. Finally, we show the effectiveness of DRF codes in multicast channels with feedback, where linear feedback codes are known to be strictly suboptimal.
