Brandon Gower-Winter

Performative Drift Resistant Classification Using Generative Domain Adversarial Networks

Apr 01, 2025

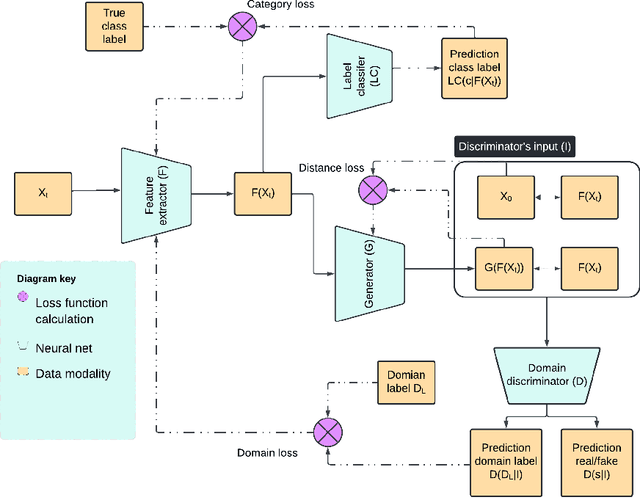

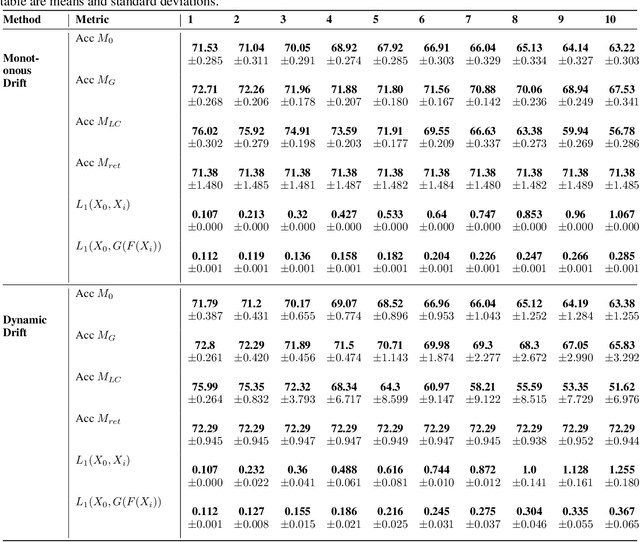

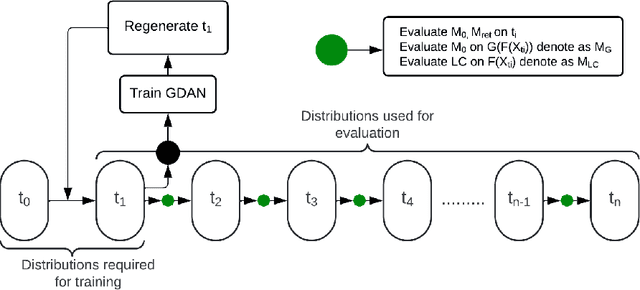

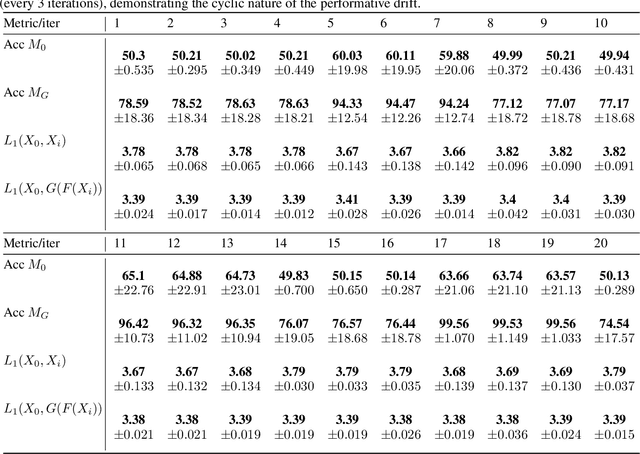

Abstract: Performative Drift is a special type of Concept Drift that occurs when a model's predictions influence the future instances the model will encounter. In these settings, retraining is not always feasible. In this work, we instead focus on drift understanding as a method for creating drift-resistant classifiers. To achieve this, we introduce the Generative Domain Adversarial Network (GDAN) which combines both Domain and Generative Adversarial Networks. Using GDAN, domain-invariant representations of incoming data are created and a generative network is used to reverse the effects of performative drift. Using semi-real and synthetic data generators, we empirically evaluate GDAN's ability to provide drift-resistant classification. Initial results are promising with GDAN limiting performance degradation over several timesteps. Additionally, GDAN's generative network can be used in tandem with other models to limit their performance degradation in the presence of performative drift. Lastly, we highlight the relationship between model retraining and the unpredictability of performative drift, providing deeper insights into the challenges faced when using traditional Concept Drift mitigation strategies in the performative setting.

Identifying Predictions That Influence the Future: Detecting Performative Concept Drift in Data Streams

Dec 13, 2024

Abstract: Concept Drift has been extensively studied within the context of Stream Learning. However, it is often assumed that the deployed model's predictions play no role in the concept drift the system experiences. Closer inspection reveals that this is not always the case. Automated trading might be prone to self-fulfilling feedback loops. Likewise, malicious entities might adapt to evade detectors in the adversarial setting resulting in a self-negating feedback loop that requires the deployed models to constantly retrain. Such settings where a model may induce concept drift are called performative. In this work, we investigate this phenomenon. Our contributions are as follows: First, we define performative drift within a stream learning setting and distinguish it from other causes of drift. We introduce a novel type of drift detection task, aimed at identifying potential performative concept drift in data streams. We propose a first such performative drift detection approach, called CheckerBoard Performative Drift Detection (CB-PDD). We apply CB-PDD to both synthetic and semi-synthetic datasets that exhibit varying degrees of self-fulfilling feedback loops. Results are positive with CB-PDD showing high efficacy, low false detection rates, resilience to intrinsic drift, comparability to other drift detection techniques, and an ability to effectively detect performative drift in semi-synthetic datasets. Secondly, we highlight the role intrinsic (traditional) drift plays in obfuscating performative drift and discuss the implications of these findings as well as the limitations of CB-PDD.
