Brandon B. G. Khoo

Transferable Class-Modelling for Decentralized Source Attribution of GAN-Generated Images

Mar 18, 2022

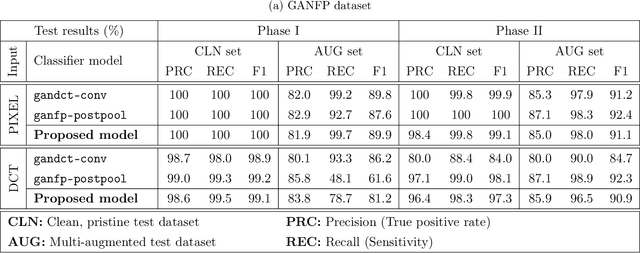

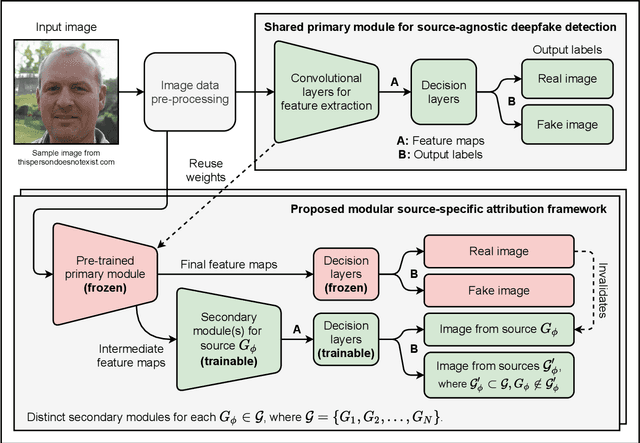

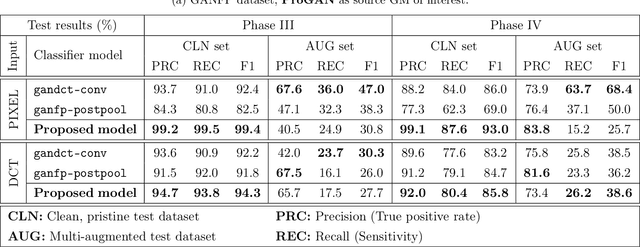

Abstract: GAN-generated deepfakes, as a genre of digital images, are gaining ground both as catalysts of artistic expression and as malicious forms of deception, and therefore demand systems to enforce and accredit their ethical use. Existing techniques for the source attribution of synthetic images identify subtle intrinsic fingerprints using multiclass classification neural networks, which are limited in functionality and scalability. We therefore redefine the deepfake detection and source attribution problems as a series of related binary classification tasks. We leverage transfer learning to rapidly adapt forgery detection networks to multiple independent attribution problems, and propose a semi-decentralized modular design to solve them simultaneously and efficiently. Class activation mapping is also demonstrated as an effective means of feature localization for model interpretation. Experiments show our models to be competitive with current benchmarks and capable of decent performance on human portraits in ideal conditions. Decentralized fingerprint-based attribution is found to retain validity in the presence of novel sources, but is more susceptible to type II errors that intensify with image perturbations and attributive uncertainty. We describe both our conceptual framework and model prototypes for further enhancement when investigating the technical limits of reactive deepfake attribution.
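
The reformulation of attribution as a series of independent binary tasks can be illustrated with a minimal sketch. This is not the author's implementation: the names `attribute`, `detectors`, and `threshold` are hypothetical, and the toy scoring functions stand in for forgery detection networks adapted by transfer learning. It only shows why a decentralized design can accept new sources without retraining existing detectors, and how an image from a novel source can be rejected by every detector.

```python
from typing import Callable, Dict, List, Sequence

# Hypothetical stand-ins: an "image" is a feature vector, and each detector
# is a function returning a score in [0, 1] for one candidate source.
Image = Sequence[float]
Scorer = Callable[[Image], float]


def attribute(image: Image, detectors: Dict[str, Scorer],
              threshold: float = 0.5) -> List[str]:
    """Run every per-source binary detector independently.

    Returns the sorted names of all sources whose detector accepts the image.
    An empty list means no known source claimed the image (possibly a novel
    source, or a missed detection, i.e. a type II error).
    """
    return sorted(
        name for name, scorer in detectors.items()
        if scorer(image) >= threshold
    )


# Toy detectors (assumption): each one reads a single feature as its score.
detectors: Dict[str, Scorer] = {
    "gan_a": lambda img: img[0],
    "gan_b": lambda img: img[1],
}

from_gan_a = [0.9, 0.1, 0.2]
from_unknown = [0.2, 0.1, 0.8]

print(attribute(from_gan_a, detectors))    # accepted by gan_a only
print(attribute(from_unknown, detectors))  # rejected by every detector

# Adding a source only adds one detector; existing ones are left untouched.
detectors["gan_c"] = lambda img: img[2]
print(attribute(from_unknown, detectors))  # now accepted by gan_c
```

In contrast to a single multiclass classifier, which must be retrained whenever the label set changes, each detector here is trained and thresholded on its own, at the cost of allowing zero or multiple sources to claim the same image.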
