Bolin Liu

Deep Learning with Inaccurate Training Data for Image Restoration

Nov 18, 2018

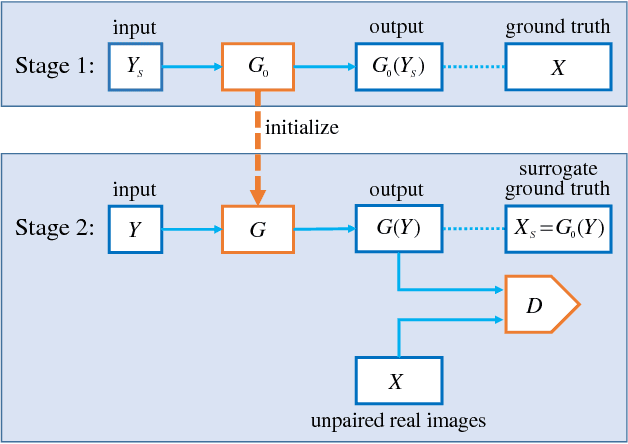

Abstract: In many applications of deep learning, particularly those in image restoration, it is very difficult, prohibitively expensive, or outright impossible to obtain paired training data that precisely match real-world conditions. In such cases, one is forced to use synthesized paired data to train the deep convolutional neural network (DCNN). However, due to the unavoidable generalization error in statistical learning, the synthetically trained DCNN often performs poorly on real-world data. To overcome this problem, we propose a new general training method that can compensate, to a large extent, for the generalization errors of synthetically trained DCNNs.

Demoiréing of Camera-Captured Screen Images Using Deep Convolutional Neural Network

Apr 11, 2018

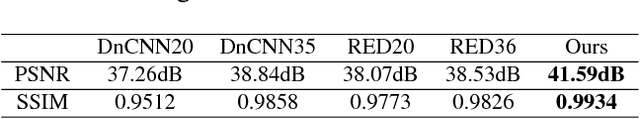

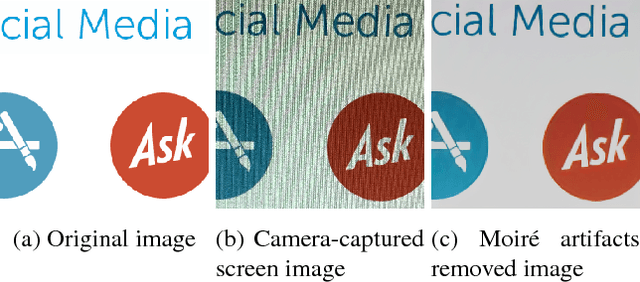

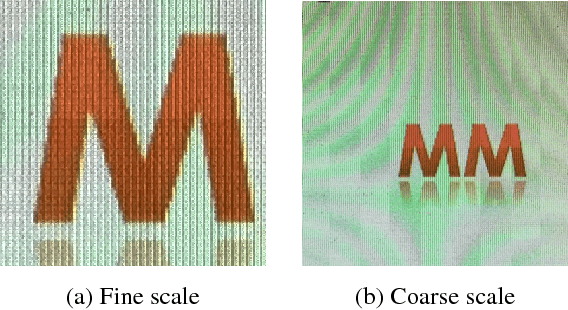

Abstract: Taking photos of optoelectronic displays is a direct and spontaneous way of transferring data and keeping records, and it is widely practiced. However, due to the analog signal interference between the pixel grids of the display screen and the camera sensor array, objectionable moiré (alias) patterns appear in captured screen images. As the moiré patterns are structured and highly variant, they are difficult to remove completely without affecting the underlying latent image. In this paper, we propose a deep convolutional neural network (DCNN) approach for demoiréing screen photos. The proposed DCNN consists of a coarse-scale network and a fine-scale network. In the coarse-scale network, the input image is first downsampled and then processed by stacked residual blocks to remove the moiré artifacts. After that, the fine-scale network upsamples the demoiréd low-resolution image back to the original resolution. Extensive experimental results have demonstrated that the proposed technique can efficiently remove the moiré patterns from camera-acquired screen images; the new technique outperforms the existing ones.

Fast Screening Algorithm for Rotation and Scale Invariant Template Matching

Jul 19, 2017

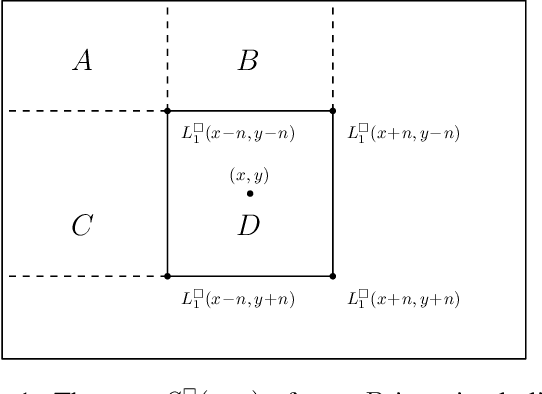

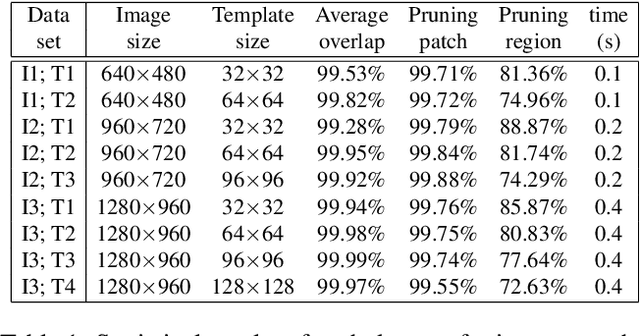

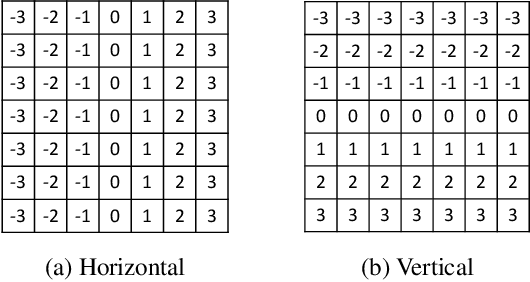

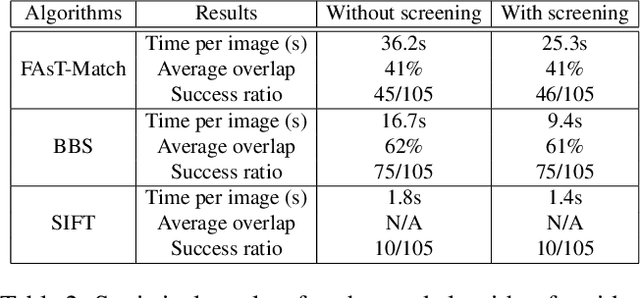

Abstract: This paper presents a generic pre-processor for expediting conventional template matching techniques. Instead of locating the patch in the reference image that best matches a query template via exhaustive search, the proposed algorithm rules out regions with no possible matches at minimal computational cost. Although it works on simple patch features, such as mean, variance and gradient, the fast pre-screening is highly discriminative. Its computational efficiency is gained by using a novel octagonal-star-shaped template and the inclusion-exclusion principle to extract and compare patch features. Moreover, it can handle arbitrary rotation and scaling of reference images effectively. Extensive experiments demonstrate that the proposed algorithm greatly reduces the search space while never missing the best match.
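
To illustrate the general idea of feature-based pre-screening with the inclusion-exclusion principle, here is a minimal Python sketch. It is not the paper's algorithm. It assumes square patches rather than the octagonal-star-shaped template, uses only mean and variance, handles no rotation or scaling, and relies on a hypothetical tolerance `tol` that does not guarantee the best match is retained. The function names are illustrative only.

```python
from typing import List, Tuple

Image = List[List[float]]


def integral_image(img: Image) -> Image:
    """Summed-area table with one extra row and column of zeros."""
    h, w = len(img), len(img[0])
    sat = [[0] * (w + 1) for _ in range(h + 1)]
    for y in range(h):
        row_sum = 0
        for x in range(w):
            row_sum += img[y][x]
            sat[y + 1][x + 1] = sat[y][x + 1] + row_sum
    return sat


def patch_sum(sat: Image, top: int, left: int, size: int) -> float:
    """Sum over a size x size patch using four lookups (inclusion-exclusion)."""
    bottom, right = top + size, left + size
    return sat[bottom][right] - sat[top][right] - sat[bottom][left] + sat[top][left]


def prescreen(img: Image, template: Image, tol: float) -> List[Tuple[int, int]]:
    """Return top-left corners whose patch mean and variance are within
    tol of the template's (assumed square template, assumed tolerance rule)."""
    size = len(template)
    n = size * size
    t_mean = sum(map(sum, template)) / n
    t_var = sum(v * v for row in template for v in row) / n - t_mean ** 2

    sat = integral_image(img)                                   # sums of values
    sat2 = integral_image([[v * v for v in row] for row in img])  # sums of squares

    survivors = []
    for top in range(len(img) - size + 1):
        for left in range(len(img[0]) - size + 1):
            mean = patch_sum(sat, top, left, size) / n
            var = patch_sum(sat2, top, left, size) / n - mean ** 2
            if abs(mean - t_mean) <= tol and abs(var - t_var) <= tol:
                survivors.append((top, left))
    return survivors
```

Only the surviving positions would then be passed to a conventional, more expensive template matcher. Each patch feature costs a constant number of table lookups regardless of patch size, which is what makes this kind of screening cheap.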
