Boglarka Ecsedi

Model merging with SVD to tie the Knots

Oct 25, 2024

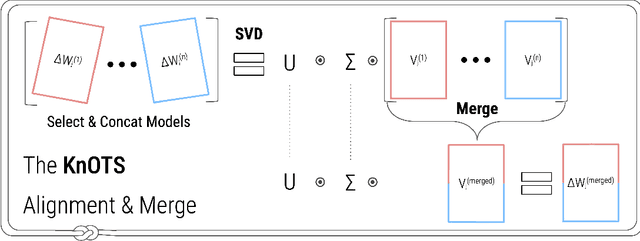

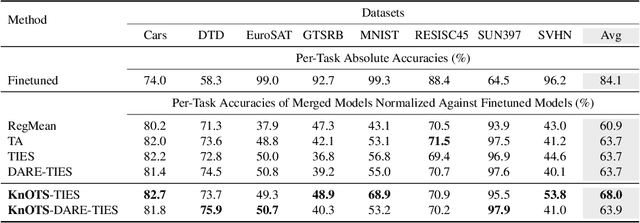

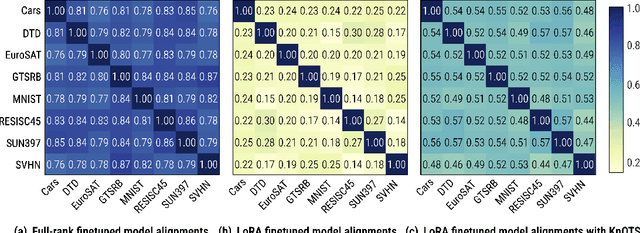

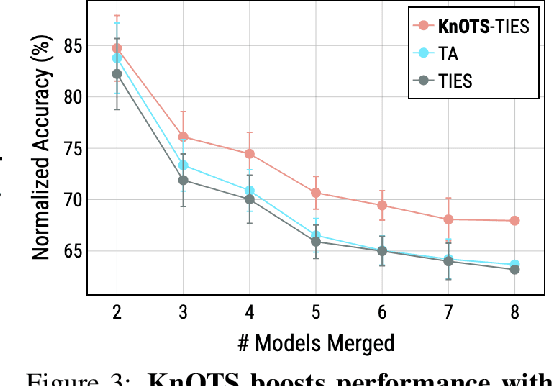

Abstract: Recent model merging methods demonstrate that the parameters of fully-finetuned models specializing in distinct tasks can be combined into one model capable of solving all tasks without retraining. Yet, this success does not transfer well when merging LoRA finetuned models. We study this phenomenon and observe that the weights of LoRA finetuned models showcase a lower degree of alignment compared to their fully-finetuned counterparts. We hypothesize that improving this alignment is key to obtaining better LoRA model merges, and propose KnOTS to address this problem. KnOTS uses the SVD to jointly transform the weights of different LoRA models into an aligned space, where existing merging methods can be applied. In addition, we introduce a new benchmark that explicitly evaluates whether merged models are general models. Notably, KnOTS consistently improves LoRA merging by up to 4.3% across several vision and language benchmarks, including our new setting. We release our code at: https://github.com/gstoica27/KnOTS.
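The joint SVD step described in the abstract can be illustrated with a minimal NumPy sketch. It is not the released KnOTS code. It assumes each LoRA update is available as a dense matrix `B @ A`, that the updates for one layer are concatenated column-wise before a single SVD, and that plain averaging stands in for the "existing merging method". The names `joint_svd_merge` and `merge_fn` are hypothetical.

```python
import numpy as np

def joint_svd_merge(deltas, merge_fn=None):
    """Merge per-task weight updates in a shared SVD basis (illustrative sketch).

    deltas:   list of (d_out, d_in) arrays, e.g. LoRA updates B @ A for one layer.
    merge_fn: maps an (n_tasks, rank, d_in) array to a (rank, d_in) array.
              Assumption: simple averaging is used when none is given.
    """
    if merge_fn is None:
        merge_fn = lambda parts: parts.mean(axis=0)
    d_in = deltas[0].shape[1]

    # Concatenate all task updates column-wise and decompose them jointly,
    # so every task is expressed in the same left basis U.
    stacked = np.concatenate(deltas, axis=1)            # (d_out, n_tasks * d_in)
    U, S, Vt = np.linalg.svd(stacked, full_matrices=False)

    # Drop numerically zero directions (LoRA updates are low rank).
    rank = int(np.sum(S > 1e-10 * S[0])) if S[0] > 0 else 0
    U, S, Vt = U[:, :rank], S[:rank], Vt[:rank]

    # Split the right factor back into one block per task.
    parts = np.stack([Vt[:, i * d_in:(i + 1) * d_in] for i in range(len(deltas))])

    # Merge the task-specific blocks, then map back to weight space.
    return (U * S) @ merge_fn(parts)

# Usage: three rank-2 LoRA updates for an 8x6 layer (random stand-in data).
rng = np.random.default_rng(0)
deltas = [rng.normal(size=(8, 2)) @ rng.normal(size=(2, 6)) for _ in range(3)]
merged = joint_svd_merge(deltas)
```

With averaging as `merge_fn`, the result is the same as averaging the updates directly, because the map back to weight space is linear. The shared basis only changes the outcome when a nonlinear merging rule (for example, one that prunes or sign-selects entries) is passed in.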

AUGCAL: Improving Sim2Real Adaptation by Uncertainty Calibration on Augmented Synthetic Images

Dec 19, 2023

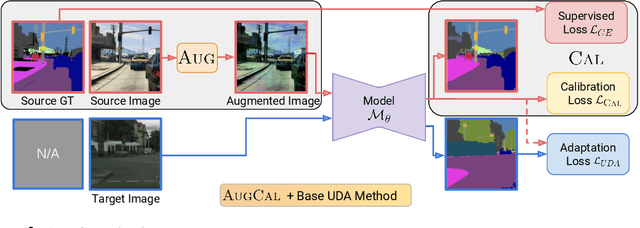

Abstract: Synthetic data (SIM) drawn from simulators have emerged as a popular alternative for training models where acquiring annotated real-world images is difficult. However, transferring models trained on synthetic images to real-world applications can be challenging due to appearance disparities. A commonly employed solution to counter this SIM2REAL gap is unsupervised domain adaptation, where models are trained using labeled SIM data and unlabeled REAL data. Mispredictions made by such SIM2REAL adapted models are often associated with miscalibration, stemming from overconfident predictions on real data. In this paper, we introduce AUGCAL, a simple training-time patch for unsupervised adaptation that improves SIM2REAL adapted models by (1) reducing overall miscalibration, (2) reducing overconfidence in incorrect predictions and (3) improving confidence score reliability by better guiding misclassification detection, all while retaining or improving SIM2REAL performance. Given a base SIM2REAL adaptation algorithm, at training time, AUGCAL involves replacing vanilla SIM images with strongly augmented views (AUG intervention) and additionally optimizing for a training time calibration loss on augmented SIM predictions (CAL intervention). We motivate AUGCAL using a brief analytical justification of how to reduce miscalibration on unlabeled REAL data. Through our experiments, we empirically show the efficacy of AUGCAL across multiple adaptation methods, backbones, tasks and shifts.
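As a rough illustration of the CAL intervention, the sketch below adds a calibration penalty to the supervised loss computed on augmented SIM predictions. The abstract does not say which calibration loss is used, so the gap between mean confidence and accuracy is an assumed choice here. The function names and the weight `lam` are hypothetical, and the base adaptation algorithm's own loss on unlabeled REAL data is left out.

```python
import numpy as np

def softmax(logits):
    # Numerically stable softmax over the class axis.
    z = logits - logits.max(axis=1, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=1, keepdims=True)

def cross_entropy(probs, labels):
    # Mean negative log-likelihood of the true class.
    picked = probs[np.arange(len(labels)), labels]
    return float(-np.mean(np.log(picked + 1e-12)))

def confidence_accuracy_gap(probs, labels):
    # Assumed calibration penalty: |mean top-class confidence - batch accuracy|.
    confidence = probs.max(axis=1).mean()
    accuracy = (probs.argmax(axis=1) == labels).mean()
    return float(abs(confidence - accuracy))

def augcal_style_loss(aug_logits, labels, lam=1.0):
    """Supervised loss on augmented SIM predictions plus a calibration term.

    aug_logits: (batch, classes) model outputs on strongly augmented SIM images.
    labels:     (batch,) integer SIM labels.
    lam:        hypothetical weight on the calibration term.
    """
    probs = softmax(aug_logits)
    return cross_entropy(probs, labels) + lam * confidence_accuracy_gap(probs, labels)
```

The penalty is small when average confidence tracks accuracy and grows when the model is confidently wrong, which is the overconfidence pattern the abstract describes.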
