Bijan Pesaran

Deep Cross-Subject Mapping of Neural Activity

Jul 13, 2020

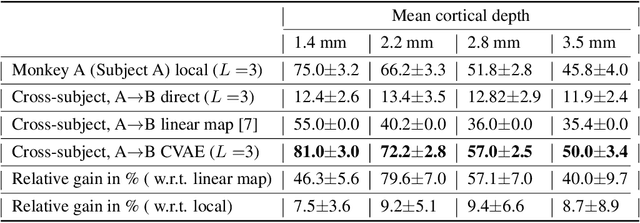

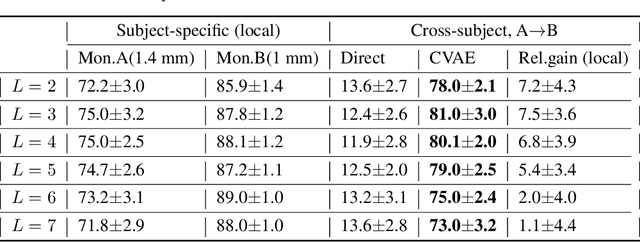

Abstract: In this paper, we demonstrate that a neural decoder trained on the neural activity signals of one subject can be used to *robustly* decode the motor intentions of a different subject with high reliability. This is achieved in spite of the non-stationary nature of neural activity signals and the subject-specific variations of the recording conditions. Our proposed algorithm for cross-subject mapping of neural activity is based on deep conditional generative models. We verify the results on an experimental data set in which two macaque monkeys perform memory-guided visual saccades to one of eight target locations.

Cross-subject Decoding of Eye Movement Goals from Local Field Potentials

Nov 21, 2019

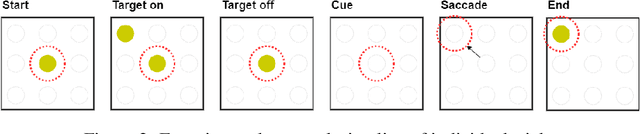

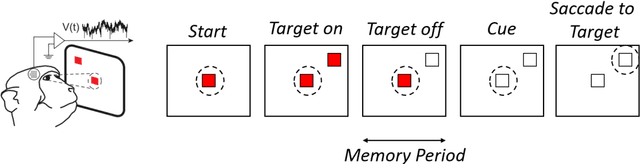

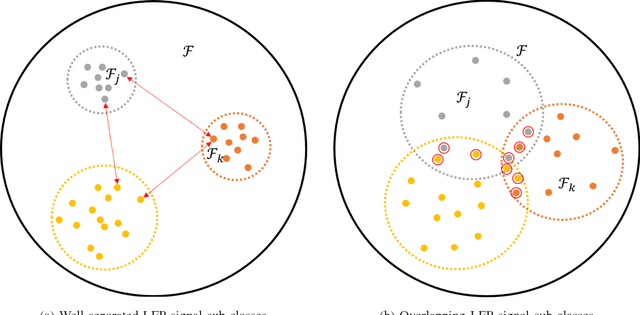

Abstract: Objective. We consider the cross-subject decoding problem from local field potential (LFP) activity, where training data collected from the prefrontal cortex of one subject (the source) is used to decode intended motor actions in another subject (the destination). Approach. We propose a novel pre-processing technique, referred to as data centering, which adapts the feature space of the source to the feature space of the destination. The key ingredients of data centering are the transfer functions used to model the deterministic component of the relationship between the source and destination feature spaces. We also develop an efficient data-driven estimation approach for linear transfer functions that uses the first- and second-order moments of the class-conditional distributions. Main result. We apply our techniques to cross-subject decoding of eye movement directions in an experiment where two macaque monkeys perform memory-guided visual saccades to one of eight target locations. The results show a peak cross-subject decoding performance of $80\%$, which marks a substantial improvement over a random-choice decoder. Significance. The analyses presented herein demonstrate that data centering is a viable novel technique for reliable cross-subject brain-computer interfacing.
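
To make the idea of a linear transfer function estimated from class-conditional moments more concrete, here is a minimal sketch. It is not the paper's estimator: it assumes one plausible construction, a per-class whitening-and-recoloring map that matches the mean and covariance of each source class to those of the same class in the destination. The function names (`center_source_to_destination`, `_sqrtm_psd`) and the assumption that a small labeled destination set is available are hypothetical.

```python
import numpy as np

def _sqrtm_psd(mat, inverse=False, eps=1e-8):
    """Square root (or inverse square root) of a symmetric PSD matrix."""
    vals, vecs = np.linalg.eigh(mat)
    vals = np.clip(vals, eps, None)  # guard against tiny or negative eigenvalues
    power = -0.5 if inverse else 0.5
    return (vecs * vals ** power) @ vecs.T

def center_source_to_destination(X_src, y_src, X_dst, y_dst):
    """Map labeled source features into the destination feature space.

    Assumed (hypothetical) linear transfer function for class c:
        x -> A_c (x - mu_src_c) + mu_dst_c,
        A_c = cov_dst_c^{1/2} cov_src_c^{-1/2},
    so each mapped source class has the destination's mean and covariance.
    Every source class must also appear in the destination labels.
    """
    X_out = np.empty_like(X_src, dtype=float)
    for label in np.unique(y_src):
        src = X_src[y_src == label]
        dst = X_dst[y_dst == label]
        mu_s, mu_d = src.mean(axis=0), dst.mean(axis=0)
        cov_s = np.cov(src, rowvar=False)
        cov_d = np.cov(dst, rowvar=False)
        A = _sqrtm_psd(cov_d) @ _sqrtm_psd(cov_s, inverse=True)
        X_out[y_src == label] = (src - mu_s) @ A.T + mu_d
    return X_out
```

A decoder would then be trained on the mapped source features and evaluated on destination trials. The sketch needs at least two feature dimensions and more trials per class than features, so that the class covariances are well conditioned.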

Minimax-optimal decoding of movement goals from local field potentials using complex spectral features

Jan 29, 2019

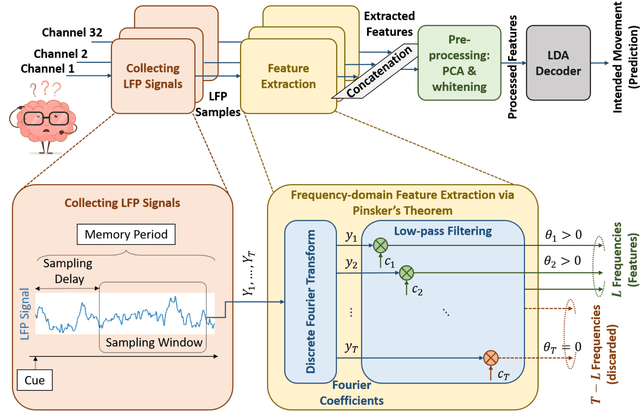

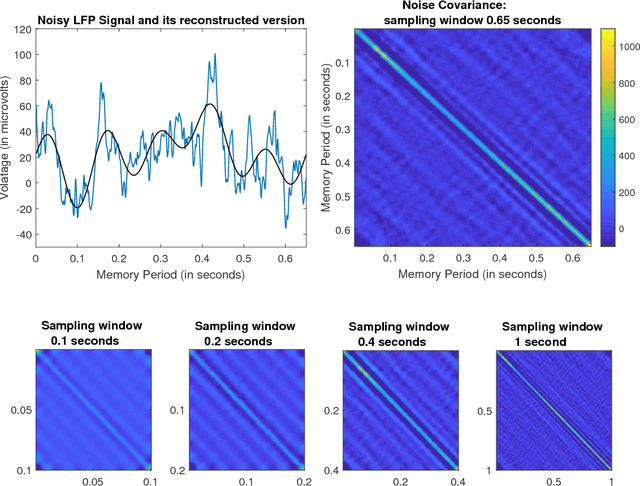

Abstract: We consider the problem of predicting eye movement goals from local field potentials (LFP) recorded through a multielectrode array in the macaque prefrontal cortex. The monkey is tasked with performing memory-guided saccades to one of eight targets, during which LFP activity is recorded and used to train a decoder. Previous reports have mainly relied on the spectral amplitude of the LFPs as a feature in the decoding step, with limited success, while neglecting the phase without proper theoretical justification. This paper formulates the problem of decoding eye movement intentions in a statistically optimal framework and uses Gaussian sequence modeling and Pinsker's theorem to generate minimax-optimal estimates of the LFP signals, which are later used as features in the decoding step. The approach is shown to act as a low-pass filter: each LFP in the feature space is represented via its complex Fourier coefficients after appropriate shrinking, such that higher-frequency components are attenuated. This way, the phase information inherently present in the LFP signal is naturally embedded into the feature space. The proposed complex spectrum-based decoder achieves a prediction accuracy of up to $94\%$ at superficial electrode depths near the surface of the prefrontal cortex, which marks a significant performance improvement over conventional power spectrum-based decoders.
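
The shrinkage step described above can be illustrated with a short sketch. It assumes Pinsker-style weights of the form $(1 - (k/K)^{\alpha})_+$ applied to the Fourier coefficient at frequency index $k$. The cutoff `cutoff` ($K$) and smoothness `alpha` ($\alpha$) are hypothetical tuning parameters, and the function names are illustrative. None of these specifics are taken from the paper.

```python
import numpy as np

def pinsker_weights(n_coeffs, cutoff, alpha=2.0):
    """Shrinkage weights (1 - (k / cutoff)^alpha)_+ for frequency indices k.

    Weights decay from 1 at k = 0 to 0 at k >= cutoff, so the estimator
    behaves like a smooth low-pass filter.
    """
    k = np.arange(n_coeffs)
    return np.clip(1.0 - (k / cutoff) ** alpha, 0.0, None)

def complex_spectral_features(lfp, cutoff, alpha=2.0):
    """Turn one real-valued LFP trace into a complex-spectrum feature vector.

    The complex Fourier coefficients are shrunk, the ones with nonzero
    weight are kept, and their real and imaginary parts are stacked so
    that both amplitude and phase reach the decoder.
    """
    lfp = np.asarray(lfp, dtype=float)
    coeffs = np.fft.rfft(lfp) / lfp.size            # normalized complex spectrum
    shrunk = pinsker_weights(coeffs.size, cutoff, alpha) * coeffs
    keep = min(int(np.ceil(cutoff)), coeffs.size)   # indices with nonzero weight
    shrunk = shrunk[:keep]
    return np.concatenate([shrunk.real, shrunk.imag])
```

Because the features are the real and imaginary parts rather than the squared magnitude, two signals with the same power spectrum but different phases map to different feature vectors. A power spectrum-based feature would discard exactly that distinction.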
