Bihai Zhang

VVRec: Reconstruction Attacks on DL-based Volumetric Video Upstreaming via Latent Diffusion Model with Gamma Distribution

Feb 25, 2025

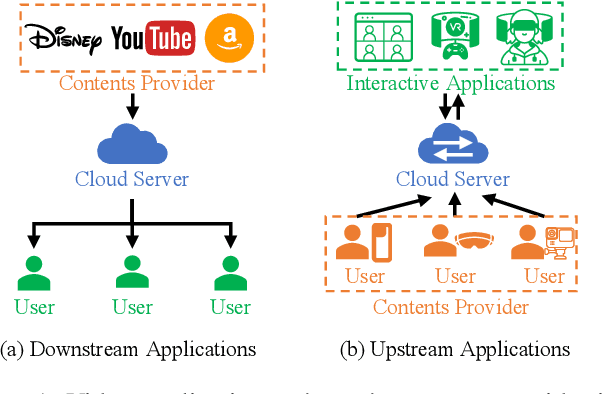

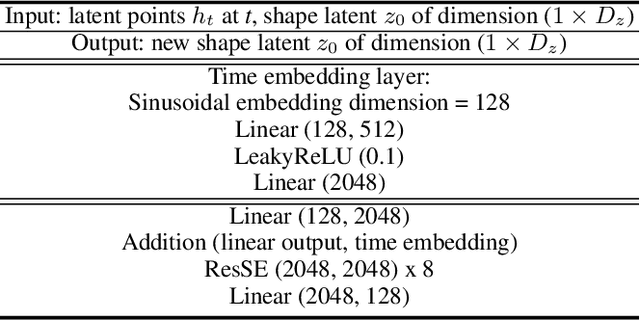

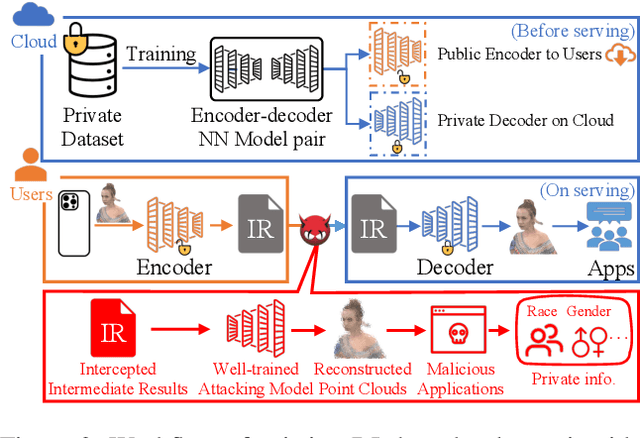

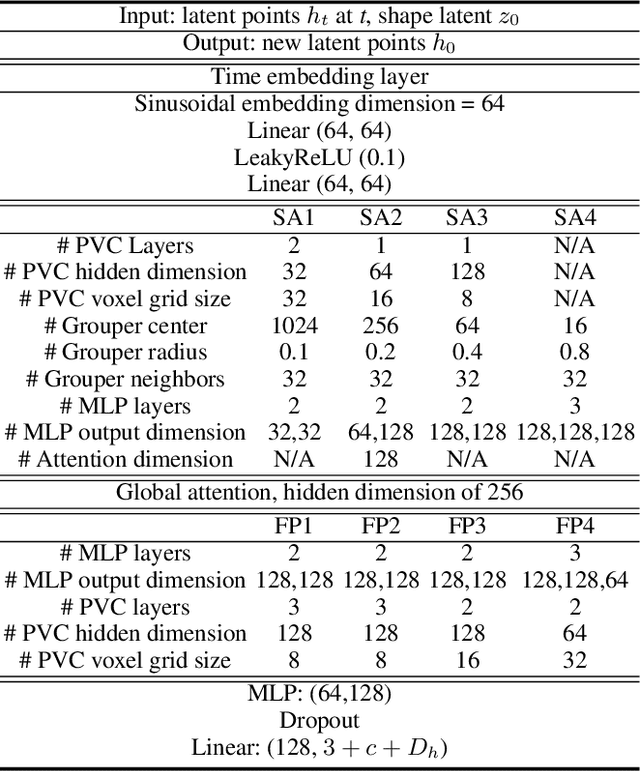

Abstract: With the popularity of 3D volumetric video applications, such as Autonomous Driving, Virtual Reality, and Mixed Reality, developers have turned to deep learning to compress volumetric video frames, i.e., point clouds, for video upstreaming. The latest deep learning-based solutions offer higher efficiency, lower distortion, and better hardware support than traditional ones like MPEG and JPEG. However, privacy threats arise, especially reconstruction attacks that aim to recover the original input point cloud from the intermediate results. In this paper, we design VVRec, which is, to the best of our knowledge, the first reconstruction attack scheme targeting DL-based volumetric video. VVRec demonstrates the ability to reconstruct high-quality point clouds from intercepted intermediate transmission results using four well-trained neural network modules that we design. Leveraging the latest latent diffusion models with Gamma distribution and a refinement algorithm, VVRec excels in reconstruction quality and color recovery, and it surpasses existing defenses. We evaluate VVRec using three volumetric video datasets. The results demonstrate that VVRec achieves 64.70 dB reconstruction accuracy, with a 46.39% reduction in distortion over baselines.

Privacy Protectability: An Information-theoretical Approach

May 25, 2023

Abstract: Recently, inference privacy has attracted increasing attention. The inference privacy concern arises most notably in the widely deployed edge-cloud video analytics systems, where the cloud needs the videos captured at the edge. The video data can contain sensitive information and is subject to attack when it is transmitted to the cloud for inference. Many privacy protection schemes have been proposed. Yet the performance of a scheme has to be determined by experiments or inferred by analyzing the specific case. In this paper, we propose a new metric, *privacy protectability*, to characterize the degree to which a video stream can be protected given a certain video analytics task. Such a metric has strong operational meaning. For example, low protectability means that it may be necessary to set up an overall secure environment. We can also evaluate a privacy protection scheme: for example, if a scheme obfuscates the video data, the metric indicates what level of protection the scheme has achieved after obfuscation. Our definition of privacy protectability is rooted in information theory, and we develop efficient algorithms to estimate the metric. We use experiments on real data to validate that our metric is consistent with empirical measurements of how well a video stream can be protected for a video analytics task.
