Bhaskar Sen

Calculation of Sub-bands {1,2,5,6} for 64-Point Complex FFT and Its extension to N Point FFT

Mar 08, 2022

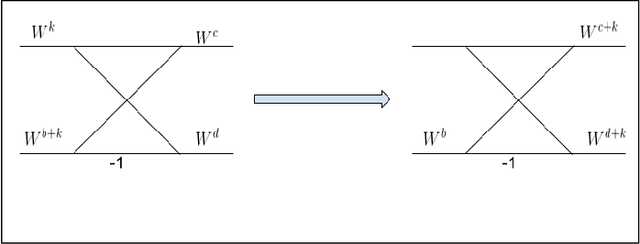

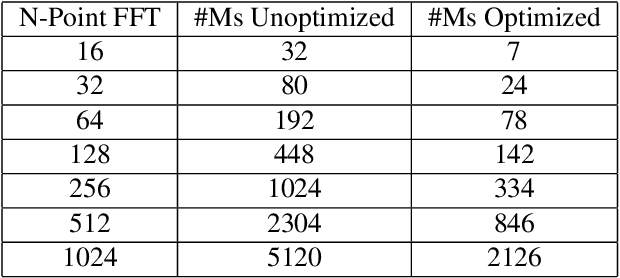

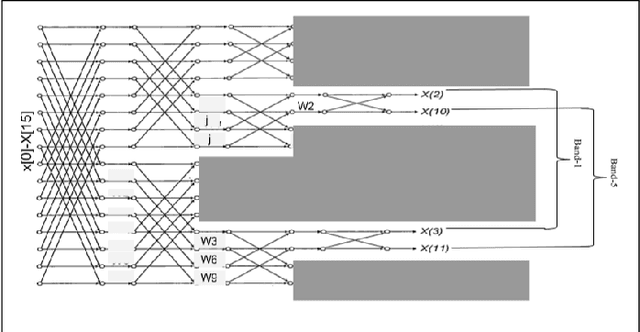

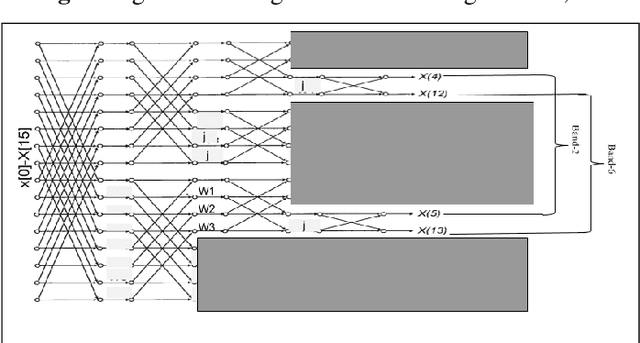

Abstract: The FFT is one of the most widely applied algorithms in digital signal processing. Digital signal processing has gradually become important in biomedical applications, where hardware implementations of FFTs have found useful applications in bio-wearable devices. However, for these devices, low power and low area are of utmost importance. In this report, we investigate a sub-structure of the decimation-in-frequency (DIF) FFT where a number of sub-bands are of interest to us. Specifically, we divide the range of frequencies into 8 sub-bands (0-7) and calculate 4 of them (1, 2, 5, 6). We show that using concepts like pushing and radix-2^2, the number of complex multiplications can be drastically reduced for 16-point, 32-point and 64-point FFTs while computing those specific bands. Later, we also extend it to an N = 2^n-point FFT based on the optimized 64-point FFT structure. The number of complex multiplications is further reduced using merge-FFT. Our results show that the number of multiplications (and hence power) can be reduced greatly using our optimized structure compared to an unoptimized structure. This can find application in biomedical signal processing, specifically while computing the power spectral density of a physiological time series, where reducing computational power is of utmost importance.

Support-BERT: Predicting Quality of Question-Answer Pairs in MSDN using Deep Bidirectional Transformer

May 17, 2020

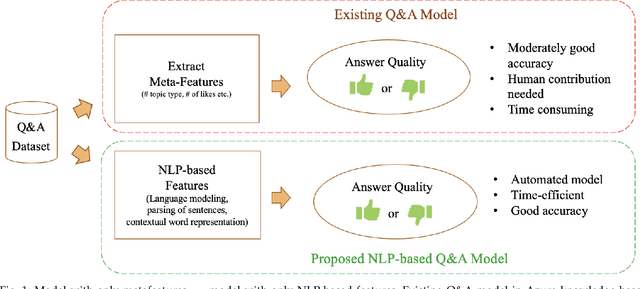

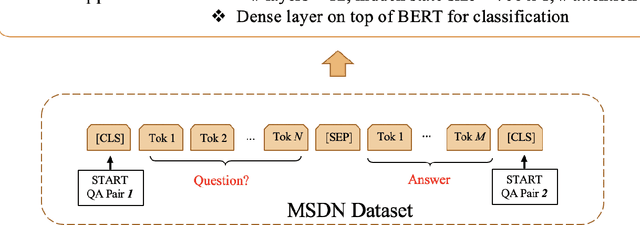

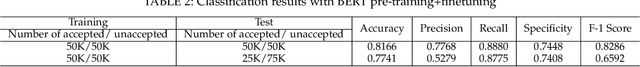

Abstract: The quality of questions and answers on community support websites (e.g. Microsoft Developer Network, Stack Overflow, GitHub, etc.) is difficult to define, and a prediction model for question and answer quality is even more challenging to implement. Previous works have addressed question quality models and answer quality models separately, using meta-features such as the number of up-votes, the trustworthiness of the person posting the questions or answers, the titles of the posts, and context-naive natural language processing features. However, the literature lacks an integrated question-answer quality model for community question answering websites. In this brief paper, we tackle the Q&A quality modeling problem for community support websites using a recently developed deep learning model based on bidirectional transformers. We investigate the applicability of transfer learning to Q&A quality modeling using Bidirectional Encoder Representations from Transformers (BERT), originally trained on separate tasks using Wikipedia. It is found that further pre-training of the BERT model, along with fine-tuning on the Q&As extracted from Microsoft Developer Network (MSDN), can boost the performance of automated quality prediction to more than 80%. Furthermore, implementations are carried out for deploying the fine-tuned model in a real-time scenario using AzureML in the Azure knowledge base system.
