Benjamin Pyenson

Beyond Tracking: Using Deep Learning to Discover Novel Interactions in Biological Swarms

Aug 20, 2021

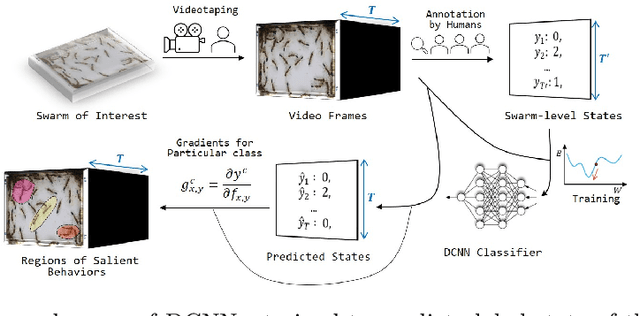

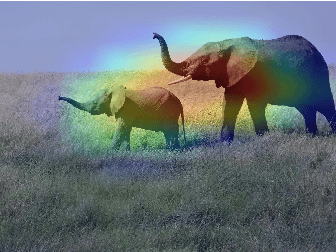

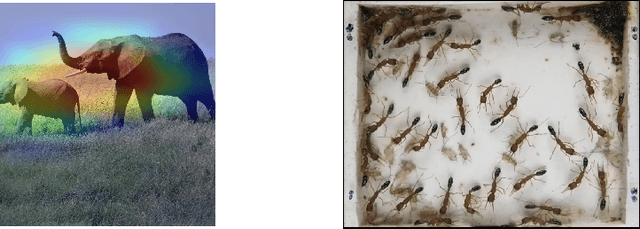

Abstract: Most deep-learning frameworks for understanding biological swarms are designed to fit perceptive models of group behavior to individual-level data (e.g., spatial coordinates of identified features of individuals) that have been separately gathered from video observations. Despite considerable advances in automated tracking, these methods are still very expensive or unreliable when tracking large numbers of animals simultaneously. Moreover, this approach assumes that the human-chosen features include sufficient features to explain important patterns in collective behavior. To address these issues, we propose training deep network models to predict system-level states directly from generic graphical features from the entire view, which can be relatively inexpensive to gather in a completely automated fashion. Because the resulting predictive models are not based on human-understood predictors, we use explanatory modules (e.g., Grad-CAM) that combine information hidden in the latent variables of the deep-network model with the video data itself to communicate to a human observer which aspects of observed individual behaviors are most informative in predicting group behavior. This represents an example of augmented intelligence in behavioral ecology -- knowledge co-creation in a human-AI team. As proof of concept, we utilize a 20-day video recording of a colony of over 50 Harpegnathos saltator ants to showcase that, without any individual annotations provided, a trained model can generate an "importance map" across the video frames to highlight regions of important behaviors, such as dueling (which the AI has no a priori knowledge of), that play a role in the resolution of reproductive-hierarchy re-formation. Based on the empirical results, we also discuss the potential use and current challenges.
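The "importance map" mentioned in the abstract comes from an explanatory module such as Grad-CAM. The following is a minimal sketch of the generic Grad-CAM computation, not the authors' implementation. It assumes the feature maps of one convolutional layer and the gradients of the predicted score with respect to those maps have already been extracted from a trained network; the function name `grad_cam` and the nested-list data layout are illustrative choices.

```python
def grad_cam(activations, gradients):
    """Generic Grad-CAM heat map (illustrative sketch).

    activations: list of K feature maps, each an H x W nested list.
    gradients:   gradients of the target score w.r.t. each feature map,
                 same shape as `activations`.
    Both inputs are assumed to come from a trained network.
    """
    height = len(activations[0])
    width = len(activations[0][0])

    # Channel weight: spatial average of that channel's gradient.
    weights = [
        sum(sum(row) for row in grad_map) / (height * width)
        for grad_map in gradients
    ]

    # Weighted sum over channels, then ReLU to keep only positive evidence.
    cam = [
        [
            max(0.0, sum(w * act[i][j] for w, act in zip(weights, activations)))
            for j in range(width)
        ]
        for i in range(height)
    ]

    # Scale to [0, 1] so the map can be overlaid on a video frame.
    peak = max(max(row) for row in cam)
    if peak > 0:
        cam = [[value / peak for value in row] for row in cam]
    return cam
```

In practice the resulting map is upsampled to the frame resolution and overlaid on the video, so that a human observer can inspect which regions drove the prediction.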

Identification of Abnormal States in Videos of Ants Undergoing Social Phase Change

Sep 18, 2020

Abstract: Biology is both an important application area and a source of motivation for development of advanced machine learning techniques. Although much attention has been paid to large and complex data sets resulting from high-throughput sequencing, advances in high-quality video recording technology have begun to generate similarly rich data sets requiring sophisticated techniques from both computer vision and time-series analysis. Moreover, just as studying gene expression patterns in one organism can reveal general principles that apply to other organisms, the study of complex social interactions in an experimentally tractable model system, such as a laboratory ant colony, can provide general principles about the dynamics of many other social groups. Here, we focus on one such example from the study of reproductive regulation in small laboratory colonies of $\sim$50 Harpegnathos ants. These ants can be artificially induced to begin a $\sim$20 day process of hierarchy reformation. Although the conclusion of this process is conspicuous to a human observer, it is still unclear which behaviors during the transients are contributing to the process. To address this issue, we explore the potential application of One-class Classification (OC) to the detection of abnormal states in ant colonies for which behavioral data is only available for the normal societal conditions during training. Specifically, we build upon the Deep Support Vector Data Description (DSVDD) and introduce the Inner-Outlier Generator (IO-GEN) that synthesizes fake "inner outlier" observations during training that are near the center of the DSVDD data description. We show that IO-GEN increases the reliability of the final OC classifier relative to other DSVDD baselines. This method can be used to screen video frames for which additional human observation is needed.
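The one-class idea behind DSVDD can be illustrated with a small sketch: embeddings of normal frames are pulled toward a center, and a frame is scored by its squared distance from that center. The code below is only an illustration under stated assumptions. It takes embeddings as precomputed vectors (the network that produces them is not shown), it does not implement IO-GEN, and the function names and the fixed threshold are hypothetical.

```python
def center_of(embeddings):
    """Mean of the embeddings of normal training frames (the DSVDD center)."""
    count = len(embeddings)
    dim = len(embeddings[0])
    return [sum(e[d] for e in embeddings) / count for d in range(dim)]


def anomaly_score(embedding, center):
    """Squared Euclidean distance to the center; larger means more abnormal."""
    return sum((x - c) ** 2 for x, c in zip(embedding, center))


def flag_frames(scores, threshold):
    """Indices of frames whose score exceeds a user-chosen threshold (assumed)."""
    return [index for index, score in enumerate(scores) if score > threshold]
```

A usage example with made-up two-dimensional embeddings:

```python
normal = [[0, 0], [2, 0], [0, 2], [2, 2]]
center = center_of(normal)
scores = [anomaly_score(e, center) for e in [[1, 1], [4, 5], [1, 2]]]
to_review = flag_frames(scores, threshold=2.0)  # frames to send to a human observer
```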
