Benito Buchheim

Controlling Human Shape and Pose in Text-to-Image Diffusion Models via Domain Adaptation

Nov 07, 2024

Abstract: We present a methodology for conditional control of human shape and pose in pretrained text-to-image diffusion models using a 3D human parametric model (SMPL). Fine-tuning these diffusion models to adhere to new conditions requires large datasets and high-quality annotations, which can be acquired more cost-effectively through synthetic data generation than from real-world data. However, the domain gap and low scene diversity of synthetic data can compromise the pretrained model's visual fidelity. We propose a domain-adaptation technique that maintains image quality by isolating synthetically trained conditional information in the classifier-free guidance vector and composing it with another control network to adapt the generated images to the input domain. To achieve SMPL control, we fine-tune a ControlNet-based architecture on the synthetic SURREAL dataset of rendered humans and apply our domain adaptation at generation time. Experiments demonstrate that our model achieves greater shape and pose diversity than the 2D pose-based ControlNet, while maintaining visual fidelity and improving stability, demonstrating its usefulness for downstream tasks such as human animation.

Controlling Geometric Abstraction and Texture for Artistic Images

Jul 31, 2023

Abstract: We present a novel method for the interactive control of geometric abstraction and texture in artistic images. Previous example-based stylization methods often entangle shape, texture, and color, while generative methods for image synthesis generally either make assumptions about the input image, such as only allowing faces, or do not offer precise editing controls. By contrast, our holistic approach spatially decomposes the input into shapes and a parametric representation of high-frequency details comprising the image's texture, thus enabling independent control of color and texture. Each parameter in this representation controls painterly attributes of a pipeline of differentiable stylization filters. The proposed decoupling of shape and texture enables various options for stylistic editing, including interactive global and local adjustments of shape, stroke, and painterly attributes such as surface relief and contours. Additionally, we demonstrate optimization-based texture style transfer in the parametric space using reference images and text prompts, as well as the training of single-style and arbitrary-style parameter prediction networks for real-time texture decomposition.

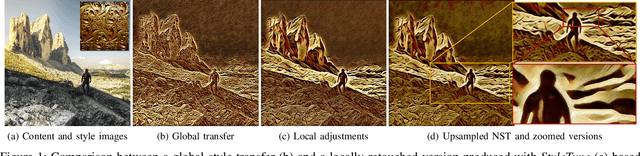

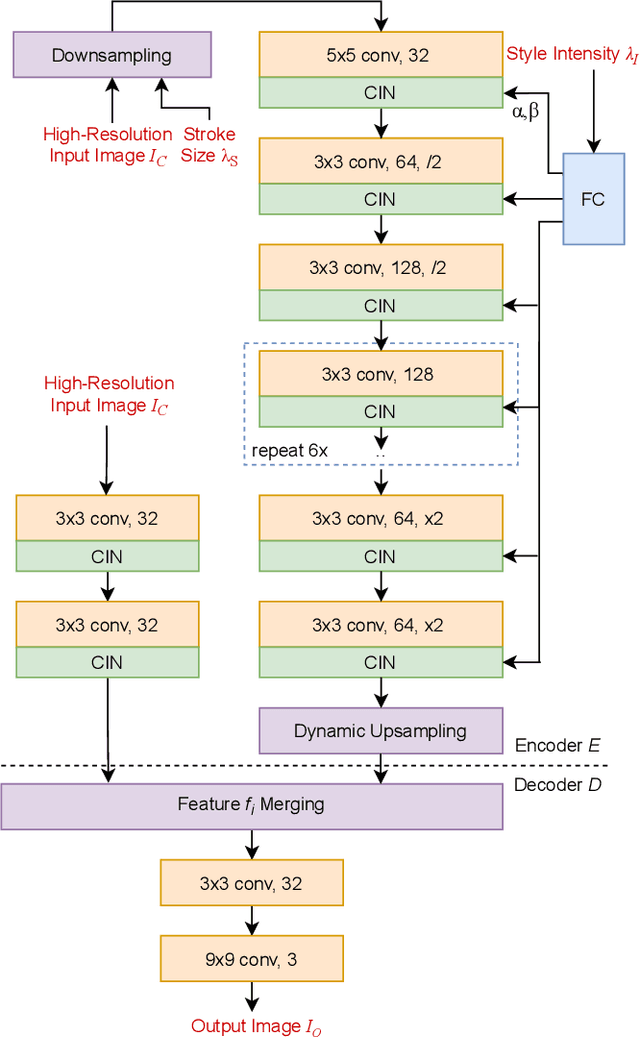

Interactive Multi-level Stroke Control for Neural Style Transfer

Jun 25, 2021

Abstract: We present StyleTune, a mobile app for interactive multi-level control of neural style transfers that facilitates creative adjustments of style elements and enables high output fidelity. In contrast to current mobile neural style transfer apps, StyleTune lets users adjust both the size and orientation of style elements, such as brushstrokes and texture patches, on a global as well as a local level. To this end, we propose a novel stroke-adaptive feed-forward style transfer network that enables control over stroke size and intensity and allows a larger range of edits than current approaches. For an additional level of control, we propose a network-agnostic method for stroke-orientation adjustment that utilizes the rotation variance of CNNs. To achieve high output fidelity, we further add a patch-based style transfer method that enables users to obtain output resolutions of more than 20 megapixels. Our approach empowers users to create many novel results that are not possible with current mobile neural style transfer apps.
