Bedrettin Cetinkaya

RSPose: Ranking Based Losses for Human Pose Estimation

Nov 17, 2025

Abstract:While heatmap-based human pose estimation methods have shown strong performance, they suffer from three main problems: (P1) the commonly used Mean Squared Error (MSE) loss may not always improve joint localization because it penalizes all pixel deviations equally, without focusing explicitly on sharpening and correctly localizing the peak corresponding to the joint; (P2) heatmaps are spatially and class-wise imbalanced; and (P3) there is a discrepancy between the evaluation metric (i.e., mAP) and the loss functions. We propose ranking-based losses to address these issues. Both theoretically and empirically, we show that our proposed losses are superior to commonly used heatmap losses (MSE, KL-Divergence). Our losses considerably increase the correlation between confidence scores and localization qualities, which is desirable because higher correlation leads to more accurate instance selection during Non-Maximum Suppression (NMS) and better mean Average Precision (mAP) performance. We refer to the models trained with our losses as RSPose. We show the effectiveness of RSPose across two different modes, one-dimensional and two-dimensional heatmaps, on three different datasets (COCO, CrowdPose, MPII). To the best of our knowledge, we are the first to propose losses that align with the evaluation metric (mAP) for human pose estimation. RSPose outperforms the previous state of the art on the COCO-val set and achieves an mAP score of 79.9 with ViTPose-H, a vision transformer model for human pose estimation. We also improve SimCC ResNet-50, a coordinate classification-based pose estimation method, by 1.5 AP on the COCO-val set, achieving 73.6 AP.

RankED: Addressing Imbalance and Uncertainty in Edge Detection Using Ranking-based Losses

Mar 07, 2024

Abstract:Detecting edges in images suffers from the problems of (P1) heavy imbalance between positive and negative classes as well as (P2) label uncertainty owing to disagreement between different annotators. Existing solutions address P1 using class-balanced cross-entropy loss and dice loss, and P2 by only predicting edges agreed upon by most annotators. In this paper, we propose RankED, a unified ranking-based approach that addresses both the imbalance problem (P1) and the uncertainty problem (P2). RankED tackles these two problems with two components: one that ranks positive pixels over negative pixels, and another that promotes high-confidence edge pixels to have more label certainty. We show that RankED outperforms previous studies and sets a new state of the art on the NYUD-v2, BSDS500 and Multi-cue datasets. Code is available at https://ranked-cvpr24.github.io.
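To make the idea of "ranking positive pixels over negative pixels" concrete, here is a minimal sketch of an average-precision-style ranking error over a flat list of pixel scores. It is an illustration only, not the RankED loss itself: the function name `ranking_error` and the toy inputs are hypothetical, and the measure is computed with hard counts, so it is not differentiable as written.

```python
def ranking_error(scores, labels):
    """Mean, over positive pixels, of the share of negatives among the
    pixels ranked at or above that positive (0.0 means a perfect ranking).

    scores: predicted confidences, one per pixel (hypothetical toy input).
    labels: 1 for an edge (positive) pixel, 0 for a non-edge (negative) pixel.
    """
    positives = [s for s, y in zip(scores, labels) if y == 1]
    negatives = [s for s, y in zip(scores, labels) if y == 0]
    if not positives:
        return 0.0

    total = 0.0
    for p in positives:
        # Negatives scored strictly higher than this positive are ranking mistakes.
        negatives_above = sum(1 for n in negatives if n > p)
        # Positives scored at least as high, including this positive itself.
        positives_at_or_above = sum(1 for q in positives if q >= p)
        total += negatives_above / (negatives_above + positives_at_or_above)
    return total / len(positives)


# A heavily imbalanced toy example: one edge pixel among many non-edge pixels.
perfect = ranking_error([0.9, 0.2, 0.1, 0.1], [1, 0, 0, 0])
mistaken = ranking_error([0.1, 0.9], [1, 0])
```

Because the error is averaged over positives only, the number of low-scoring negatives does not change its value, which is one reason ranking-style objectives are attractive under heavy class imbalance.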

A New Multi-Level Hazy Image and Video Dataset for Benchmark of Dehazing Methods

Jul 29, 2023

Abstract:The changing level of haze is one of the main factors that affect the success of dehazing methods. However, the literature lacks a controlled multi-level hazy dataset. Therefore, in this study, a new multi-level hazy color image dataset is presented. Color video data is captured for two real scenes with a controlled level of haze. The distance of the scene objects from the camera, the haze level, and the ground truth (clear image) are available so that different dehazing methods and models can be benchmarked. In this study, the dehazing performance of five different dehazing methods/models is compared on the dataset based on the SSIM, PSNR, VSI and DISTS image quality metrics. Results show that traditional methods can generalize the dehazing problem better than many deep learning based methods. The performance of deep models depends mostly on the scene and is generally poor on cross-dataset dehazing.
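Of the four metrics named above, PSNR is the simplest to state. The following is a minimal sketch of how a dehazed frame could be scored against the clear ground-truth frame, assuming 8-bit pixel values supplied as flat lists; the function name `psnr` and the sample values are illustrative and do not come from the dataset or its evaluation code.

```python
import math


def psnr(reference, estimate, max_value=255.0):
    """Peak signal-to-noise ratio in dB between two equally sized images.

    reference: pixel values of the clear (ground-truth) image, as a flat list.
    estimate:  pixel values of the dehazed image, in the same order.
    max_value: largest possible pixel value (255 assumes 8-bit images).
    """
    if len(reference) != len(estimate) or not reference:
        raise ValueError("images must be non-empty and of equal size")

    # Mean squared error over all pixels.
    mse = sum((r - e) ** 2 for r, e in zip(reference, estimate)) / len(reference)
    if mse == 0:
        return math.inf  # identical images
    return 10.0 * math.log10(max_value ** 2 / mse)


# Hypothetical pixel values: each dehazed pixel is off by 10 gray levels.
score = psnr([100, 100], [110, 90])
```

Higher values indicate a closer match to the ground truth; SSIM, VSI and DISTS are perceptual metrics and need considerably more machinery than this.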

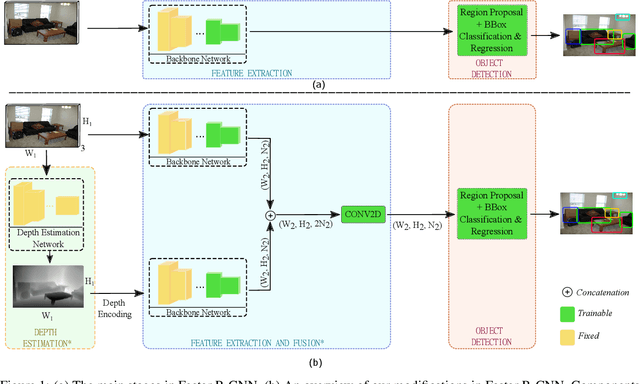

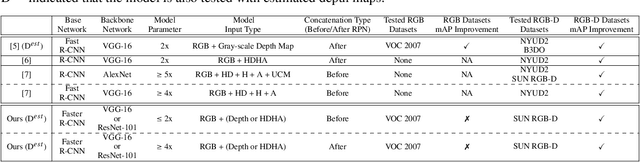

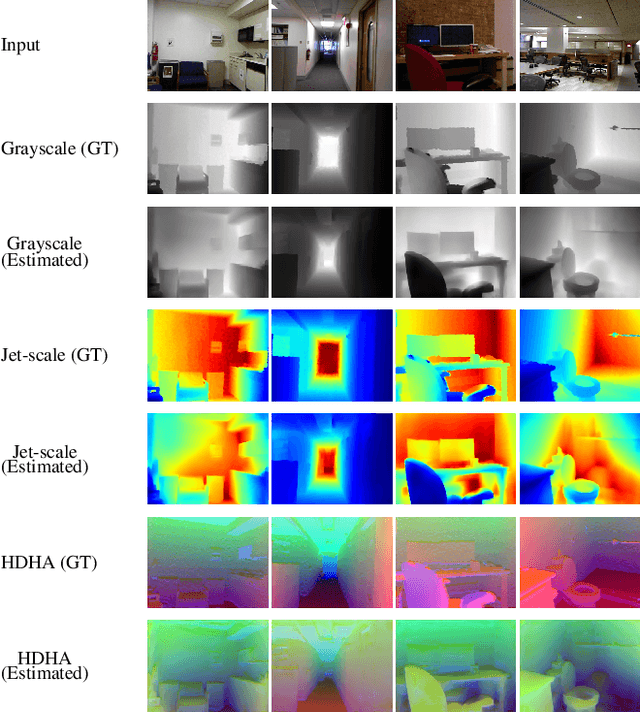

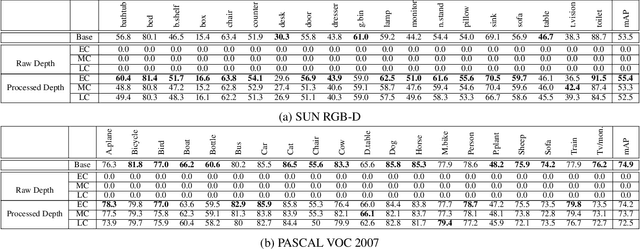

Does depth estimation help object detection?

Apr 13, 2022

Abstract:Ground-truth depth, when combined with color data, helps improve object detection accuracy over baseline models that only use color. However, estimated depth does not always yield improvements. Many factors affect the performance of object detection when estimated depth is used. In this paper, we comprehensively investigate these factors with detailed experiments, such as using ground-truth vs. estimated depth, the effects of different state-of-the-art depth estimation networks, the effects of using different indoor and outdoor RGB-D datasets as training data for depth estimation, and different architectural choices for integrating depth into the base object detector network. We propose an early concatenation strategy for depth, which yields higher mAP than previous works while using significantly fewer parameters.
